
OLAF’s Lens Art

This is a Geek Article, with very little practical information. But there are pretty pictures that non-Geeks might like. (Not the construction pictures, the ones further down.)

First, I should explain why I haven’t posted much lately. Lensrentals was able to expand into some adjacent space, which we desperately needed, but that meant moving and expanding the testing and repair departments into the new space. Over the last 10 days the second testing area went from this . . .

to this.

To this.

We were finally able to get some new repair workstations set up.

And moved OLAF to his own room where he’ll be joined shortly by our new MTF bench.

Pictures from OLAF

We did manage to squeeze in some time with OLAF to explore his capabilities a bit – although we aren’t done with that by any means. One of the first things we needed to do was set up standards for various lenses. Basically, this means testing known good and bad copies of different lenses so we can see what the reversed point spread images from OLAF should look like, and what they look like for different types of misalignment.

People like to think that adjusting a lens just means turning a knob somewhere and making all of the points nice and sharp. That is sort of true in the very center of the lens (not the turning a knob part, but making the central point nice and sharp).

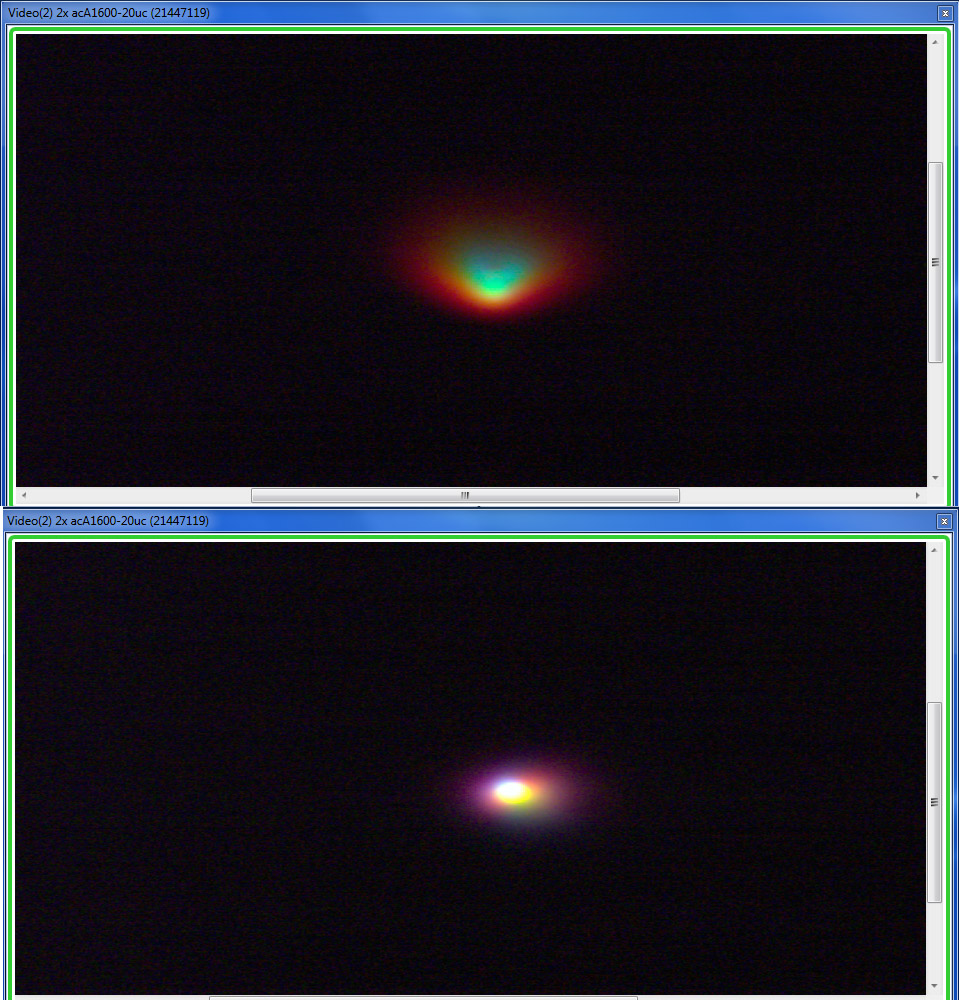

- Right at the center, every lens is capable of resolving a clear point. This image shows the center point of a lens before (top) and after (bottom) adjustment.

For a few lenses, like the Canon 50mm f/1.2 L and Nikon 70-200 f/2.8 VRII, sometimes that’s all that’s needed. OLAF is a champ with this kind of thing and lets us do these adjustments in minutes rather than hours.

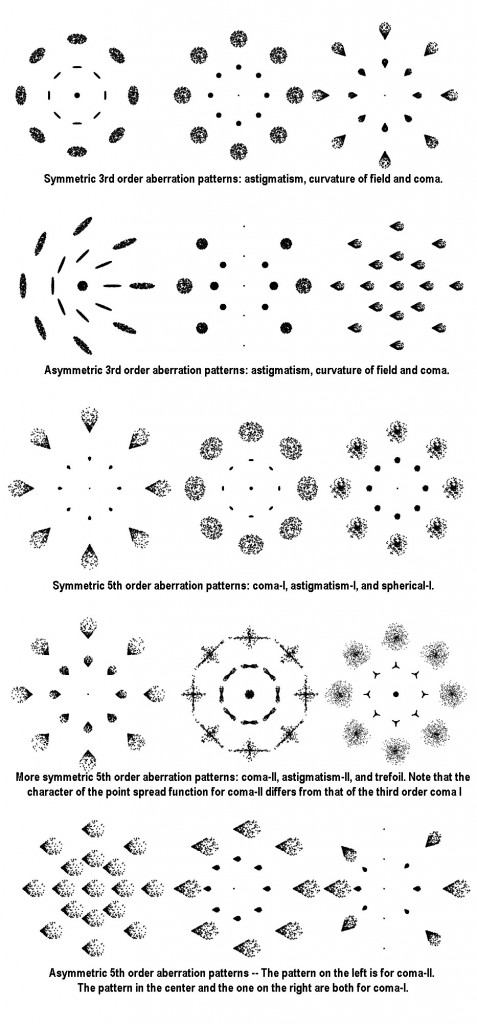

Unfortunately, centering one element is not the most common type of lens adjustment. Other adjustments require evaluation of things away from the center (properly called “off-axis”). Once you’re away from the center, five micron points don’t look much like points, even with very good lenses that are well-adjusted optically. In theory, a lens designer can calculate what each aberration in a given lens should make an off-axis point look like.

- The above patterns are taken from “Generic Misalignment Aberration Patterns and the Subspace of Benign Misalignment” by Paul L. Schechter and Rebecca Sobel Levinson. Catchy title, isn’t it?

In reality, you don’t get one type of aberration at an off-axis point. You get a combination of several. Sometimes the lens designer uses one aberration to counteract another. Sometimes there will be two or three small aberrations instead of one large aberration, etc. Plus, when a lens has a decentered, tilted, or misspaced element, things get really interesting. The end result is that off-axis points on a real lens usually don’t look like any of the above patterns, especially when you look at them in color and can also see chromatic aberrations.

We just take the brute force approach, checking half-a-dozen known good copies of each lens to see what the off-axis patterns should look like, then compare that to the misadjusted lenses. While our database is far from complete, I find it fascinating to see how different lenses ‘interpret’ a point of light off-axis. It’s rather pretty, actually, so I thought I’d share a few images.

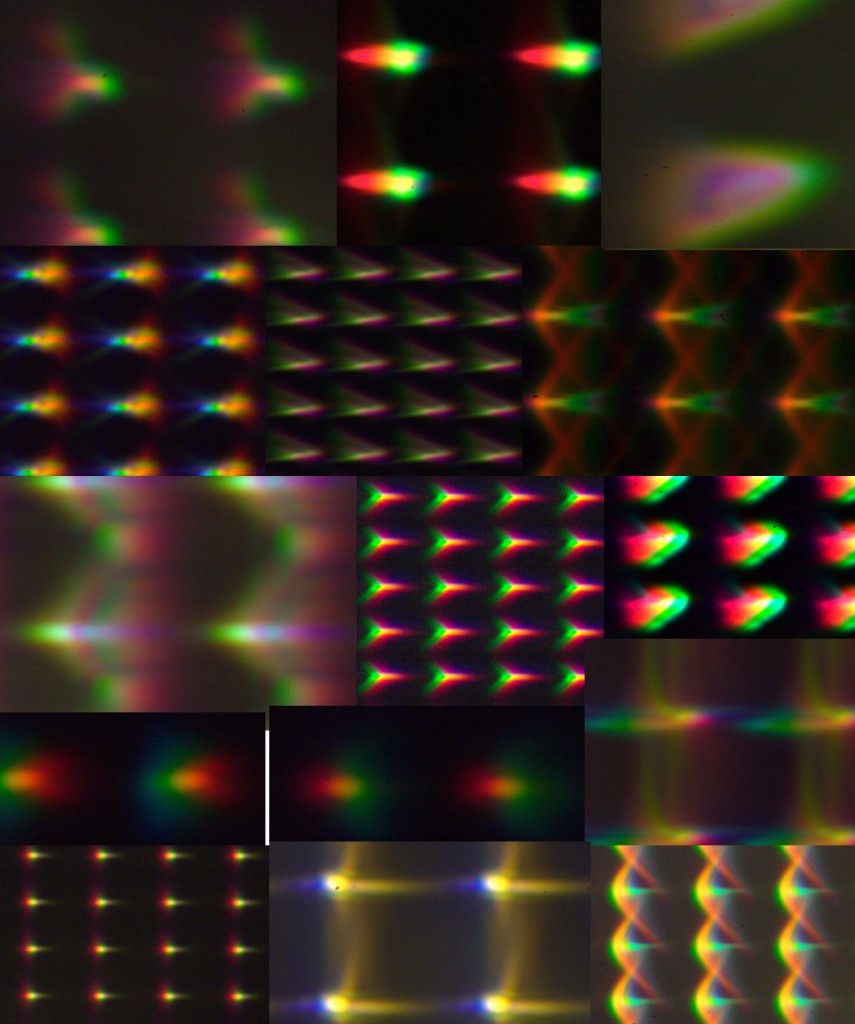

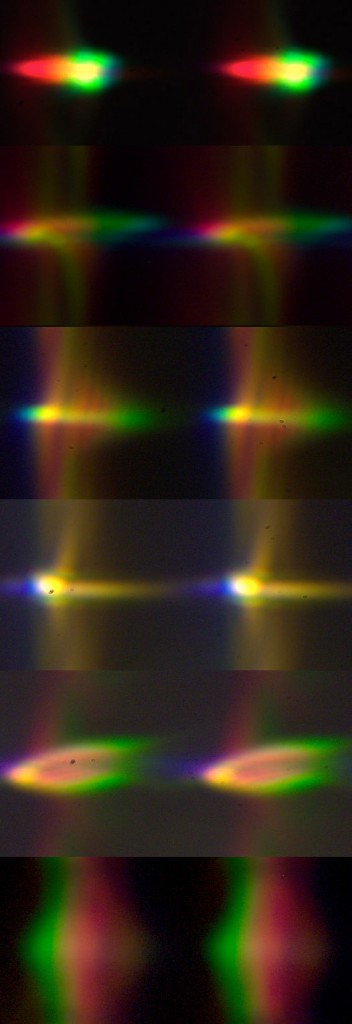

- What points near the edge of the frame look like for various lenses. When there are fewer, larger points the lens is wider angle (16 to 24mm in this picture), while smaller, closer points are longer lenses (70mm to 100mm).

The ‘points’ above are along the lateral edge of the field, from good-quality, well-adjusted lenses. Every one of those lenses resolves a nice round point in the center just like the ‘adjusted’ lens in the image above. This is what the ‘point’ looks like along the lateral edge. (These were all focused for the edge, so field curvature isn’t playing a part.)

Before you run screaming into the hills, remember these are magnifications of five micron points of light. Plus, the way OLAF is made overly enlarges the points of wide angle lenses, so those seem a lot worse. Also remember the pixels on your camera are likely larger than five microns, so a lot of the smearing you see at this magnification isn’t particularly meaningful.

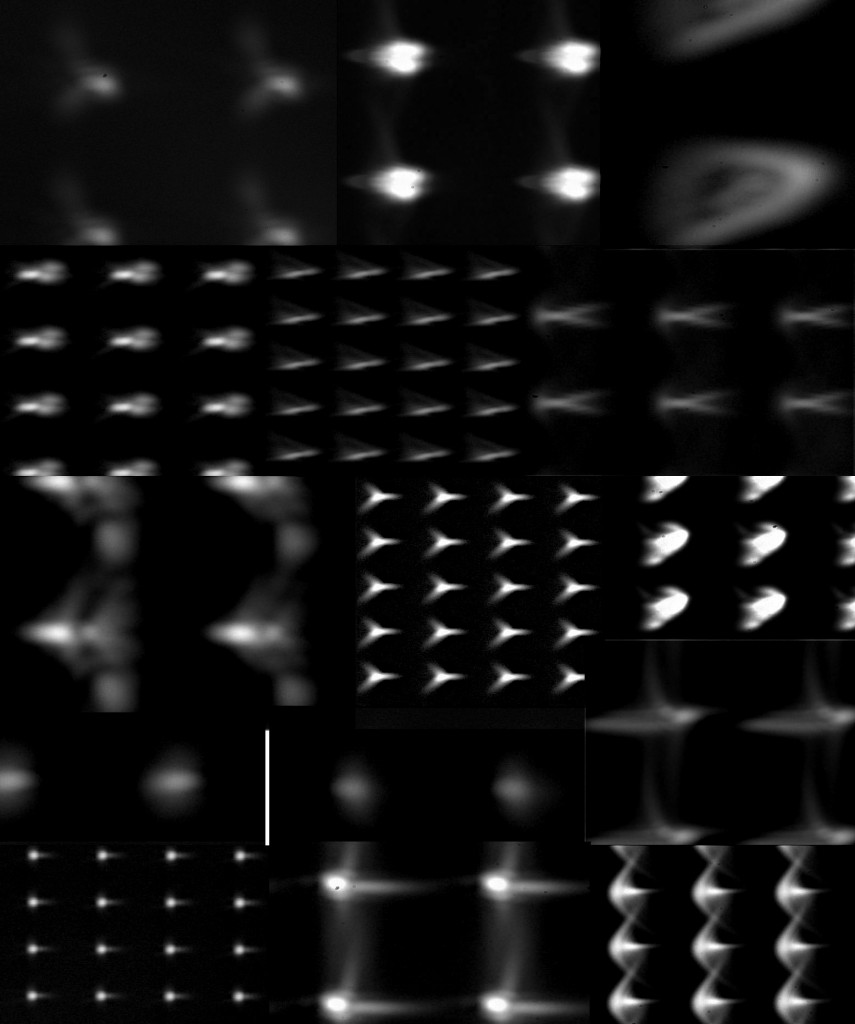

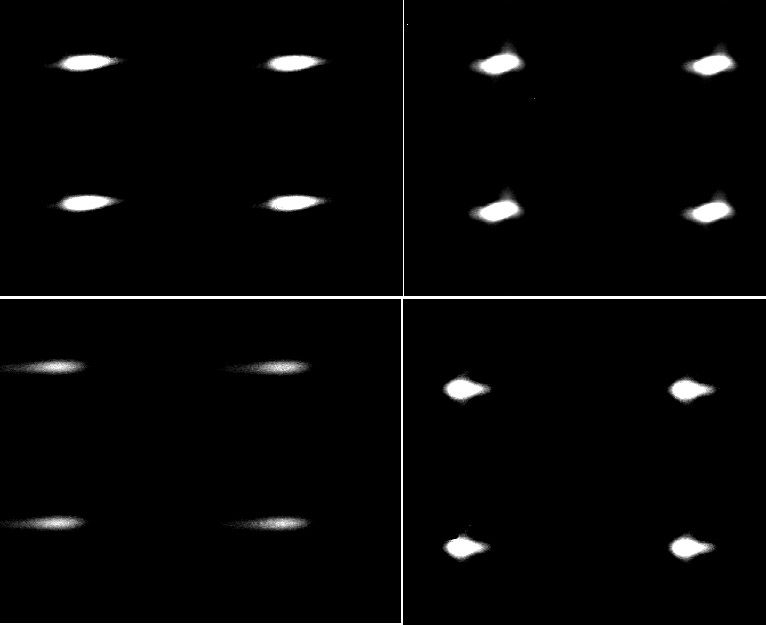

If I take that image above, convert it to black and white, and reduce the dynamic range about 25% in Photoshop, it looks more manageable. In fact, if you scroll back up, it looks a bit like some of the textbook pictures of various aberrations. Especially when you consider that most lenses have more than one aberration affecting off-axis points.

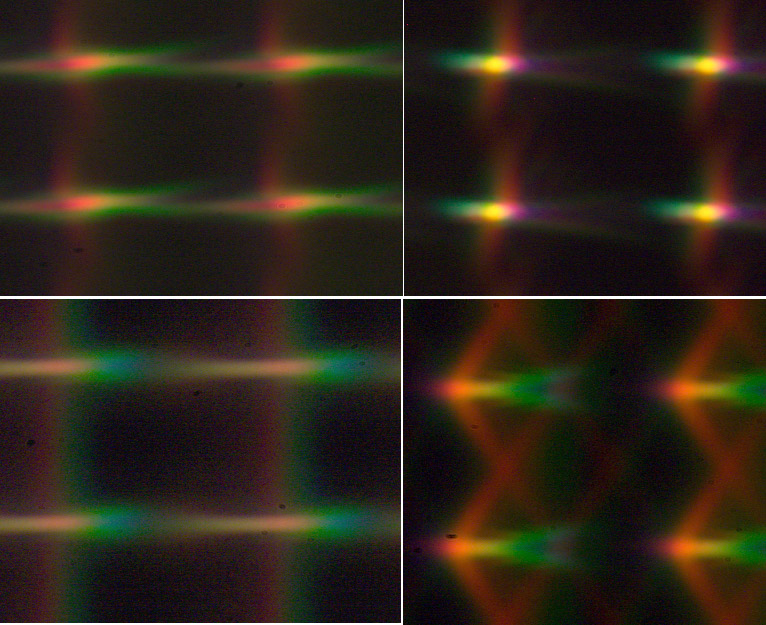

Anyway, the picture above showed a lot of apples-to-oranges comparisons – those were very different lenses. Looking at the outer pixels for some similar lenses gives an interesting apples-to-apples comparison. Below are right side pixels from half-a-dozen 35mm focal length lenses.

- Top to bottom: Sigma 35mm f/1.4; Canon 35mm f/1.4; Zeiss 35mm f/1.4; Canon 35mm f/2 IS; Samyang 35mm f/1.4; Canon 35mm f/2 (old version)

Here are lateral edge points from four different 50-something mm lenses.

- Clockwise from top left: Nikon 58mm f/1.4; Zeiss Otus 55mm f/1.4; Canon 50mm f/1.2 L; Zeiss 50mm f/1.4. All were shot at widest aperture, so the Canon 50mm f/1.2 is at a bit of a disadvantage.

I don’t think this is important data for evaluating lenses, or anything. But it is kind of interesting to see how a lens renders a point of light off-axis. I tend to roll my eyes a bit when I hear someone say, “This lens is just as sharp in the edges as it is in the center,” because no lens is. Ever. This explains why I prefer to shoot my subject in the center of my image and then crop for composition. I can tell myself those off-axis aberrations don’t really affect the image much — but I still know the round points are all in the center. Sometimes being a Geek has disadvantages.

On the other hand, looking at this you’d think no lens could ever resolve anything recognizable at the edge of the image. So just for fun, I took the 50mm images above, put them in Photoshop, converted to luminance channel, and knocked off the top and bottom 30% of the luminance with the levels command in about 14 seconds. Guess what the images above look like now? Yeah, they’re pretty much points. This is the kind of simple thing that any modern camera could do (or already does) in firmware, and that we can easily do in post-processing.

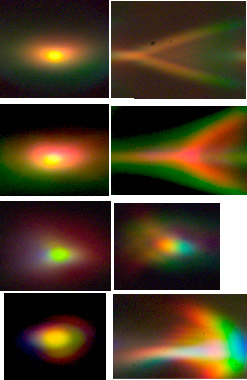
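For the Geeks who would rather script it than open Photoshop, here is a rough sketch of that luminance-and-levels step in Python with NumPy. It is not what Photoshop does internally: the Rec. 709 luminance weights, the `clip_levels` name, and the synthetic "smeared point" are all assumptions made for illustration, and I have read "top and bottom 30%" as a black point at 0.30 and a white point at 0.70 of full scale.

```python
import numpy as np


def clip_levels(rgb, low=0.30, high=0.70):
    """Approximate a Levels adjustment on the luminance of an RGB image.

    rgb: float array of shape (H, W, 3) with values in [0, 1].
    low/high: black and white points as fractions of full scale (assumed).
    """
    # Rec. 709 luma weights; Photoshop's own conversion may differ.
    luminance = rgb @ np.array([0.2126, 0.7152, 0.0722])
    # Values below `low` go to black, values above `high` go to white,
    # and the range in between is stretched to fill 0..1.
    return np.clip((luminance - low) / (high - low), 0.0, 1.0)


# Synthetic stand-in for an off-axis point: a bright core plus a dim smear.
y, x = np.mgrid[-20:21, -20:21]
core = np.exp(-(x**2 + y**2) / (2 * 2.0**2))
smear = 0.25 * np.exp(-((x - 6) ** 2 / (2 * 8.0**2) + y**2 / (2 * 3.0**2)))
spot = np.clip(core + smear, 0.0, 1.0)
rgb = np.stack([spot, spot, spot], axis=-1)

cleaned = clip_levels(rgb)
print("lit pixels before:", int((spot > 0.05).sum()))
print("lit pixels after: ", int((cleaned > 0.05).sum()))
```

The dim smear never reaches the black point, so it drops out entirely and only the bright core survives, which is the same effect as in the processed images.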

Does this have any point? Maybe a bit. For example, if you look at what a point looks like near the center of the image compared to one near the edge, you may understand why I’m such an advocate of using masks when I sharpen or otherwise post-process an image. I usually want more effect out near the edges and less near the center. Below are images for a central point and a lateral edge point for four lenses, all of which are optically good copies of what are considered excellent lenses. It doesn’t seem logical I’d want to apply the exact same manipulation to each.

This also demonstrates why in-camera image processing is becoming so popular with manufacturers. It’s pretty simple, with a lens database in place, to process the images so that they look better than they would without specific processing.

- The central point (left side) and one from the lateral edge (right side) for four different lenses.
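The masking idea above can be sketched in a few lines of Python with NumPy. This is only an illustration, not my actual workflow or anything a camera maker has published: the 3x3 box blur, the linear center-to-corner ramp, and the function names (`box_blur`, `radial_mask`, `masked_sharpen`) are all assumptions chosen to keep the example short.

```python
import numpy as np


def box_blur(img):
    """3x3 box blur with edge padding (NumPy only)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    total = np.zeros_like(img, dtype=float)
    for dy in range(3):
        for dx in range(3):
            total += padded[dy:dy + h, dx:dx + w]
    return total / 9.0


def radial_mask(shape, center_weight=0.2, edge_weight=1.0):
    """Weight that rises linearly from the image center to the corners."""
    h, w = shape
    y, x = np.mgrid[0:h, 0:w]
    r = np.hypot(y - (h - 1) / 2.0, x - (w - 1) / 2.0)
    r = r / r.max()
    return center_weight + (edge_weight - center_weight) * r


def masked_sharpen(img, amount=1.0):
    """Unsharp mask whose strength follows the radial mask."""
    detail = img - box_blur(img)
    return np.clip(img + amount * radial_mask(img.shape) * detail, 0.0, 1.0)


# Random mid-gray texture as a stand-in for a real grayscale photograph.
rng = np.random.default_rng(0)
img = 0.25 + 0.5 * rng.random((101, 101))
out = masked_sharpen(img)

change = np.abs(out - img)
print("mean change near center:", change[45:56, 45:56].mean())
print("mean change near corner:", change[:11, :11].mean())
```

The same sharpening is applied everywhere, but the mask scales it down to 20% at the center and lets it run at full strength in the corners, where the points need the most help.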

We Do Really Use OLAF

Ok, the pictures and stuff are fun, but that’s not why I bought this machine (although the pictures are a nice side benefit). I bought it to adjust decentered lenses. For adjustments, all of the chaos of different patterns isn’t particularly important.

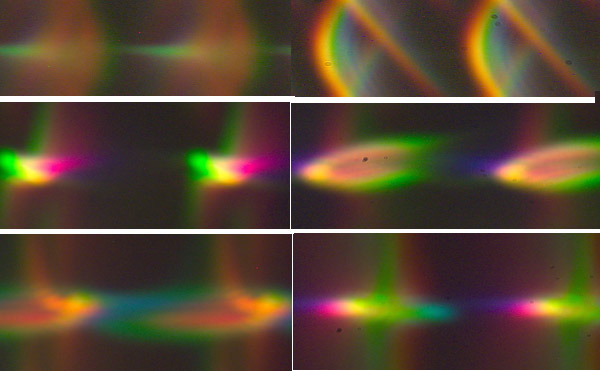

In addition to making the center point nearly a point, we get to look at the two sides and compare them. If the sides don’t look about the same, the lens isn’t quite right, even when the center has been adjusted to a nice point. Below are images comparing the left and right points from three different lenses. It’s pretty obvious that the sides aren’t alike and therefore the lens is optically out of sorts.

For the middle and bottom images, the points on one side are good and we simply need to get the opposite side to match. The lens in the upper image is really in a bad way and neither side looks anything like it should. This is when we pull up a saved file of what the lens should look like so we know what we’re trying to get to.

Of course, once we’ve got the two sides improved, we have to rotate the lens to several positions and make further adjustments until it’s the same in all quadrants.
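The side-to-side comparison described above could in principle be boiled down to a number. The sketch below is a hypothetical illustration in Python with NumPy, not how OLAF's software works: it assumes that in a well-centered lens the right-side point is the mirror image of the left-side point, and it scores the match with a normalized correlation. The `side_match` and `comet` names, and the synthetic comet-shaped blobs, are made up for the example.

```python
import numpy as np


def side_match(left, right):
    """Normalized correlation between the left patch and the mirrored right patch.

    Close to 1.0 when the two sides are mirror images of each other,
    lower as they diverge.
    """
    a = left.astype(float) - left.mean()
    b = np.fliplr(right).astype(float)
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0


def comet(shape=(41, 41), tail=6.0, direction=1):
    """Synthetic coma-like blob with a tail pointing in +x (1) or -x (-1)."""
    y, x = np.mgrid[0:shape[0], 0:shape[1]].astype(float)
    y -= shape[0] // 2
    x -= shape[1] // 2
    # Longer falloff on the tail side, short falloff on the other side.
    sx = np.where(direction * x > 0, tail, 2.0)
    return np.exp(-(x**2) / (2 * sx**2) - (y**2) / (2 * 2.0**2))


left = comet(direction=-1)        # tail points outward on the left side
good_right = comet(direction=1)   # mirror image: tail points outward on the right
bad_right = comet(direction=-1)   # tail points inward: the sides do not match

print("matched sides:   ", round(side_match(left, good_right), 3))
print("mismatched sides:", round(side_match(left, bad_right), 3))
```

A score like this would only flag that the sides differ. It says nothing about which element to move, and it is no help when both sides are equally bad, which is why we still need the saved reference images.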

It’s simple, although it’s often not easy. Each lens has different adjustable elements and each adjustment has different effects. Some lenses still take us hours to adjust properly. But being able to do those adjustments while we watch has reduced our optical adjustment times by 50%, which is a really big deal. Even more importantly, we’ve been able to correct a dozen lenses that neither we nor the factory service center had been able to adjust at all before.

It’s early in the learning process, so I can’t say with any certainty that OLAF is the universal answer to optical adjustments. But it’s certainly going to be a big help. More than that, though, it’s helping us learn more about lenses, so it’s already worth the money.

Roger Cicala

Lensrentals.com

April, 2014

