Pay Attention to the Man Behind the Curtain

Metro Goldwyn Mayer

A Reasonably Non-Geeky Guide to Lens Tests and Reviews

Hardly a day goes by that on some forum somewhere a nasty argument is going on about lens testing and reviewing. (Going forward, I’m going to use the abbreviation R/Ts for Reviewers and Testers.) As with all things on the internet, these discussions tend to gravitate quickly to absolute black-and-white with no gray. This one’s great; this one sucks.

I made a bit of fun about this in a recent post. The truth is that most sites have worthwhile information for you, but none gives you all the information you might want. I’ve spent most of my life in various types of scientific research, and I’m accustomed to evaluating information from an ‘is this valid’ standpoint, not just ‘what does it conclude’. And because I get to see behind the curtain of the photography industry, I have a different perspective than most of you. So I thought I’d share how I look at tests and reviews.

For the Paranoid Among You

I see this speculated about online sometimes, so let’s talk about the Pink Elephant in the room. These things are obvious facts of life. They aren’t massively influential like some people think they are, but they are real to some degree.

R/Ts like to be first. First reviews get the most hits. Plus it’s cool to be the first one with a new toy. I’m guilty of this myself sometimes.

Manufacturers reward R/Ts. It may be getting a prerelease copy to write that first review. Maybe an invitation to be a paid speaker at a convention, some free loaner gear, or a photography trip or two. Rewards may have some influence, but there are no huge checks that make R/Ts say whatever the company wants.

There are occasional threats, too. Outright ‘cease and desists’ occur, although rarely (yes, I’ve gotten a couple). More often there is a discussion about why the manufacturer disagrees with something an R/T said. Sometimes the manufacturer is right, too. They have been with me a couple of times.

Most R/Ts don’t care much about rewards or threats. The biggest R/T sites basically get the best treatment from all manufacturers. Other R/Ts buy everything retail and won’t accept a loaner or any other reward to make sure you know they aren’t being influenced. You can usually figure this out pretty quickly if you read more than the graphs from their reviews.

R/Ts want to be credible. If they aren’t credible you won’t go to their site (with a couple of exceptions).

R/Ts do what they do for a reason. They are getting something out of it whether it’s direct income from advertising, publicizing their expertise, or even just getting a tax break for wallowing in their hobby.

The vast majority of R/Ts try hard to be impartial, but we all have our influences and preconceptions. If their income depends on ‘click-through’ purchases there may be a subconscious tendency to praise things a bit much. If they make their revenue from Google ads, generating controversy and lots of visits may be important.

Every one of us makes mistakes. I have, lots of times. I’ve seen others do it lots of times. I, and a lot of R/Ts, tell you when we find an error. Some others quietly change results when they figure it out. Some others don’t ever realize they’ve made a mistake, or refuse to admit it.

Reviewing and Testing are way more time-consuming than you realize. Carefully testing or reviewing a lens takes dedicated, expensive equipment and a week or more of full-time effort. When you say ‘just test more copies’ or ‘why does it take so long’ you’re basically asking someone to cut corners and be sloppy.

The Persistence of Memory, Salvador Dalí

So What Do I Look At?

Everything I can. But I ‘consider the source’ for every bit of that information, because there are lots of good, but different, perspectives out there. There is a lot of disinformation, too. But in general, there are several broad categories of R/Ts that I look at, each with a slightly different perspective.

Crowdsourced Information

In some ways, crowdsourced information is the best information you can get because it summarizes a lot of people’s experience. The trouble is it takes a while to appear. Early adopters don’t have very much of this resource to go on.

It’s also important to know the sources of crowdsourced information and take it all with a grain of salt. There is a nice-sized cottage industry that posts positive and negative product reviews online for a fee at places like Yelp or Amazon. Positive and negative forum posts can be purchased, too. I don’t know if it happens in photography forums, specifically, but I don’t rule out the possibility.

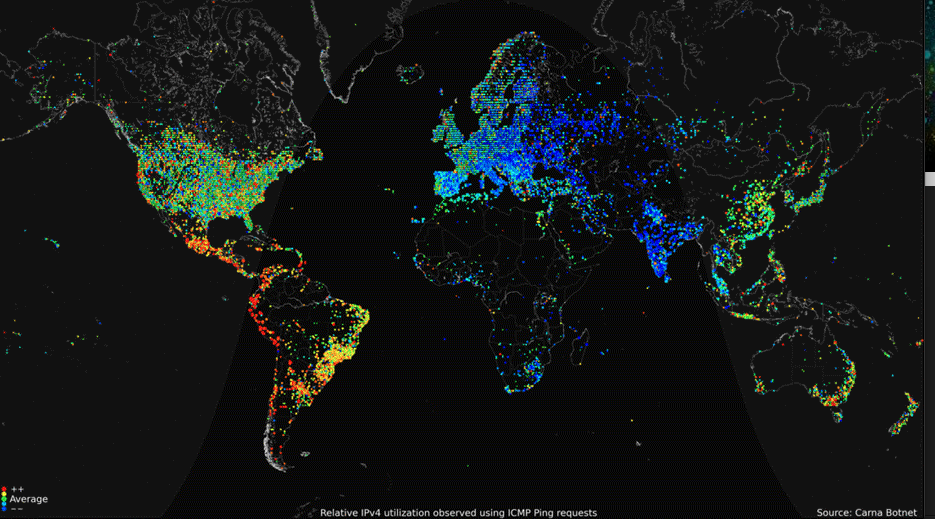

Internet activity at some given moment in time. I wonder who that guy is in the middle of the Sahara. Source: Carna Botnet via Science, 2013.

There are three types of online information I consider.

One Guy Said Online

This is really good information if you know the guy. If you don’t know them, at least by reputation, it’s probably not worth the electrons it’s printed on.

Lots of People Said Online

Once dozens or even hundreds of photographers have rated and commented on a given lens the information should be better than ‘one guy’. There’s going to be some bias, of course, reviewer expertise varies, and occasional mass hysteria does occur. But crowdsourced opinion does give you a good idea of how satisfied people are with their purchase.

The trouble is it’s fairly worthless right around release time. Fanboys and trolls dominate these discussions, and people are very willing to comment about what they don’t know about. One thing I do recommend: most crowdsourced review sites let you sort the comments by date. If the lens has been out a while, start with the newest reviews first. Far too many ‘reviews’ are written well before the lens was actually released for sale or during the hysteria around release time.

Lots of People Showed You Online

This is the best; the gold standard. You can look at actual photographs taken with the equipment you’re interested in. There are still shortcomings, of course. Online jpgs tend to make lenses with a high contrast look better than those with high resolution, for example. Postprocessing and photographer skill levels vary. But looking at a lot of images can give you the best idea about what a lens can do and whether it’s for you. More importantly, you actually know the person had the lens they’re commenting about.

Of course, us early adopter types don’t have a lot of online images to browse through. And, personally, I tend to get bored after a fairly short time and don’t look at all that many images. I also find myself creating self-fulfilling evaluations. If I want the lens, then bad shots are obviously by bad photographers and good shots reflect how good the lens is. Or vice-versa.

A Photographer Reviewed Online

I consider this much different than ‘someone showed you images online’. Reviewers do their thing professionally. They have a site where you can find out about them: what they shoot with, how they make their living, what else they do. This is important to know because all reviewers will have some preferences and each will have a slightly different shooting style. Most take identical images in every review so you can get some direct comparison between this lens and that one.

A photographer’s review gives you the most information about what it’s like to use the lens in question. Here is where you find out things like how a lens handles, how quickly it autofocuses, how it feels in the hand, and how it compares to similar lenses the reviewer has used in the past. You’re also more likely to find out about color casts, bokeh, light flare, and behavior on specific cameras.

I would never buy a lens without either trying it myself or reading a couple of reputable reviews. OK, I would because I’m an incurable early adopter, often to my detriment. But I shouldn’t.

Critical reading is very important here. Far too often we get a huge forum debate about what “Joe The Reviewer” said, but nobody in the debate knows anything about Joe the Reviewer; they just read the summary of his review. Or more often, they just read someone else’s post about the summary of his review. What kind of photographer is Joe? I don’t mean good or bad. Does Joe work mostly in-studio, or do landscapes, or street photography, or just review lenses? If you shoot a fast-aperture, wide-angle lens in clubs at night, Joe’s review using it mostly stopped down for landscapes may not be pertinent.

I had an early experience with this when the late Michael Reichmann, whom I had the utmost respect for, wrote a very positive review of the Canon 70-300mm DO lens. I bought it on the basis of that review (really just the summary of that review) and HATED it. Before I hopped on my local forum and started saying Michael must have been bribed by the camera company, or I must have a bad copy, I actually looked at the review more carefully. Michael had used the lens for images of large shapes with strong contrast and blacked-out shadows, never shooting into the sun. He instinctively knew the limitations of the lens and made great pictures with it. I was using the lens in an entirely different way, one that brought out all of its flaws.

Editor’s Note

Upon reading this portion of Roger’s piece, I wanted to agree and interject my own opinions on the topic. As someone who has written hundreds of articles for dozens of photography websites over the years, and as an editor, I’ve always ensured that writers understood two things. First, the FTC requires that all online reviews that have been paid for by a company be accompanied by ‘clear and conspicuous disclosures’. This means reviewers are required to state if they have been paid in any way for the product review. This can be as easy as stating “I received this new camera from ____ to test”, but it needs to be said somewhere to avoid a potential punishment of up to $16,000. Secondly, I discuss credibility. Often, websites and other sources share credibility among all the writers and authors, because fewer people read who the article is from than you might think. So it only takes one person to potentially ruin that credibility. While I can’t enforce their credibility, I do make sure that all gear for review comes from a third party (often, companies I’ve worked for have dealings with B&H Photo or other large camera stores, where we can get 30-day loaners for gear reviews without sacrificing impartiality by going through the manufacturer directly), and make sure they understand the stakes at hand.

That said, I’ve had companies in the past (I won’t name names) who have tried to directly bribe me for reviews by saying “We’ll send you this product to review, and if you like it…review it and keep it. If you don’t like it, just send it back”. This is absolutely bribery, and it absolutely happens. It’s important to know your reviewer, and to trust not only their judgment, but their integrity as well. That said, the best way to develop an opinion on a piece of gear is to try it yourself.

Zach Sutton

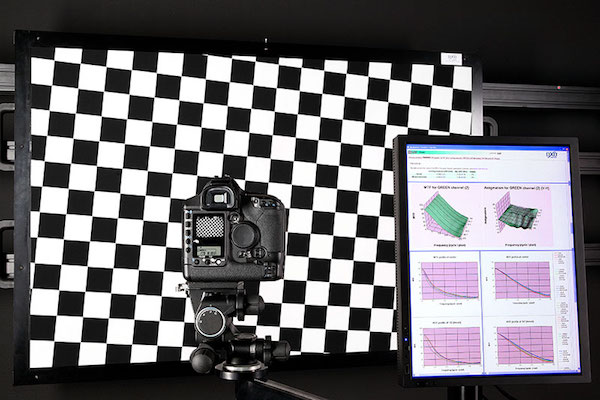

Imatest Tests Online

There are many of these sites. All have very good intentions, and most give very good information. BUT, and this is a HUGE BUT, all Imatest labs differ. The Imatest program always gives scientific-looking numbers and very pretty graphs. But few Imatest R/Ts tell you the very important details about how they tested. How far away was the chart? What was the type, size, and quality of the chart? (A small inkjet-printed ISO 12233 chart will give very different numbers with the same lens than a large, linotype-printed SFR Plus chart or backlit film transparency chart.)

Lensrentals.com, 2013

Even different lighting can give different results. Then we have to consider if the images were raw or JPG files, and where the tester considered the corner and sides to be. For example, the ‘corner’ measured on an ISO 12233 chart in Imatest is in a very different place than the corner measured using an SFR Plus chart.

You also don’t even know if the corner number in that pretty graph was an average of 8 measurements (horizontal and vertical in each corner), or just one. Did that center measurement average horizontal and vertical, or just give the higher of the two? Or was it an average of 4 (all 4 sides of the center box)? Oh, and speaking of that, the difference between the vertical and horizontal lines is NOT a direct measurement of astigmatism, and anyone who claims it is, well, don’t put a lot of emphasis on their review.
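To make the averaging question concrete, here is a minimal sketch in Python. The readings are made-up numbers in arbitrary units, not data from any real lens or lab, and the function names are purely illustrative. It just shows how the same eight corner measurements can honestly be reported as three quite different ‘corner’ numbers.

```python
from statistics import mean

# Hypothetical sharpness readings (arbitrary units) for ONE lens on ONE chart.
# Each corner has a horizontal (h) and a vertical (v) edge measurement.
corner_readings = {
    "top_left": {"h": 610, "v": 540},
    "top_right": {"h": 650, "v": 580},
    "bottom_left": {"h": 590, "v": 520},
    "bottom_right": {"h": 630, "v": 560},
}


def all_values(readings):
    """Flatten the per-corner h/v readings into one list of eight numbers."""
    return [value for corner in readings.values() for value in corner.values()]


def average_of_eight(readings):
    """Report the mean of all eight corner measurements."""
    return mean(all_values(readings))


def best_single_reading(readings):
    """Report only the highest of the eight measurements."""
    return max(all_values(readings))


def worst_single_reading(readings):
    """Report only the lowest of the eight measurements."""
    return min(all_values(readings))


print("average of 8:", average_of_eight(corner_readings))
print("best single: ", best_single_reading(corner_readings))
print("worst single:", worst_single_reading(corner_readings))
```

With these invented readings the ‘corner’ figure comes out anywhere from 520 to 650 depending on which convention the tester picked, which is why a graph without that detail is hard to compare with anyone else’s.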

There are at least a dozen other variables in Imatest testing. Most labs are consistent within the lab, except for variations in the camera used (which also makes a huge difference over time). So comparing Lens X to Lens Y using Joe’s Imatest Lab is a valid comparison. Comparing Joe’s lab numbers for Lens Y to Bill’s lab numbers for Lens Y is not. But if both Joe and Bill say Lens X is sharper than Lens Y in the center but softer in the corners, then it probably is. And if Steve says so too, it almost certainly is. If Dave says it’s not, well maybe his copy wasn’t as good, or maybe his testing methods are different.

So, as someone who tested literally thousands of lenses for several years using Imatest, I recommend you take all of these sites in as a gestalt and do a kind of mental meta-analysis of them. There’s good general data there, but splitting hairs and over-analyzing it will lead you to a lot of wrong conclusions.
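If you like seeing that kind of mental meta-analysis written down, here is a rough sketch. The lab names echo the Joe, Bill, Steve, and Dave example above, but every number is invented and each lab is assumed to use its own scale. The idea is to compare lenses only within a lab and then count how many labs agree, never to compare raw numbers across labs.

```python
# Hypothetical center-sharpness numbers. Each lab's scale is its own,
# so values are only comparable WITHIN a single lab's row.
lab_results = {
    "Joe": {"Lens X": 920, "Lens Y": 870},
    "Bill": {"Lens X": 47, "Lens Y": 43},
    "Steve": {"Lens X": 3100, "Lens Y": 2950},
    "Dave": {"Lens X": 61, "Lens Y": 64},
}


def tally_votes(results, lens_a, lens_b):
    """Count how many labs rank lens_a above lens_b, below it, or equal."""
    votes = {lens_a: 0, lens_b: 0, "tie": 0}
    for scores in results.values():
        if scores[lens_a] > scores[lens_b]:
            votes[lens_a] += 1
        elif scores[lens_a] < scores[lens_b]:
            votes[lens_b] += 1
        else:
            votes["tie"] += 1
    return votes


votes = tally_votes(lab_results, "Lens X", "Lens Y")
print(votes)
```

Here three labs out of four put Lens X ahead in the center, so it probably is. The tally deliberately throws away the size of each difference, which is about the level of precision this kind of data supports.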

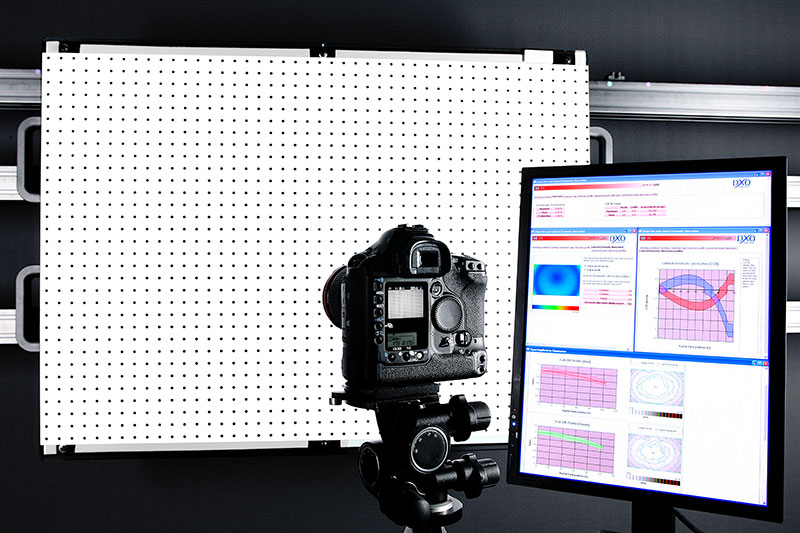

DxO Analytics Tests

Here’s something you may not know. DxOMark is not the only place to find DxO Analytics data, so there is a good confirmation source for DxO’s data that I use constantly. SLRGear/Imaging Resource uses DxO Analytics targets and software for their tests and presents them in a nice, easy-to-use format. They are also very transparent about the tests they use and the way they perform them, which gives me a high comfort level with their results.

In general, DxO Analytics is a computerized target analysis, like Imatest. There are a variety of targets for different tests, including dot targets and slant edge targets, among others.

This gives some different information than most Imatest reviews and it is presented in DxO’s proprietary ‘blur units’ rather than more standardized MTF or MTF 50 results. We all know DxOMark likes to compress everything down to a single, and in my opinion less than useless, number. (Well it has some use since we immediately know that someone who says “DxO rated it as 83.2, the best lens ever!!!!” is a fanboy who doesn’t understand testing at all. Resist the temptation to try to reason with unreasonable people.)

But if you dig deeper, there’s lots of good information: how the lens performs as you stop down, a nice look at the sharpness over the entire field, and other goodies. It’s often more in-depth information than what you get from most Imatest sites.

I use DxO to expand on data from Imatest testing sites. It may give a more complete picture, but not a more accurate picture. There are lots of Imatest sites and that dampens the ‘copy variation’ problem down a lot. There are only two DxO sites so when they test a bad copy, there’s not 6 others to compare it to.

As an aside, nobody, no matter how much testing equipment they have, can take a single copy of any lens and decide it’s a good or bad copy. They can rule out horrid decentering or tilt, or truly awful performance. But that’s about it. They may have the best copy out of 20, or the worst. Trust me on this. There’s a reason I will no longer publish ANY single lens performance data; because I’ve been burnt by publishing data when I only had a single copy and thought it was good.

Also please don’t compare my series of 10 and say they should do that, too. I publish very limited data on a larger number of lenses, but I don’t go into the depth and detail that an Imatest or DxO site does. Not even close. They probably spend as much time testing their one copy thoroughly as I do just doing MTF curves on 10 copies. Like I said at the beginning, we’re each showing you different things.
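The single-copy problem can be sketched with a toy simulation. Everything here is an assumption made for illustration: copy-to-copy sharpness is modeled as a normal distribution with an invented mean and spread, and the function and parameter names are hypothetical. It is not how any lab, including ours, actually analyzes data.

```python
import random
from statistics import mean, pstdev


def simulate_copies(n_copies, true_mean=100.0, copy_sd=5.0, seed=0):
    """Draw sharpness scores for n_copies of one lens model (assumed normal)."""
    rng = random.Random(seed)
    return [rng.gauss(true_mean, copy_sd) for _ in range(n_copies)]


def rank_of_first_copy(copies):
    """Rank of the first copy among all copies (1 = sharpest)."""
    return sorted(copies, reverse=True).index(copies[0]) + 1


def spread_of_estimates(copies_per_test, trials=2000, seed=1):
    """How much a reported score varies from test to test when each
    test averages copies_per_test randomly drawn copies."""
    rng = random.Random(seed)
    estimates = [
        mean([rng.gauss(100.0, 5.0) for _ in range(copies_per_test)])
        for _ in range(trials)
    ]
    return pstdev(estimates)


copies = simulate_copies(20)
print("the one copy a tester got ranks", rank_of_first_copy(copies), "of 20")
print("spread with 1 copy per test:  ", round(spread_of_estimates(1), 2))
print("spread with 10 copies per test:", round(spread_of_estimates(10), 2))
```

Under these assumptions, the tester holding one copy has no way of knowing where it sits among the 20, and averaging ten copies gives a noticeably steadier number than any single copy can.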

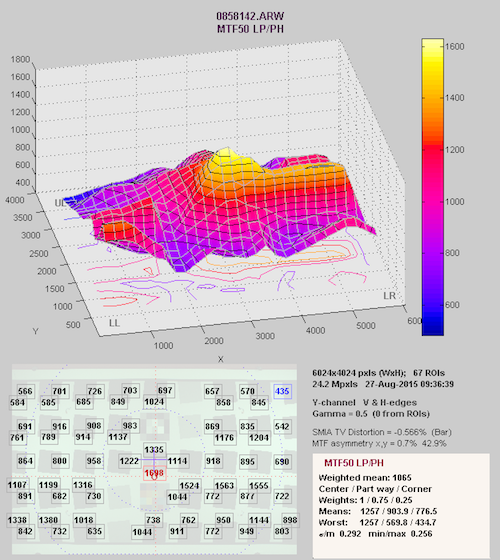

Lensrentals Optical Bench Tests

First, we don’t pretend to be, nor intend to become, a full R/T site. We complement them, not replace them. We’re doing somewhat experimental and geeky stuff for our own purposes and letting you guys look over our shoulder. The feedback we get helps us refine our methodology. But our results, while not a true lens test in the reviewer sense, do give you some very different data that I think is worthwhile. How is it different?

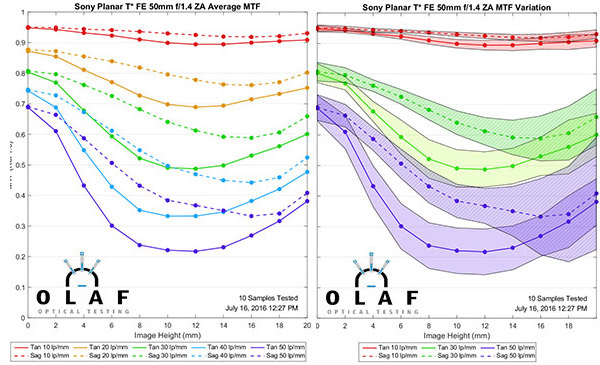

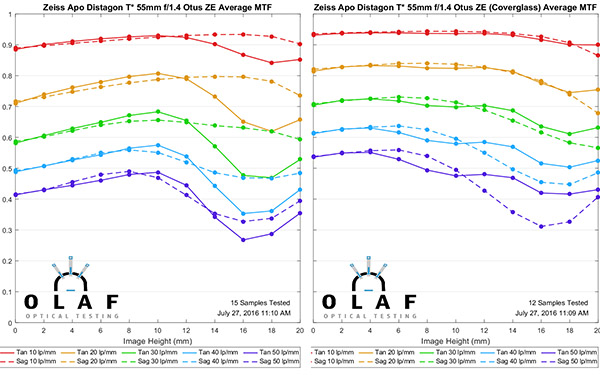

OlafOpticalTesting, 2016

- There is no camera. This is good because it eliminates all of the variables of testing different lenses on different cameras. It’s bad, because many of you want to know how the lens is going to behave on your camera.

- It’s done at infinity. For most lenses this probably makes no difference. But for Macro lenses, designed to work close up, I’d put less emphasis on our infinity testing. For wide-angle lenses, I’d put more emphasis on our optical bench testing, since target analysis testing will be done at a very close focusing distance, sometimes 4 or 6 feet.

- Because it’s experimental and evolving, we’ve refined techniques so some older results aren’t correct, sort of like older Imatest results done on a last generation camera aren’t the same as tests done on a new camera. For example, I just realized (after a subtle hint from Zeiss) that our 55mm Otus results were done before we started rigorously testing every lens with and without cover glass. It turns out the Otus is a better performer with glass in the path, as you can see below, so the data on our website has been incorrect for a year. We screw up sometimes. That’s what happens when you do new stuff.

- We only provide very limited testing. Basically at this point we give you wide-open MTF and variation data. And if you don’t like MTF graphs, well, other than ‘higher is better’ you don’t get much out of our data. If you speak MTF, then you get more information than you would out of Imatest or DxO graphs.

OlafOpticalTesting, 2016

So when is our data important?

- It’s really the only data for copy-to-copy variation out there.

- It’s multiple copy data, so it tends to smooth out the sample variation issue. Thought of another way, while we present only one small type of test, we do it on as many copies as you’re likely to see from all the other R/T sites combined.

So I look at our data to see about sample variation, and to look at the MTF curves. That’s about it unless you’re into the more geeky part of testing. Now, because I’m into engineering quality I’d look at our teardowns, too, because there’s nowhere else to find that kind of information. But that’s not really testing. That’s just cool geeky stuff.

TL;DR Version

There’s a lot of information put out there. Most of it is reasonably accurate. Not one site or place is the ‘correct’ one. No single review is the ‘best’ one. It’s like real life. Each of them gives you some information that you can choose to use, or not use, when you make your decisions.

If you’re an intense early adopter, well, just go ahead and get the lens. There’s no sense making yourself crazy trying to justify why you want it, and there’s no way to know, absolutely know, if it’s right for you without using it yourself doing the kind of photography you do.

If you want to make a logical, rational decision about which lens you should get, and it’s not ‘right-around-release-time-insanity’ o’clock, then do some intelligent reading. Screen several Imatest and DxO Analytics sites and get a feel for what they think of the lens. Look at Lensrentals data and see about variation and if, perhaps, there may be some difference between close and infinity performance. (For example, if we find the lens is better than most, but all the Imatest sites say it isn’t, there’s a good chance the lens is better at infinity than it is close up.)

Then read some real-people reviews, looking at more recent ones first and discounting, as much as you can, the ones that came out right around release time when fanboys, trolls, and maybe worse were clogging up bandwidth. Finally, read a couple of professional photography reviews and get some more input on how the lens handles and behaves in various conditions, and look at some photo sites for images taken with the lens. Remember that online jpgs, unless they let you download 100% images, aren’t going to tell you a lot about how sharp the lens is (although it may expose some flaws). But they should give you an idea about color, flare, and bokeh at the very least.

Or, be a fanboy, find one site that says exactly what you want to hear, and go post on your favorite forum that you have found The Truth and all other sites are incompetent liars.

Roger Cicala

Lensrentals.com

August, 2016

Author: Roger Cicala

I’m Roger and I am the founder of Lensrentals.com. Hailed as one of the optic nerds here, I enjoy shooting collimated light through 30X microscope objectives in my spare time. When I do take real pictures I like using something different: a Medium format, or Pentax K1, or a Sony RX1R.
