
Good Vibrations: Designing a Better Stabilization Test (Part I)

My name’s T.J. Donegan, and I’m the Editor-in-Chief of DigitalCameraInfo.com and CamcorderInfo.com (soon to be just Reviewed.com/Cameras). We recently wrote about designing our new image stabilization test for our Science and Testing blog. I showed it to Roger, and he asked for the “nerd version.” He was kind enough to let us geek out about the process here, where that kind of thing is encouraged.

Since the beginning of DigitalCameraInfo.com and CamcorderInfo.com, we’ve always tried to develop a testing methodology that is scientific in nature: repeatable, reliable, and free from bias. While we do plenty of real-world testing during every review, the bedrock of our analysis has always been objective testing.

One of the trickiest aspects of performance to test this way is image stabilization. Things like dynamic range, color accuracy, and sharpness are relatively simple to measure; light goes in, a picture comes out, and you analyze the result. When you start introducing humans, things get screwy. How do you replicate the shakiness of the human hand? How do you design a test that is both repeatable and reliable? How do you compare those results against those of other cameras and the claims of manufacturers?

Our VP of Science and Testing, Timur Senguen, Ph.D., shows our new image stabilization testing rig in action.

It’s a very complex problem. The Camera & Imaging Products Association (CIPA) finally tackled it last year, drafting standards for manufacturers to follow when making claims about their cameras and lenses. We’re one of the few testing sites that have taken a crack at this over the years, attempting to put stabilization systems to the test scientifically. Our last rig shook cameras in two linear dimensions (horizontally and vertically). It did what it set out to do, shake cameras, but it didn’t represent the way a human shakes. Eventually we scrapped the test, tried to learn from our experiences, and set out to design a new rig from scratch.

Shake, Shake, Shake, Senora

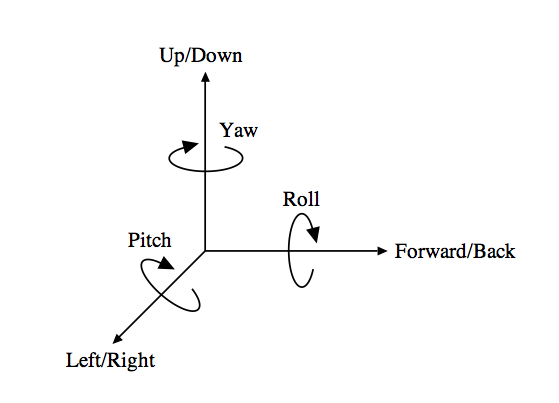

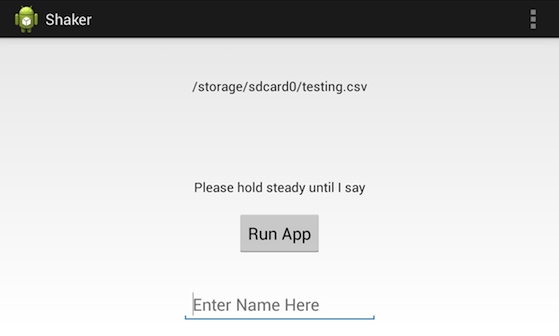

Our VP of Science and Testing, Timur Senguen, Ph.D. (we just call him our Chief Science Officer, because we’re a bunch of nerds), wrote an Android application that uses the linear accelerometers and gyroscope in an Android phone to track how much people actually shake when holding a camera. This lets us see exactly how much movement occurs in all six degrees of freedom: linear (x, y, and z) and rotational (yaw, pitch, and roll).
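To make the rotational side of that concrete, here is a minimal sketch of how gyroscope readings can be reduced to a single “how much did you shake” number per axis. It is not Timur’s app. It assumes a hypothetical input layout of `(yaw_rate, pitch_rate, roll_rate)` tuples in rad/s (the unit Android reports for its gyroscope) sampled at a constant interval `dt`; the function name `rotation_rms_deg` is illustrative, and which phone axis maps to yaw, pitch, or roll depends on how the phone is mounted.

```python
import math
from statistics import fmean

AXES = ("yaw", "pitch", "roll")


def rotation_rms_deg(rates, dt):
    """Return the RMS angular excursion per rotational axis, in degrees.

    rates: sequence of (yaw_rate, pitch_rate, roll_rate) tuples in rad/s
           (hypothetical layout; real phone axes depend on the mounting)
    dt:    sampling interval in seconds, assumed constant
    """
    if not rates:
        raise ValueError("need at least one gyroscope sample")
    result = {}
    for i, name in enumerate(AXES):
        angle = 0.0
        trace = []
        for sample in rates:
            angle += sample[i] * dt  # Euler integration: rad/s * s -> rad
            trace.append(angle)
        # Measure excursion around the average aim point, not the start point
        mean = fmean(trace)
        rms = math.sqrt(fmean((a - mean) ** 2 for a in trace))
        result[name] = math.degrees(rms)
    return result
```

Over longer recordings, gyroscope bias makes the integrated angle drift slowly, so a real analysis would likely detrend or high-pass filter the trace before taking the RMS.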

Using the program on an Android phone, we tested the shaking habits of 27 of our colleagues, using a variety of grip styles and camera weights from across the spectrum. We tested everything from the smartphone alone up to a Canon 1D X with the new 24-70mm f/2.8L attached to see how people actually perform when trying to hold a camera steady. (The 24-70mm isn’t stabilized, but it was chosen only to be representative in weight and grip style.)

You can actually play along at home if you like. Timur’s .apk is available here, and you can install it on any Android phone and run it yourself. You can hold the phone like a point-and-shoot, or you can do what we did and attach the phone to the back of a DSLR to get data on how much you shake when using a proper camera and grip (to keep the weight as close as possible, remove the camera’s battery and memory card). When you’re done, you can send us your results along with the name of your camera and lens, and we’ll use your data for future analysis. Bonus points if you jump in the line and rock your body in time. (Sorry, I had to.)

Roger’s Note: One of the reasons I’m very interested in this development is this: my inner physician is very aware that the type, frequency, and degree of resting tremor vary widely between people, especially with age. A larger database of individuals’ tremors might help identify why some people get more benefit from a given IS system than others, and hopefully someday you’ll be able to use an app like this to define your own tremor and then look at reviews to see which stabilization system best matches it. Or even adjust your system’s stabilization to match your own tremor (we all have one), the way we currently adjust microfocus. So I encourage people to download the app and upload some data.

What we found is actually quite interesting. First, people are generally exceptional at controlling linear movement (up and down, side to side, and forward and back). We are about ten times worse at controlling yaw and pitch (the way your head turns when you shake your head “no” or nod your head “yes”). We are roughly four times worse at controlling roll, the steering-wheel-type rotation, which produces the kind of tilt you can fix with any horizon tool on your computer.
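It helps to see why rotation hurts so much more than translation. Under the standard thin-lens, small-angle picture, a yaw or pitch rotation of θ shifts the image on the sensor by about f·tan θ regardless of subject distance, while a sideways camera shift only moves the image by that shift times the magnification f/(u − f). The sketch below converts both to pixels; the function names and the pixel pitch parameter are illustrative assumptions, not part of our test.

```python
import math


def rotation_blur_px(theta_deg, focal_mm, pixel_pitch_um):
    """Image shift on the sensor, in pixels, from a yaw or pitch rotation.

    A rotation of theta moves the image by about f * tan(theta), no matter
    how far away the subject is.
    """
    shift_mm = focal_mm * math.tan(math.radians(theta_deg))
    return shift_mm * 1000.0 / pixel_pitch_um  # mm -> um -> pixels


def translation_blur_px(move_mm, focal_mm, subject_mm, pixel_pitch_um):
    """Image shift, in pixels, from moving the camera sideways by move_mm.

    The shift scales with magnification m = f / (u - f) (thin-lens model),
    so it is tiny for distant subjects and grows as magnification rises.
    """
    if subject_mm <= focal_mm:
        raise ValueError("subject must be farther away than the focal length")
    magnification = focal_mm / (subject_mm - focal_mm)
    return move_mm * magnification * 1000.0 / pixel_pitch_um
```

With illustrative numbers (not measured data), a 50 mm lens and 4 µm pixels, a 0.05° twitch smears the image by roughly 11 pixels, while a 0.5 mm sideways drift with the subject 3 m away moves it only about 2 pixels.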

We also found that weight is not actually a huge factor in our ability to control camera shake. The difference between how we shake a 2,300 gram SLR setup like the 1D X with the 24-70mm attached and a 650 gram DSLR like the Pentax K-50 is actually very small. It passes the smell test: when you’ve got a hand under the camera supporting it, your range of motion is limited; when you’re holding a point-and-shoot pinched between your thumbs and index fingers, you have no support and thus shake significantly more, even though the camera weighs significantly less.

Our findings also showed that, for yaw and pitch, we typically make lots of very small movements punctuated by a few relatively large ones. When we plotted the frequency of shakes against severity, we got a very nice exponential decay curve. All of our participants produced a similar curve, with experienced photographers (and the uncaffeinated) having a slight advantage.
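An exponential decay in a “frequency versus severity” plot can be checked with a straight-line fit on a log scale: if counts ≈ A·e^(−k·x), then log(counts) is linear in x with slope −k. The sketch below bins shake magnitudes and fits that line by ordinary least squares. The function names and bin width are hypothetical; this is one simple way to fit such a curve, not necessarily how we fit ours, and a maximum-likelihood estimate (rate = 1 / mean magnitude) is a common alternative that weights sparse bins less heavily.

```python
import math


def histogram(values, bin_width):
    """Count how many shake magnitudes fall into each bin of width bin_width."""
    counts = {}
    for v in values:
        idx = int(abs(v) // bin_width)
        counts[idx] = counts.get(idx, 0) + 1
    n_bins = max(counts) + 1 if counts else 0
    centers = [(i + 0.5) * bin_width for i in range(n_bins)]
    return centers, [counts.get(i, 0) for i in range(n_bins)]


def fit_exponential_decay(centers, counts):
    """Least-squares fit of counts ~= A * exp(-k * x) on a log scale.

    Empty bins are skipped because log(0) is undefined.
    Returns (A, k).
    """
    pts = [(x, math.log(c)) for x, c in zip(centers, counts) if c > 0]
    if len(pts) < 2:
        raise ValueError("need at least two non-empty bins")
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    slope = sxy / sxx
    intercept = my - slope * mx
    return math.exp(intercept), -slope
```

A larger fitted k means the curve falls off faster, i.e. fewer large shakes relative to small ones.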

Once we had our sample data, it was a simple matter of building the rig. (At least Timur made it look simple. I don’t know. He built it in two days. The man’s a wizard.) His rig is designed to accommodate all six axes of movement, though based on our findings we stuck with just yaw and pitch, since they’re the only significant factors. While Olympus’ 5-axis stabilization is intriguing (and likely better if you have a condition that causes linear movement, such as a tremor), the limited linear movement that hand-holding subjects cameras to only really makes a difference at extreme telephoto focal lengths. We then tested the rig using the same Android application that we used for our human subjects and fine-tuned the software until the rig accurately replicated their results.
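As an illustration of how a repeatable, human-like drive signal and a calibration check could fit together, here is a minimal sketch. Everything in it is hypothetical (function names, the mean excursion, the 10% tolerance); it is not the software running our rig. Each target angle is drawn from an exponential distribution with a random sign, matching the “many small moves, a few big ones” pattern, and a fixed random seed means every camera sees exactly the same shake.

```python
import math
import random


def make_drive_pattern(n_steps, mean_deg, seed=0):
    """Hypothetical yaw/pitch target angles for a shake rig.

    Each target's size is drawn from an exponential distribution with the
    given mean (lots of tiny moves, a few big ones) and a random sign.
    A fixed seed makes every run replay the exact same pattern.
    """
    rng = random.Random(seed)
    pattern = []
    for _ in range(n_steps):
        yaw = rng.expovariate(1.0 / mean_deg) * rng.choice((-1.0, 1.0))
        pitch = rng.expovariate(1.0 / mean_deg) * rng.choice((-1.0, 1.0))
        pattern.append((yaw, pitch))
    return pattern


def rms(values):
    values = list(values)
    return math.sqrt(sum(v * v for v in values) / len(values))


def matches_reference(rig, human, tolerance=0.1):
    """Calibration check: on both axes, the rig's RMS excursion must be
    within `tolerance` (as a fraction) of the human reference recording."""
    for axis in (0, 1):
        rig_rms = rms(s[axis] for s in rig)
        human_rms = rms(s[axis] for s in human)
        if abs(rig_rms - human_rms) > tolerance * human_rms:
            return False
    return True
```

In this scheme, the rig’s own recording (captured with the same phone app) would be passed as `rig` and a participant’s recording as `human`; if the check fails, the pattern’s scale gets adjusted and the run repeated.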

With our rig built and calibrated, we then had to design a testing methodology. We first looked at the standard CIPA drafted last year. They confirmed our primary finding (that yaw and pitch are the main villains of camera shake), but we took issue with some of their methods.

First, they use two different shake patterns based on weight: one for cameras under 400 grams and one for cameras over 600 grams, with both applied to cameras that fall in between. We found that weight wasn’t a contributing factor in camera shake. We also have two shake patterns, but one is reserved for smartphones and gripless point-and-shoots, regardless of weight.
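The difference between the two selection rules is easiest to see side by side. This sketch encodes the weight thresholds as described above for the CIPA draft and our grip-based rule; the pattern labels are placeholders, and treating exactly 400 g and 600 g as “in between” is an assumption, since the text only says “under” and “over.”

```python
def cipa_patterns(mass_g):
    """Weight-based choice, as described for the CIPA draft.

    Treating exactly 400 g and 600 g as 'in between' is an assumption.
    """
    if mass_g < 400:
        return ["light"]
    if mass_g > 600:
        return ["heavy"]
    return ["light", "heavy"]  # in-between cameras are tested with both


def grip_pattern(is_smartphone, has_grip):
    """Grip-based choice: phones and gripless point-and-shoots get their own
    pattern regardless of weight; everything else shares the other one."""
    if is_smartphone or not has_grip:
        return "pinch_grip"
    return "hand_supported"
```

Under the grip rule, a 500 g gripless compact and a 150 g phone share a pattern, while the CIPA rule would put them in different buckets purely by mass.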

For actual analysis, they also use a very high-contrast chart and a rather abstruse metric of their own devising, which translates as “comprehensive bokeh amount.” You can read all about it here. It’s fairly convoluted, and we ultimately decided not to go that way.

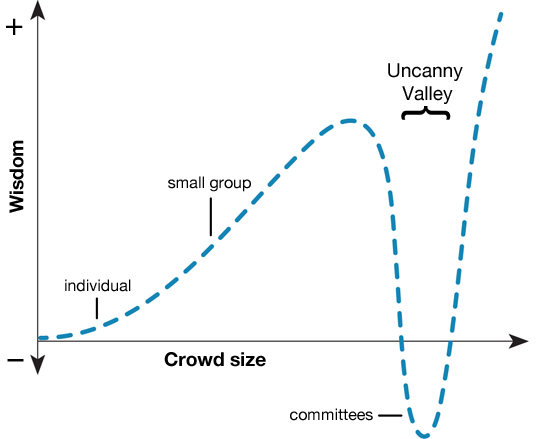

The CIPA standard does actually have quite a bit going for it, especially for something that came out of a committee. (If there’s wisdom in crowds, then committees are the uncanny valley.) It’s certainly far better than CIPA’s convoluted battery-life test, which calls for fun things like always using the LCD as a viewfinder, turning the power off and on every ten shots, running through the entire zoom range whenever you turn the camera on, and basically everything you never actually do with your camera. This is what usually happens when 27 engineers from 19 different companies try to reach a consensus.

We primarily use Imatest for our image analysis, so we’ve settled on a methodology that closely aligns with the one outlined here by Imatest’s creator, Norman Koren. It measures the detrimental effect camera shake has on sharpness, using the same slanted-edge chart we already use for resolution testing.
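Stabilization claims are usually quoted in stops, so one way to turn slanted-edge sharpness numbers into that unit is sketched below. It is a hypothetical reduction, not the Imatest procedure or our final scoring. It assumes MTF50 measured at a series of shutter speeds with stabilization off and on, a tripod `baseline` MTF50, and an arbitrary “still acceptably sharp” threshold of 80% of that baseline. The benefit is the log2 ratio of the slowest acceptable exposure times.

```python
import math


def limit_exposure(times_s, mtf50, baseline, threshold=0.8):
    """Longest exposure (s) at which MTF50 still reaches threshold * baseline.

    times_s must run from fastest to slowest; mtf50[i] is the sharpness
    measured at times_s[i]. Between the two bracketing measurements the
    crossing point is interpolated linearly in log2(time), i.e. in stops.
    """
    target = threshold * baseline
    if mtf50[0] < target:
        raise ValueError("already below target at the fastest shutter speed")
    for i in range(1, len(times_s)):
        if mtf50[i] < target:
            t_fast = math.log2(times_s[i - 1])
            t_slow = math.log2(times_s[i])
            frac = (mtf50[i - 1] - target) / (mtf50[i - 1] - mtf50[i])
            return 2.0 ** (t_fast + frac * (t_slow - t_fast))
    return times_s[-1]  # never dropped below target: a lower bound only


def stabilization_stops(times_s, mtf50_off, mtf50_on, baseline, threshold=0.8):
    """Stops of benefit = log2(slowest usable time with IS / without IS)."""
    t_off = limit_exposure(times_s, mtf50_off, baseline, threshold)
    t_on = limit_exposure(times_s, mtf50_on, baseline, threshold)
    return math.log2(t_on / t_off)
```

Interpolating in log2(time) rather than in seconds keeps the estimate consistent with the stop scale, since each standard shutter-speed step is roughly one stop.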

We’re still in the process of beta testing our rig, but we’ve begun collecting data on cameras we have in house. We’re not yet applying these results in our scoring, but we’ll be back soon to describe some of our findings in part II.

T. J. Donegan

July, 2013

Author: tjdonegan
