Geeks Go Wild: Data Processing Contest Suggestions

A couple of years ago I gave a talk on the history of lens design at the Carnegie-Mellon Robotics Institute. The faculty members were kind enough to spend the day showing me some of their research on computer-enhanced imaging. I’m a fairly bright guy with a doctorate of my own, but I don’t mind telling you that by the end of that day I was thoroughly intimidated and completely aware of my own limitations.

I’d love to tell you I gave a brilliant and entertaining talk that evening, but there were a lot of witnesses, so I’d better not lie that openly. I think only the fact that they serve cookies at the end of the talk kept most of the audience in place until I finished. I do remember, though, looking up at that room full of brilliant scientists and starting my talk with, “It somehow seems wrong that the guy with the lowest IQ in the room is the one giving the lecture.”

Two weeks ago I asked some of the more computer-literate people who read my blog to help me handle all the data our new optical bench generates. Over 70 people asked for the data sets, and 40 of those sent their ideas back to me. After spending the last five days poring over all of those suggestions, I feel just like I did that day at Carnegie-Mellon. I’m thoroughly intimidated and wondering why the participant with the lowest IQ is the one writing the blog post.

Mostly, though, I’m left with an incredible feeling of Internet camaraderie. Sure, there were some prizes offered, but the amount of time dozens of people spent preparing contributions and sharing ideas dwarfed the insignificant prizes I offered. Several people sent 20+ pages of documentation along with their methods. (I don’t mind admitting I had to get out my college statistics books to help me translate some of these.)

Some submissions just suggested methods to display data for blog reports. Others focused on methods for detecting decentered or inadequate lenses within a batch. I’m going to show some of the data displays, because that’s the part I want reader input on. Let me know what methods you think provide you the most information most clearly.

I’ll also mention several of the submissions that went way past just graphically representing the data (although most included that, too). I’ll mention these at the end of the article when I discuss the Medal Awards. Don’t mistake me leaving them to the end to mean I wasn’t overwhelmed by them. Honestly, several of them completely changed my thinking about what the best ways to detect bad lenses are, and what the most important data to share with you is.

But really, I’d like to give everyone who sent in a contribution something, because every single one helped me learn something or clarified ideas for me. It also reminded me why I do this stuff — because deep under all of the Google Adwords, the best part of the Internet lives — the part where people freely share their knowledge to help other people. Very cool things come out of that part of the Internet, and that’s the part I want to hang out in.

I also want to be clear that while those submitting gave me permission to reproduce images of their suggestions here, the images and intellectual property rights of their contributions remain theirs. You need their permission, not mine, to reproduce their work.

What I’ve Already Learned

A couple of general suggestions have been made by several people, and make so much sense that we’ve already adopted them.

From now on we’ll test every lens at 4 points of rotation (0, 45, 90, and 135 degrees). This will give us an overall image of the lens that includes all 4 corners as mounted on the camera.

Displaying 4 separate line pair/mm readings makes the graphs too crowded, so we’ll probably use 3 going forward. I’m still undecided whether that should be 10, 20, and 30 or 10, 20, and 40 line pairs/mm.

Displaying MTF50 data, or more likely, Frequency response graphs, is very useful and needs to be included along with MTF curves.

We knew that each lens’ asymmetry as we rotate around its axis is a very useful way to detect bad lenses. Some contributors found looking at the average asymmetry of all the copies of a certain lens is a good way to compare sample variation between two groups.

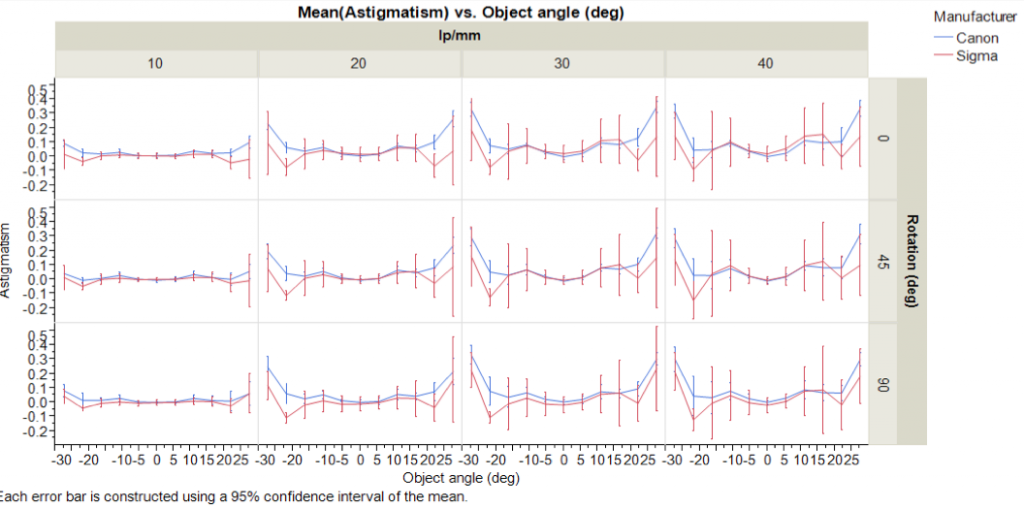

Variation of astigmatism, both between lenses of the same type and between groups of different types, is also a worthwhile measurement to report.
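To make the two measurements above a little more concrete, here is a minimal sketch of how rotational asymmetry and astigmatism might be reduced to one number per lens copy. The readings, the copy names, and the exact definitions (spread of the average MTF across rotations, and mean sagittal-tangential gap) are illustrative assumptions, not the calculations any contributor actually used.

```python
from statistics import mean

# Hypothetical MTF readings (0-1) at one field position and one frequency.
# Keys are rotation angles in degrees; each value is (sagittal, tangential).
lens_copies = {
    "copy_A": {0: (0.82, 0.78), 45: (0.81, 0.77), 90: (0.80, 0.79)},
    "copy_B": {0: (0.83, 0.70), 45: (0.72, 0.61), 90: (0.62, 0.50)},
}

def rotational_asymmetry(readings):
    """Assumed definition: max minus min of the average MTF across rotations."""
    per_angle = [mean(pair) for pair in readings.values()]
    return max(per_angle) - min(per_angle)

def astigmatism(readings):
    """Assumed definition: mean absolute sagittal-tangential gap across rotations."""
    return mean(abs(s - t) for s, t in readings.values())

for name, readings in lens_copies.items():
    print(name,
          round(rotational_asymmetry(readings), 3),
          round(astigmatism(readings), 3))

# Averaging the per-copy figure gives one number per lens type,
# which is what you would compare between two groups.
group_asymmetry = mean(rotational_asymmetry(r) for r in lens_copies.values())
print("group average asymmetry:", round(group_asymmetry, 3))
```

In this made-up data, copy_B changes much more as it rotates than copy_A does, which is the kind of behavior that would flag a copy for a closer look.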

Outside of that, nothing is written in stone, and I look forward to your input about the different ways of displaying things. You don’t have to limit your comments to choosing just one thing. We want the best possible end point, so taking the way one graph does this and combining it with the way another does that is fine.

Several people found similar solutions separately, so where possible I’ve tried to group the similar entries. I apologize for not showing all the similar graphs, but that would have made this so long I’m afraid we would have lost all the readers. I can’t give you cookies for staying until the end of the article, so I’ve tried to keep it brief.

MTF Spread Comparisons

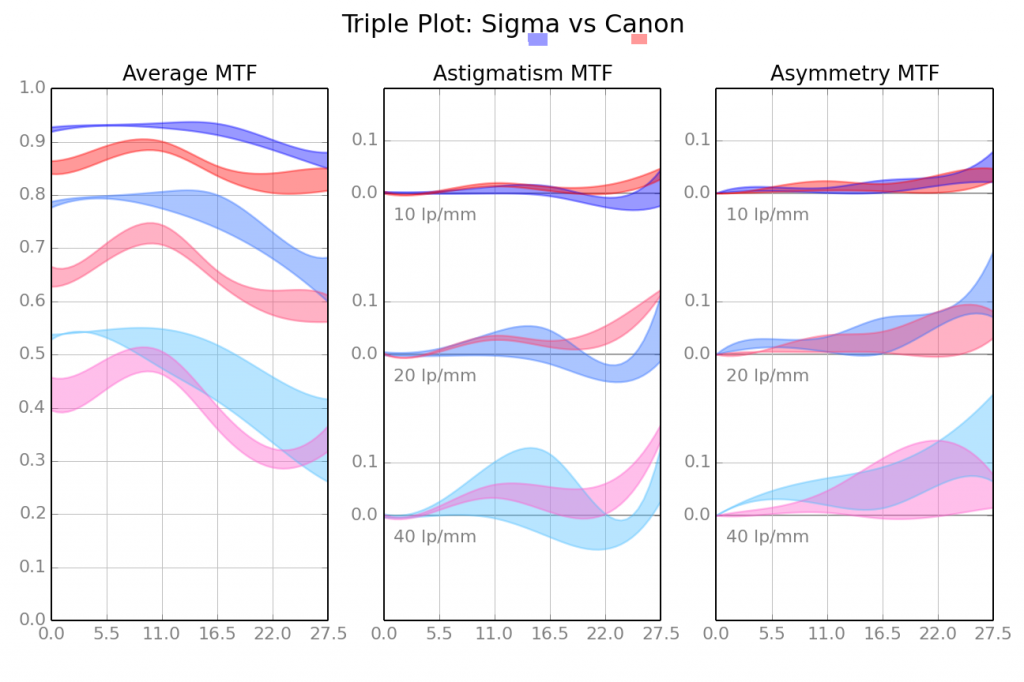

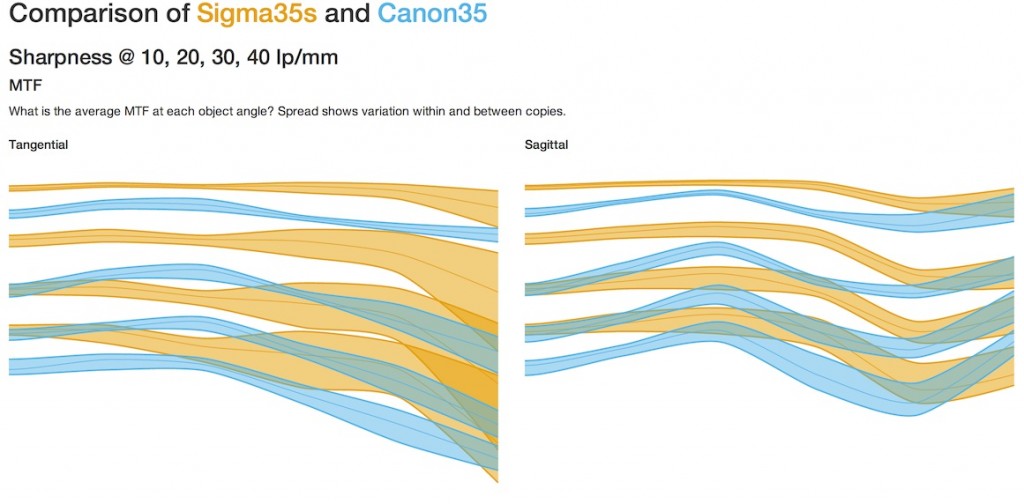

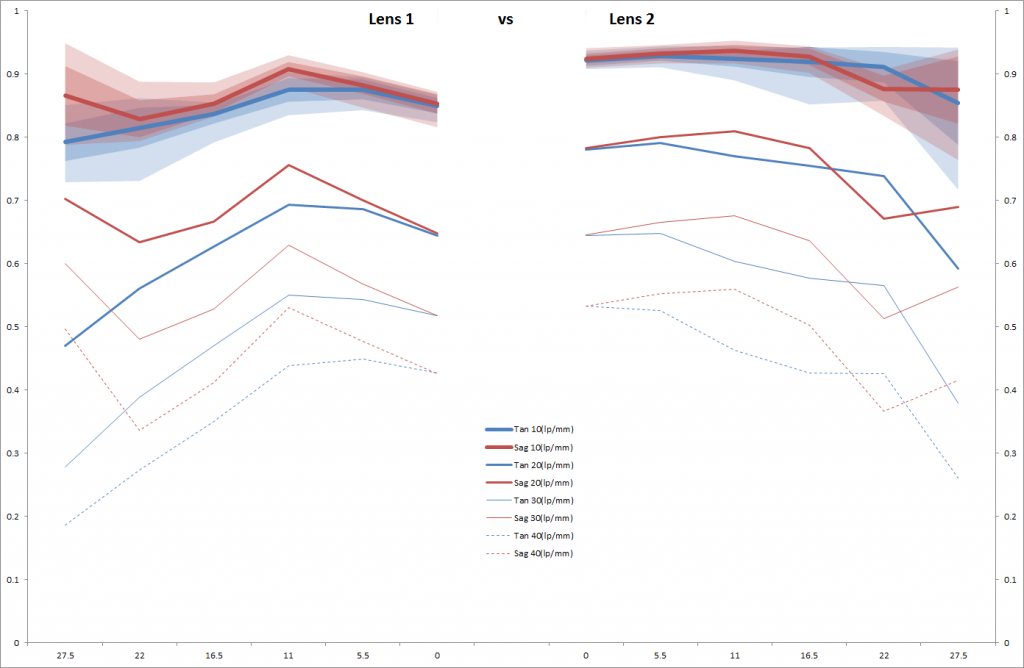

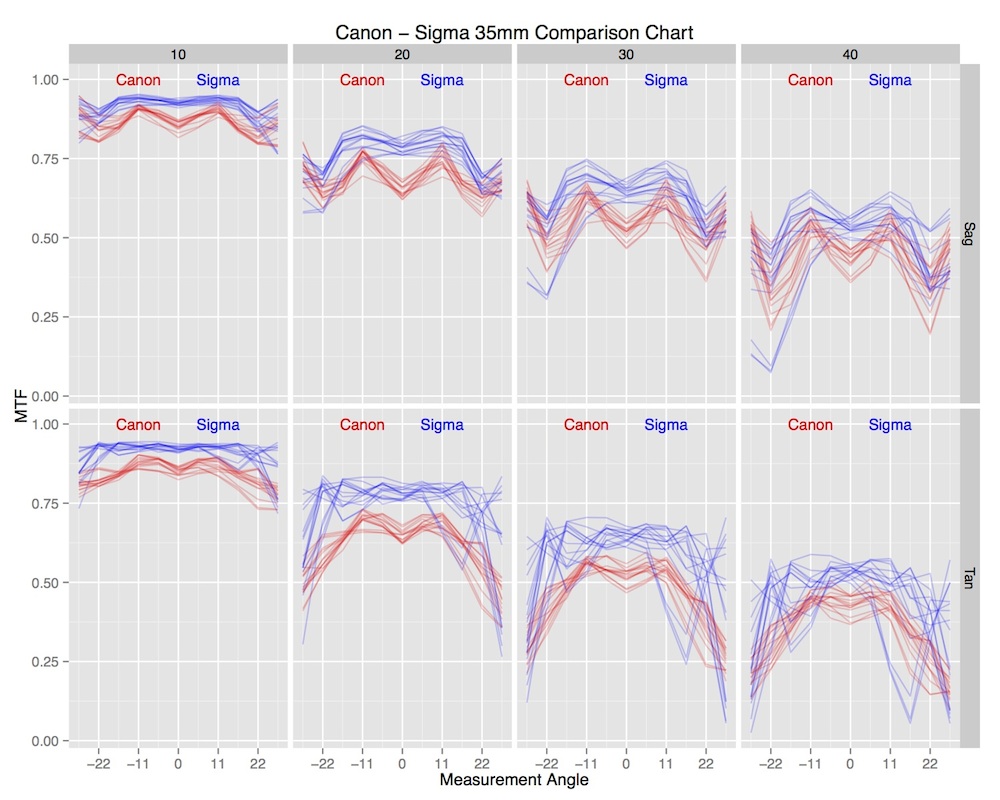

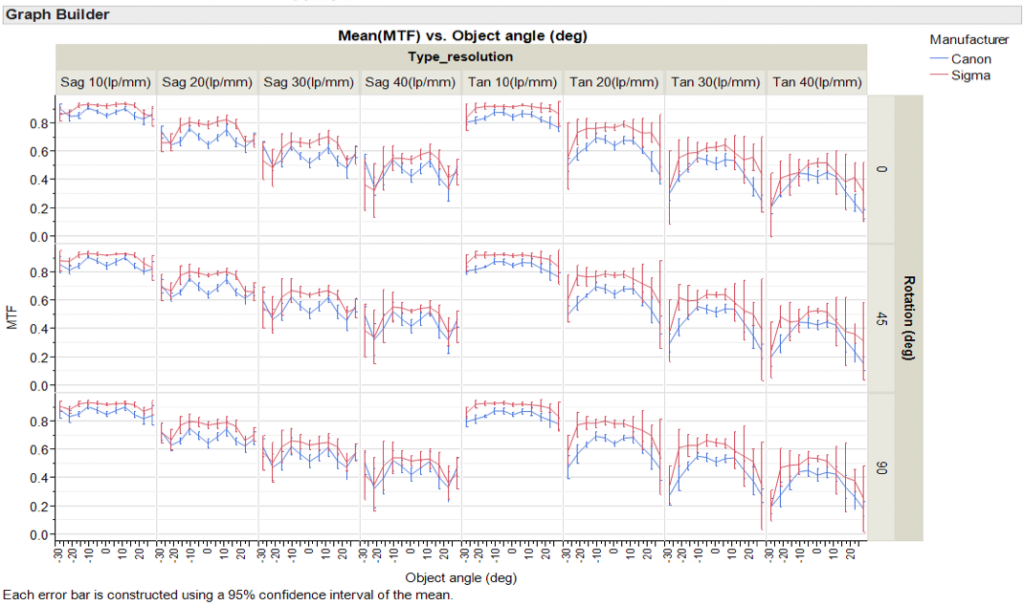

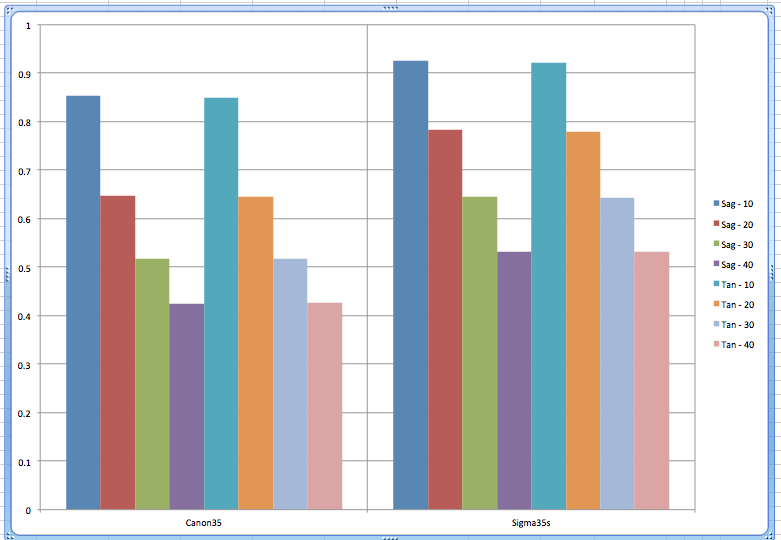

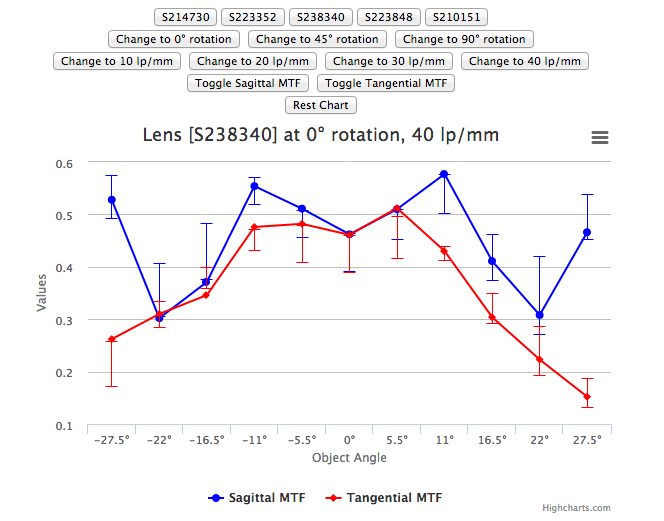

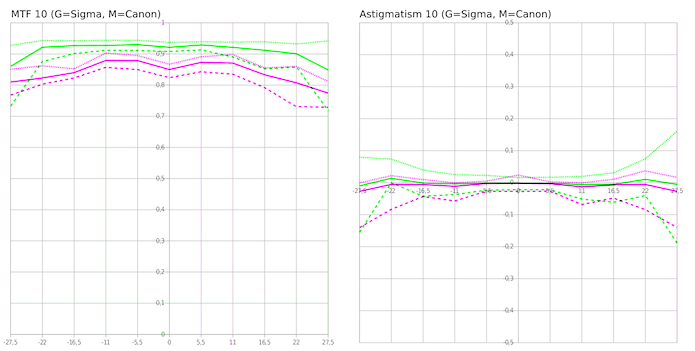

These graphs show the range or standard deviations of all lenses of that type, while comparing the two different types of lenses. Obviously they aren’t all completely labeled, etc., but they all give you a clear idea of how the data would look. Remember, the data is for just 5 Canon 35mm f/1.4 and 5 Sigma 35mm f/1.4 Art lenses, and I purposely included a mildly decentered copy in the Sigma lenses. Please don’t consider these graphs anything other than a demonstration. They are not a test of the lenses, just of ways of displaying data and detecting bad copies.

Area Graphs

Several people suggested variations using a line to show the mean value and an area showing the range of all values. I won’t show all their graphs today, but here are some representative ones.

Curran Muhlberger’s version separates tangential and sagittal readings into two graphs, comparing the two lenses in each graph.

Jesse Huebsch placed sagittal and tangential for each lens on one graph and then placed the two groups side-by-side to compare. (Only the 10lp/mm has range areas. The darker area is +/- 1 SD, the lighter area is absolute range.)

Winston Chang, Shea Hagstrom, and Jerry all suggested similar graphs.
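For readers curious about what goes into an area graph like these, the sketch below computes the three ingredients at each image height: the mean line, a +/- 1 SD band, and the absolute range. The numbers are invented for illustration and the `summarize` helper is hypothetical; it is not taken from any of the submissions.

```python
from statistics import mean, stdev

# Hypothetical 10 lp/mm sagittal MTF for five copies of one lens type.
# Each row is one copy; columns are image heights from center to edge.
image_heights_mm = [0, 5, 10, 15, 20]
copies = [
    [0.92, 0.90, 0.86, 0.80, 0.70],
    [0.93, 0.91, 0.85, 0.78, 0.68],
    [0.91, 0.89, 0.84, 0.79, 0.72],
    [0.92, 0.90, 0.87, 0.81, 0.69],
    [0.90, 0.86, 0.80, 0.70, 0.58],  # a weaker copy widens the bands
]

def summarize(copies):
    """Return the mean, +/- 1 SD band, and absolute range at each position."""
    summary = []
    for column in zip(*copies):  # one column = all copies at one image height
        m, sd = mean(column), stdev(column)  # stdev is the sample SD
        summary.append({
            "mean": m,
            "sd_band": (m - sd, m + sd),
            "range": (min(column), max(column)),
        })
    return summary

for height, row in zip(image_heights_mm, summarize(copies)):
    print(f"{height:>2} mm  mean={row['mean']:.3f}  "
          f"sd=({row['sd_band'][0]:.3f}, {row['sd_band'][1]:.3f})  "
          f"range=({row['range'][0]:.2f}, {row['range'][1]:.2f})")
```

A plotting library would then draw the mean as a line and fill the two bands behind it, darker for the SD band and lighter for the range.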

Maintaining Original Data

Some people preferred keeping as much of the original data visible as possible.

Andy Ribble suggests simply overlaying all the samples, which requires separating out the different line pair/mm readings to make things visible. It certainly gives an intuitive look at how much overlap there is (or is not) between lenses.

Error Bar Graphs

Several people preferred using error bars or range bars to show sample variation.

I-Liang Siu suggests a similar separation, but using error bars rather than printing each curve the way Andy suggested. For this small sample size the error bars are large, of course, especially since I included a bad lens in the Sigma group. But it provides a detailed comparison for two lenses.

William Ries suggested sticking with a plain but clear bar graph. Error bars would be easy to add, of course.

Aaron Baff made a complete app that lets you select any of numerous parameters, graphing them with error bars. While it’s set up in this view to compare a specific lens (the lines) to average range (the error bars), it would function very well to compare averages between two types of lenses.

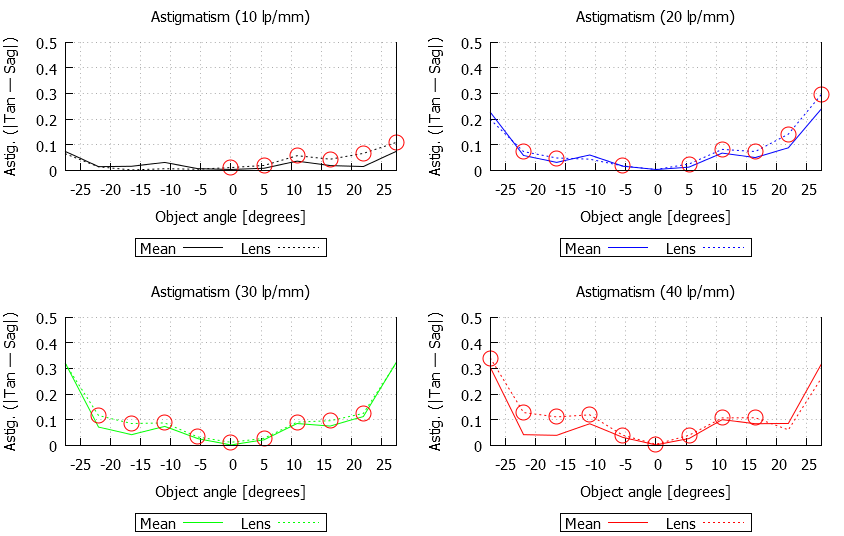

Separate Astigmatism Readings

Several people suggested that astigmatism be made a separate graph, with the MTF graph showing either the average, or the better, of sagittal and tangential readings.

I-Liang Siu uses an astigmatism graph as a complement to his MTF graph.

Lasse Beyer has a similar concept, but using range lines rather than error bars.

Shea Hagstrom’s entry concentrated on detecting bad lenses, but the astigmatism graphs he used for that purpose might be useful for data presentation.

Winston Chang’s contribution also used astigmatism for bad sample detection, presenting each lens as an area graph of astigmatism. I’m showing his graphs for individual copies of lenses because I think it’s impressive to see how the different copies vary in the amount of astigmatism, but it would be a simple matter to make a similar graph of average astigmatism.

Sami Tammilehto brought MTF, astigmatism, and also asymmetry through rotation (how the lens differs at 0, 45, and 90 degrees of rotation) into one set of graphs. While rotational asymmetry is one of the ways we detect truly bad lenses, it is also a good way to demonstrate sample variation. In this graph, the darker hues show 10lp/mm, lighter ones 20, and the lightest ones 40, which would be useful if there were significant overlap.

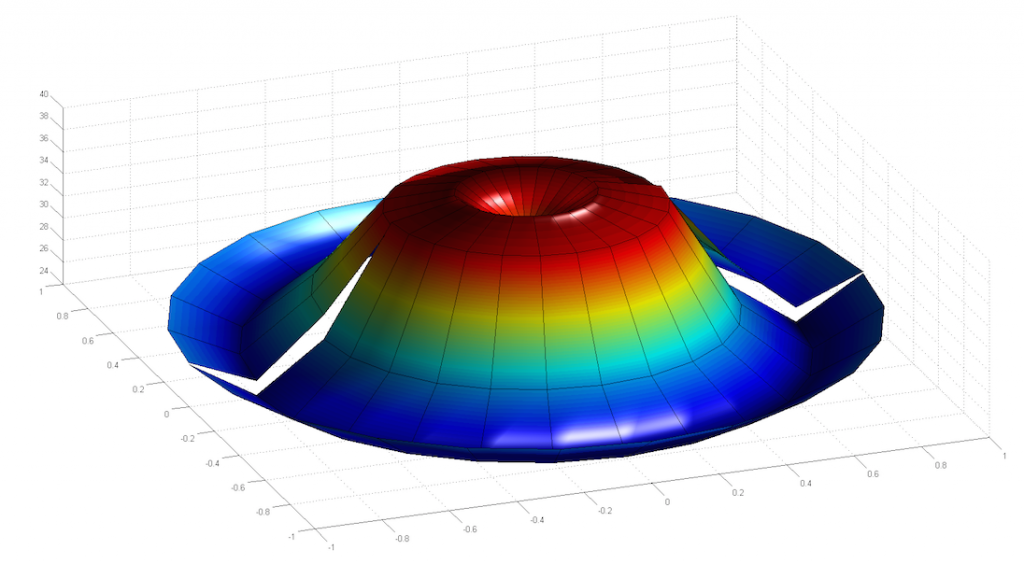

Polar and 3-D Presentations

Ben Meyer had several different suggestions, but among them was creating a 3-D polar map of MTF (note his map is incomplete because we only tested in 3 quadrants rather than 4. The fault is mine, not his.) This one is stylized, but you get the idea.

Like Ben, Chad Rockney’s entry had a lot more to it than just data presentation, but he worked up a slick program that gives a 3-D polar presentation with a slider that lets you choose what frequency to display. Chad submitted a very complex set of program options that include ways to compare lenses. In the program, you’d click which frequency you want to display, but this automated gif shows how that would work. You can also rotate the box to look at the graph from different angles.

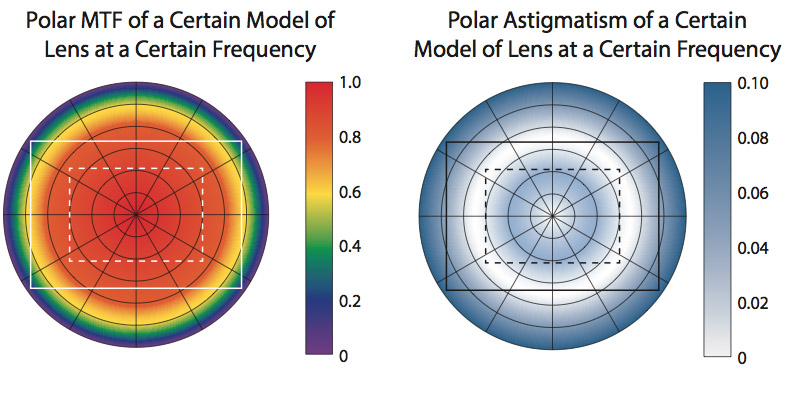

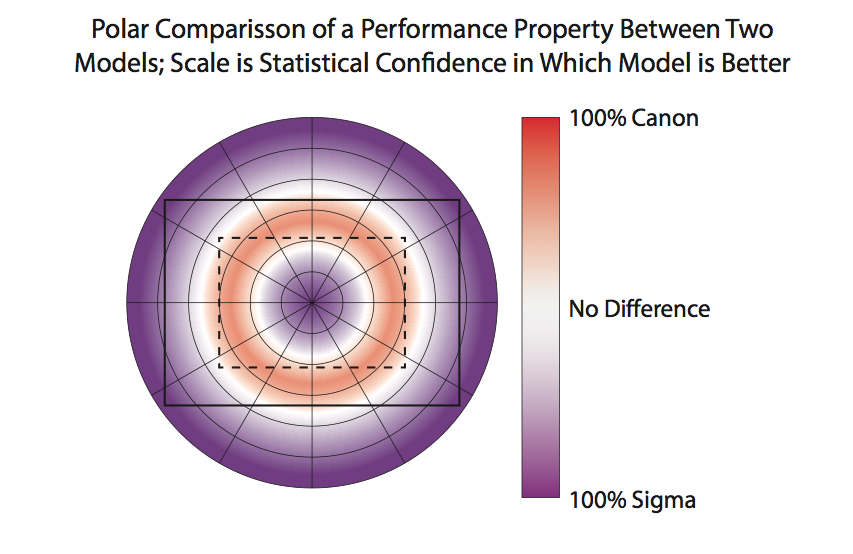

Daniel Wilson uses polar graphs for both MTF and astigmatism. It’s like looking at the front of the lens, which makes it very intuitive.

He also made a nice polar graph comparing the Canon and Sigma lenses which I think is unique and useful.

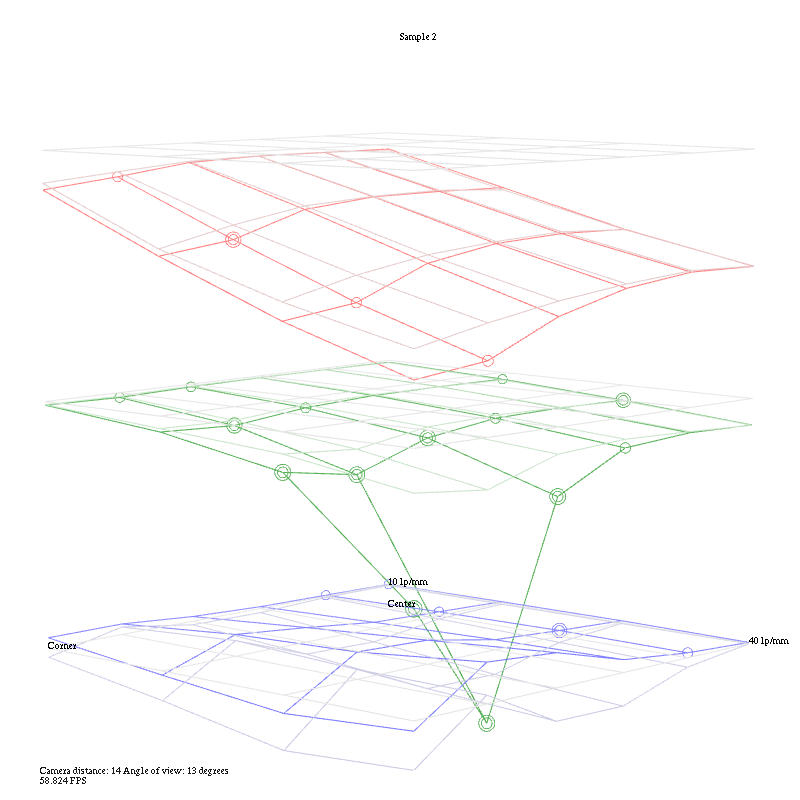

Vianney Tran made a superb app to display the data as a rotatable, selectable 3-D graph. I’ve posted a screen clip, but it loses a lot in the translation and I don’t want to link directly to his website and cause a bandwidth meltdown for him. This screen grab compares Canon and Sigma 35s at 10 lp/mm.

Walter Freeman made an app that creates 3-D wireframes. It’s geared toward detecting bad lenses and the example I used is doing just that – showing the bad copy of the Sigma 35mm compared to the average of all copies.

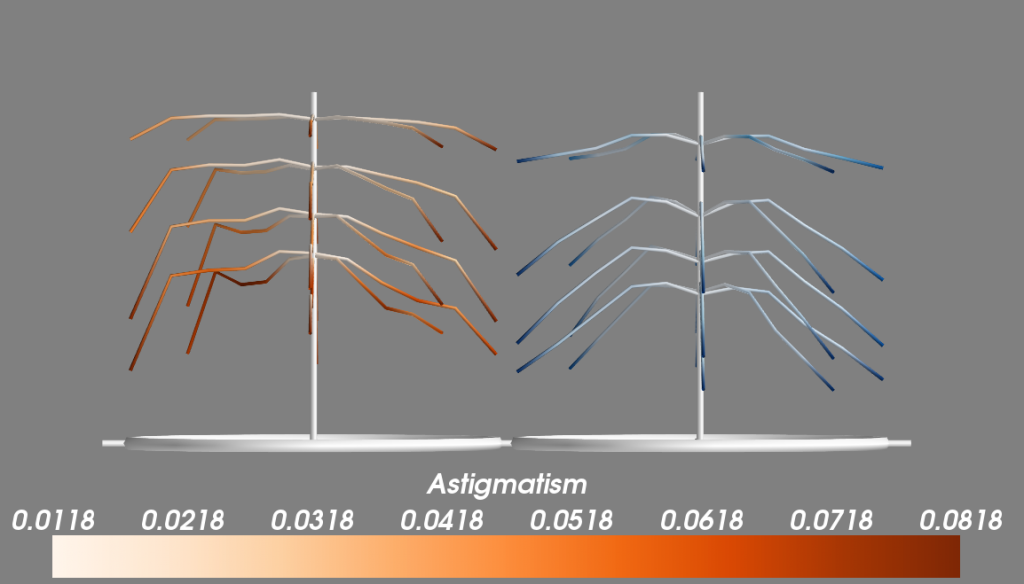

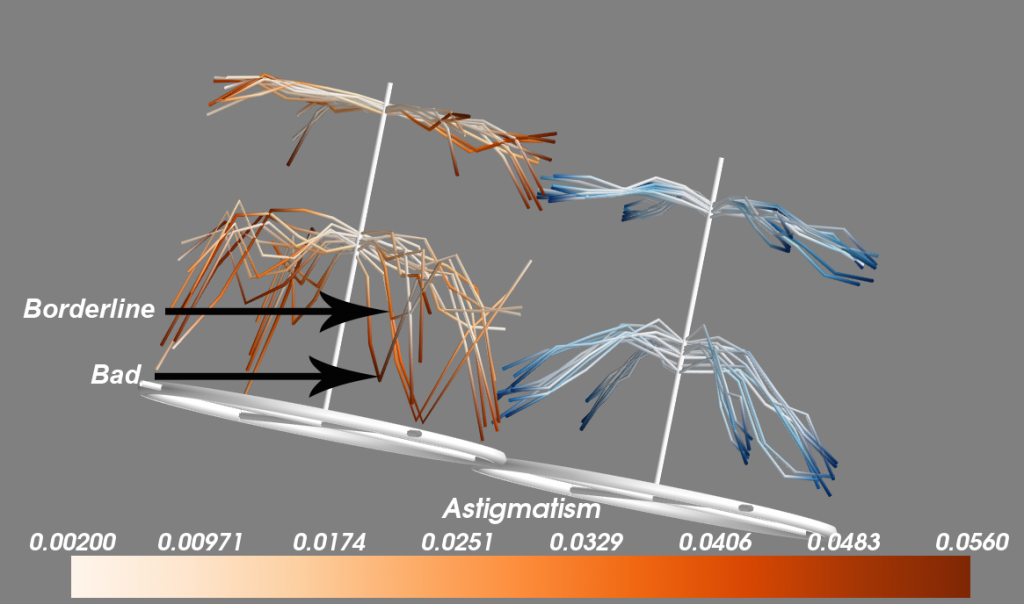

Subrashsis Niyogi came up with one of the coolest looking entries, presenting things in a way I’d never thought of. His Christmas tree branches represent the MTF at 10, 20, 30, and 40 lp/mm for each lens, with each branch showing one rotation. How low the branches bend represents the average MTF. The darker the color of the branch, the more astigmatism is present. It’s beautiful and brilliant.

His application lets you tilt and rotate them, select the displayed lp/mm, and display multiple copies at once to pick up outliers.

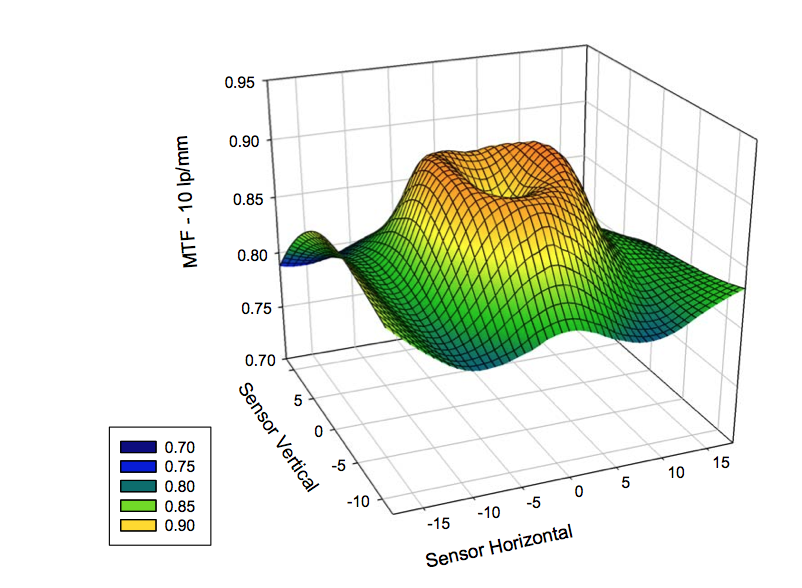

Rahul Mohapatra and Aaron Adalja put together a complete package for testing lenses to detect outliers, but also included a very slick 3-D graph for averages.

Still More Different Ways of Presenting MTF data

These graphs are really different, but that makes them interesting. I’ll let you guys decide if they also have a better data presentation factor.

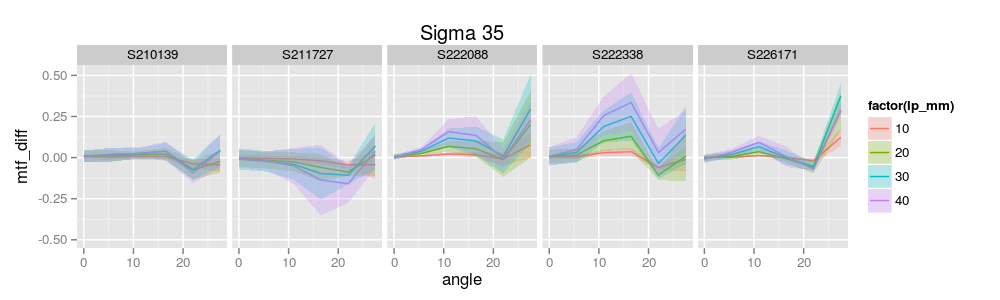

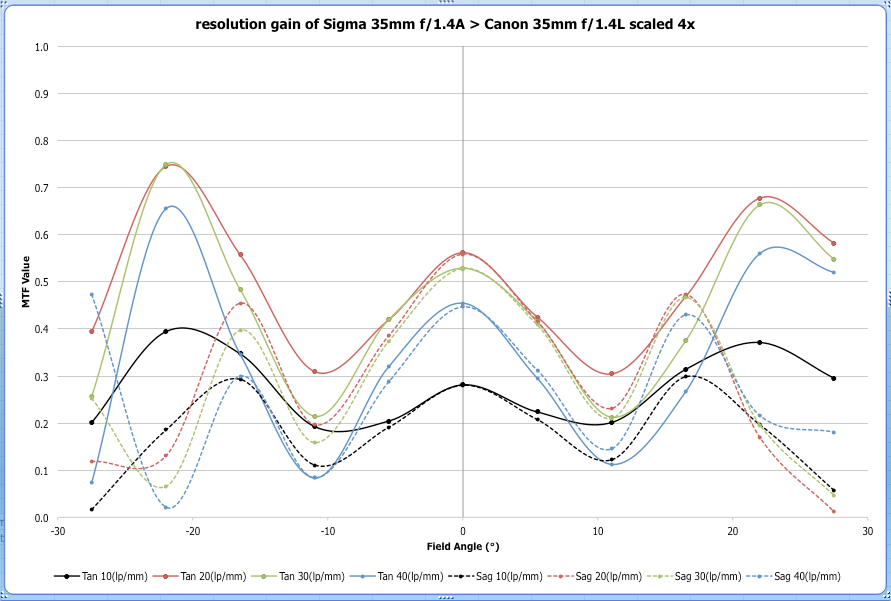

Brandon Dube went with a graph that shows the difference between lenses. In this example, all 5 Sigma lenses are plotted against the mean for all Canon lenses (represented as “0” on the horizontal axis) at each location from center to edge. This would have to be a supplemental graph, but it does a nice job of clearly saying “how much better” one lens is than another.
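The arithmetic behind a difference graph like this is simple, and a rough sketch may help. The values and the `difference_from_reference` helper below are hypothetical, and this is only one plausible reading of the approach (each test copy minus the reference group's mean at each position), not Brandon's actual code.

```python
from statistics import mean

# Hypothetical MTF values; each row is one copy, and the columns are
# field positions from center to edge.
canon_copies = [
    [0.90, 0.85, 0.75],
    [0.92, 0.86, 0.77],
    [0.91, 0.84, 0.76],
]
sigma_copies = [
    [0.94, 0.88, 0.80],
    [0.93, 0.89, 0.79],
    [0.90, 0.80, 0.66],  # a weak copy falls below the reference
]

def difference_from_reference(test_copies, reference_copies):
    """Subtract the reference group's per-position mean from every test copy."""
    reference_mean = [mean(column) for column in zip(*reference_copies)]
    return [
        [value - ref for value, ref in zip(copy, reference_mean)]
        for copy in test_copies
    ]

for i, diffs in enumerate(difference_from_reference(sigma_copies, canon_copies)):
    print(f"Sigma copy {i + 1}:", [f"{d:+.2f}" for d in diffs])
```

Positive numbers mean the copy beats the reference average at that position, and negative numbers mean it falls short, so the reference group sits on the zero line by construction.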

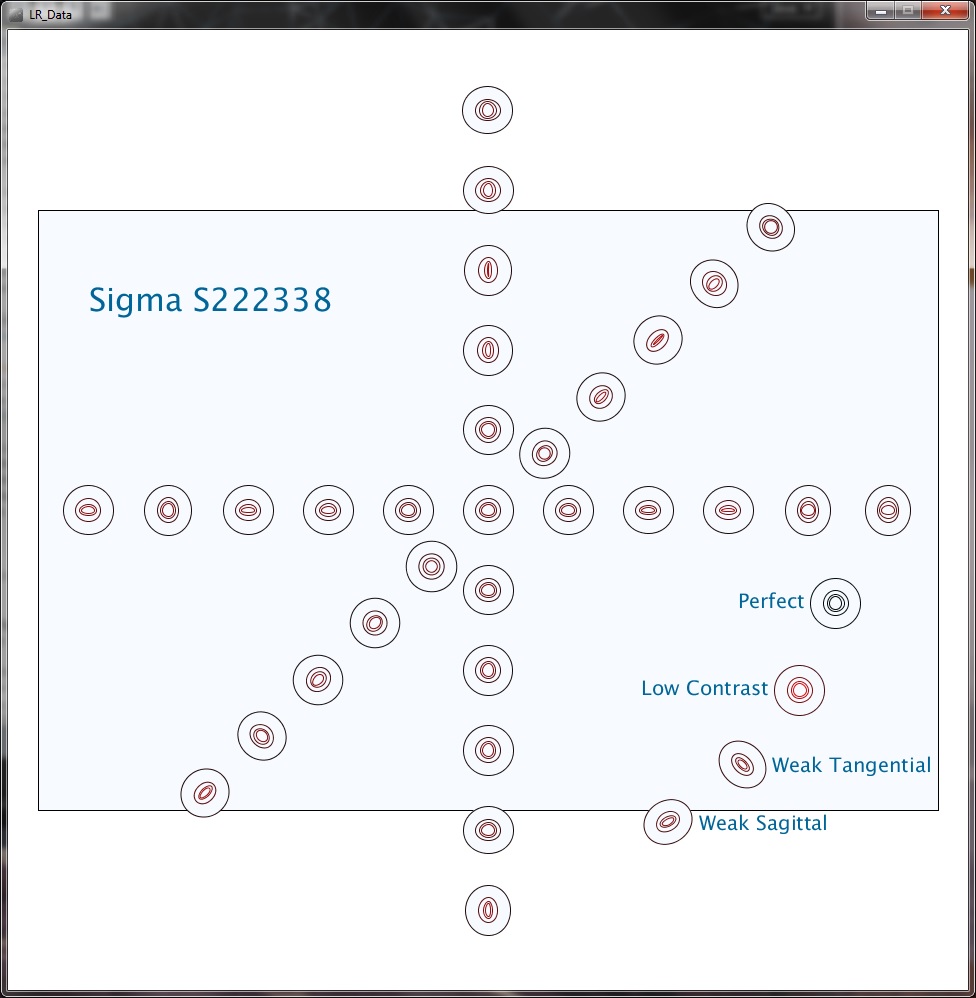

Tony Arnerich came up with something completely new to me. His graph presents the various tested points as a series of ovals on a line (each line consists of the measurements at one rotation point). More oval means more astigmatism and more color means lower MTF readings.

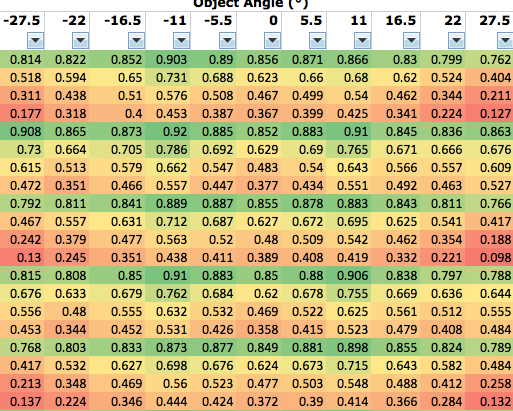

William Ries suggested a “heat map” similar to the old PopPhoto methods, giving actual numbers in a table, but using color to signify where MTF falls off.

Bronze Medals

The Bronze Medal is for people who made a suggestion for graphing methods that we will use in the blog. I’m still not sure which methods we’ll finally choose and want reader input, so we may award more Bronze Medals later. But for right now, the following people have made suggestions that I will definitely incorporate in some way, so they are Bronze Medal winners. The official Bronze Medal prize is we will test two of your lenses on our optical bench and furnish reports, but those of you who live outside the U. S. email me and we’ll figure out some other prize for you, unless you want to send your lens by international shipping.

Jesse Huebsch (I’ve already used his side-by-side comparison suggestion in my first blog post). Several people made similar suggestions, but Jesse’s was the first I received.

Sami Tammilehto’s triple graphs of MTF, astigmatism, and asymmetry are amazingly clear and provide a huge amount of information in a very concise manner.

Winston Chang, whose display of astigmatism as an area graph will be incorporated.

Again, Bronze Medal awards aren’t closed. There are several other very interesting contributions and I suspect the comments from readers will help me see things I’ve missed, after which I’ll give more Bronze Medals. Which, of course, aren’t really medals. Each Bronze medalist can send me two lenses to have tested on our optical bench. If they live overseas or don’t have lenses they want tested, we’ll figure out some other way to thank them – so if that fits you, send me an email.

Outlier Lens Analysis

A number of people did some amazing things to detect decentered and outlier lenses. To be blunt, we’ve been doing a pretty good job with this for years, better than most factory service centers. But after getting this input, I can absolutely say we’ll be upping our abilities significantly soon.

Nobody actually made the Platinum Prize, but a number of people came close. So instead, I split the Platinum Prize up so we could award a large number of Gold Medal prizes. Since most of the winners live outside the U. S. and can’t use the $100 Lensrentals credit given for a Gold Medal, I’ll give it in cash (well, check or Paypal, actually).

Gold Medal Winners

Gold Medal Winners had to develop a fairly simple way to create logical, easy-to-understand graphs that demonstrate the variation between copies of each type of lens, and offer an easy way to compare different types of lenses. It turns out there were a lot of paths to Gold, because so many people taught me things I didn’t know, or even things I didn’t know were possible.

Professor Lester Gilbert. His work doesn’t generate any graphs, but the statistical analysis to detect outlier lenses is extremely powerful.

Norbert Warncke’s outlier analysis using Proper Orthogonal Decomposition not only shows a new way to detect outliers, it does a good job of detecting if someone has transcribed data improperly.

The following win both Gold and Bronze medals.

Daniel Wilson’s polar graphs provide a great amount of information in a concise package. Several people used polar graphs, but Daniel’s implementation was really clear and included a full program in R for detecting bad copies.

Rahul Mohapatra and Aaron Adalja, whose freestanding program written in R not only made the cool graph you saw above, but also does a powerful analysis for variation and bad copies.

Curran Muhlberger (you saw the output of his Lensplotter web app at the top of the article) programmed a method to overlay individual lens results over the average for all lenses of that type, showing both variation and bad copies.

Chad Rockney wrote a program in Python that displays the graphs shown above and analyzes lenses against the average of that type and against other types.

Subrashsis Niyogi’s Christmas Tree graphs are amazing. While his Python-Mayavi program doesn’t mathematically detect aberrant lenses, his graphics make them stand out dramatically.

Where We Go From Here

First I want to find out what you guys want to see — which type of displays and graphics you find most helpful for blog posts. So I’m looking forward to your input.

The contest was fun and I got more out of it than I ever imagined. I want to emphasize again that the submissions are the sole property of the people who did the work (and they did a lot of work).

I’m heading on vacation for 10 days. (There won’t be any blog posts from the cruise ship, I guarantee you that.) Once I get back and get everyone’s input on what they like, I’ll contact the people who did the work and negotiate to buy their programming and/or hire them to make some modifications.

We’ve already been modifying our data collection procedures (and our optical bench, to account for what we’ve learned about sensor stack thickness). Hopefully we’ll be cranking out a lot of new lens tests, complete with better statistics and better graphic presentation, within a month or so.

Roger Cicala

Lensrentals.com

June, 2014

Author: Roger Cicala

I’m Roger and I am the founder of Lensrentals.com. Hailed as one of the optic nerds here, I enjoy shooting collimated light through 30X microscope objectives in my spare time. When I do take real pictures I like using something different: a Medium format, or Pentax K1, or a Sony RX1R.
