Using Rapid MTF Testing – How We Test & Monitor Our Lenses

WARNING! THIS IS A GEEK LEVEL III ARTICLE. It’s about testing, not image making. If you’re into geeky testing stuff, you’ll probably find this fascinating. If you’re not into geeky stuff but are into how lenses change over time, you’ll find skimming it worthwhile. If you’re a Fanboy or Fangirl, there’s nothing here for you; there are no brand comparisons.

About This Testing

In a previous post, I wrote about the idea of rapid MTF Testing and how we got there. The three or four people who actually read it thought it was cool. We already had arguably (really it’s not arguable, but people like to argue) the best lens testing system available. But the test time was too long to test every lens after every rental. We tested a given lens every few months using our MTF system, but day to day used test-charts and lens projectors.

Ideally, testing should be to a higher standard than the actual use. Charts and projectors were good enough back in the days of film cameras, low resolution digital, and HD Video. But today, someone making 36 megapixel, 3-D images is finding out more about the lens than a 2-D projector or chart picture test could show. (Fun fact: a good lens on a 50-megapixel camera will let you see the individual print dots that make up the lines on commonly used test charts. How do you determine ‘line pairs of resolution’ when the lines have become dots?)

The Rapid MTF system is a ‘through focus’ test, so it shows lens performance in 3 dimensions; it detects tilts and decentering better than a chart or projector can. It also gives measured numbers at multiple points so we can keep the data for every test of every lens.

For the first time, we can look at how a given copy has tested multiple times over several months. Plus, there are, you know, numbers. “It dropped from 0.76 to 0.64” has more meaning than, “I think it might be softer than last time.” Of course, there’s no way one of our techs, testing 200 lenses a day, would possibly remember what it looked like last time.

After a year of development and a few months of beta testing, we knew that the new testing method worked. But we weren’t sure how much better it would be, or what other information it might give us. We’ve been using it for months now, tested thousands of lenses multiple times, and learned a lot.

Some of what we learned is about how much this improved our testing. Some of what we learned is about better ways to identify lenses that can be improved by adjustment in-house. Of course, like all things, some of what we learned goes under the ‘how could we be so stupid’ category. So this post is a combination of celebrating how great our new testing is and laughing at stupid Roger tricks.

Plus, like most of the stuff we do, we learned some things about the lenses that you may find interesting.

Looking at Data

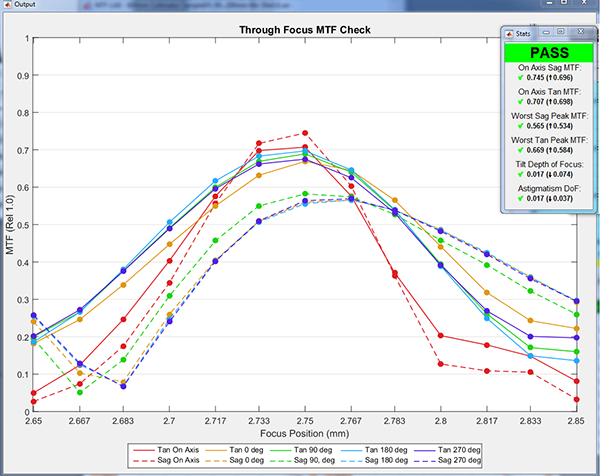

Let’s start with a single test of a single lens. The computer graphs the test results and then checks six measurements to decide if the lens passes or fails. If the lens passes all six requirements it passes, as this one did.

Lensrentals.com, 2018

What are those six key measurements, you should ask? Basically, two are center sharpness (on-axis Sag and Tan MTF), and two check that the worst corner is good enough (the worst of the four off-axis Sag and Tan peaks). Next is tilt (the horizontal axis is focus position; if the lens is tilted, the four off-axis peaks don’t line up as they do for the lens above; they are spread apart). Finally, there’s astigmatism (the sagittal and tangential peaks have different focus positions).
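For the code-minded, the six-way check can be sketched in a few lines of Python. This is only an illustration of the idea, not our actual program: the names `LensResult`, `Standard`, and `failed_checks`, and every number in it, are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class LensResult:
    """One rapid MTF run, reduced to the six pass/fail numbers."""
    center_sag: float    # on-axis sagittal MTF (higher is better)
    center_tan: float    # on-axis tangential MTF (higher is better)
    corner_sag: float    # worst of the four off-axis sagittal peaks
    corner_tan: float    # worst of the four off-axis tangential peaks
    tilt: float          # spread in focus position of the off-axis peaks
    astigmatism: float   # focus separation between sag and tan peaks


@dataclass
class Standard:
    """Hypothetical limits for one lens model (illustrative values only)."""
    min_center: float
    min_corner: float
    max_tilt: float
    max_astigmatism: float


def failed_checks(r: LensResult, s: Standard) -> list:
    """Return the names of the checks that fail; an empty list means a pass."""
    checks = {
        "center_sag": r.center_sag >= s.min_center,
        "center_tan": r.center_tan >= s.min_center,
        "corner_sag": r.corner_sag >= s.min_corner,
        "corner_tan": r.corner_tan >= s.min_corner,
        "tilt": r.tilt <= s.max_tilt,
        "astigmatism": r.astigmatism <= s.max_astigmatism,
    }
    return [name for name, ok in checks.items() if not ok]


standard = Standard(min_center=0.70, min_corner=0.50, max_tilt=0.02, max_astigmatism=0.02)
good = LensResult(0.76, 0.75, 0.58, 0.55, 0.01, 0.01)
soft = LensResult(0.64, 0.75, 0.58, 0.55, 0.01, 0.03)

print(failed_checks(good, standard))  # []
print(failed_checks(soft, standard))  # ['center_sag', 'astigmatism']
```

Returning the list of failed checks, not just a yes or no, tells the tech which of the six measurements to look at first.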

Every lens is different, so how does the computer decide what’s passing and failing for a given lens? We start by taking the MTF and variance data that we’ve published in blog posts for years and plugging that into the program. But the program is self-learning. After it’s done hundreds of runs on a given lens, we tell it to recalculate its standards. With several lenses, after we ran a couple of hundred tests, the machine told us our standards were too low.

The opposite has happened a couple of times, too. Our initial standards were too high. When 14 of the first 16 lenses fail, we have to reassess standards. There are also a very few lenses where ‘normal’ is nowhere near perfect. A couple of lenses, for example, have tilt in every single copy we’ve ever tested (several dozen copies at least), so we have to override the program and tell it ‘do not fail for tilt.’
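Here is one way a recalculation like that could work, sketched in Python. The rule used (mean minus two standard deviations of the stored readings) is an assumption picked for illustration, not what our program actually does, and `recalculated_minimum` and `active_failures` are hypothetical names. The second function shows how a manual ‘do not fail for tilt’ override might be applied.

```python
from statistics import mean, stdev


def recalculated_minimum(history, k=2.0):
    """Lower limit for a higher-is-better reading: mean minus k standard deviations.

    The k-sigma rule is an assumption for illustration only.
    """
    if len(history) < 2:
        raise ValueError("need at least two test runs")
    return mean(history) - k * stdev(history)


def active_failures(failures, ignored=()):
    """Drop failures that a manual override says to ignore (for example 'tilt')."""
    return [name for name in failures if name not in ignored]


center_history = [0.70, 0.72, 0.74, 0.76, 0.78]  # made-up center readings
new_minimum = recalculated_minimum(center_history)
print(round(new_minimum, 3))  # 0.677

print(active_failures(["tilt"], ignored={"tilt"}))                # []
print(active_failures(["tilt", "center_sag"], ignored={"tilt"}))  # ['center_sag']
```

With only a handful of runs a limit like this swings wildly, which fits with waiting for hundreds of tests before recalculating.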

Results Over Time

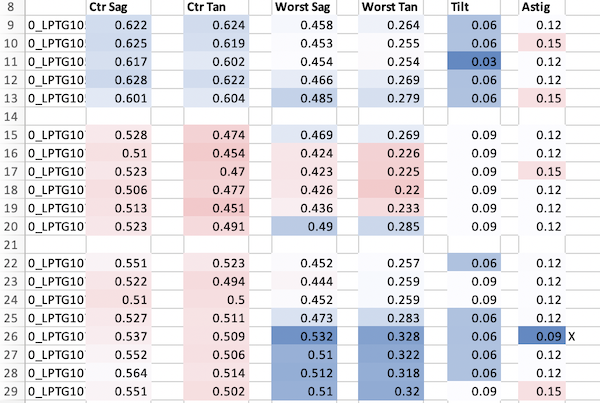

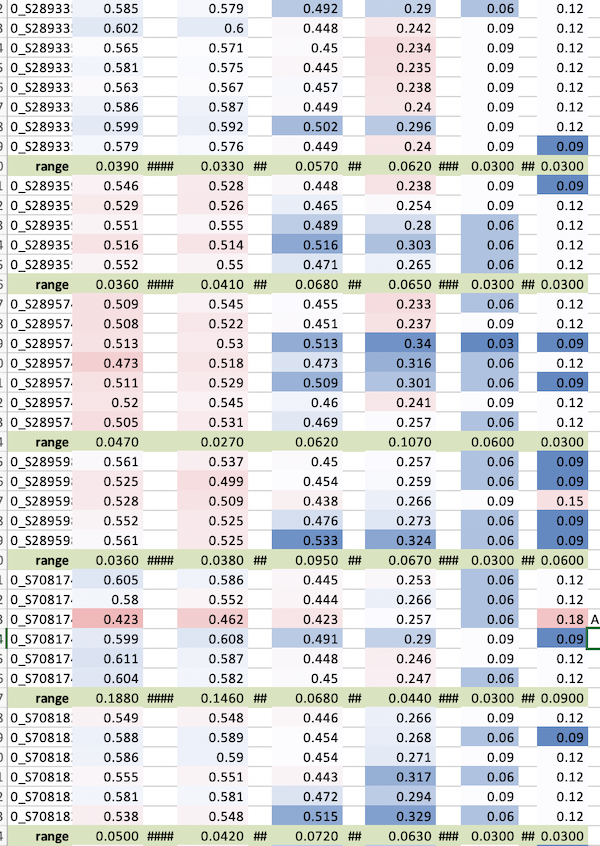

One question I get asked a lot is ‘do lenses get softer with use?’ This type of testing lets us save the numerical results every time a lens is tested so we can look at that. An Excel spreadsheet of all the test results for a given lens is really interesting. Here’s a tiny clip from a 1,000-plus-row spreadsheet. This sheet was for Canon 50mm f/1.2 results; I chose it because that’s a lens that requires frequent adjustment.

Lensrentals.com, 2018

The empty rows separate three different copies of the lens; each lens was tested after each rental. I did a crude conditional format for each column, with dark blue being the best and dark red (there aren’t any here) being near-failing or failing. You can see the tendency that we see: a given lens tends to be similar with each repeated test.

It’s generally consistent enough so that any change stands out. I can then pull up that lens and see if anything happened to it. You’ve probably noticed the last lens in the table above had a change; it actually improved somewhat in its ‘worst corner’ readings. There’s an X in the column at the time of change. The X tells me one of our techs opened that lens up to remove dust before sending it for testing. Cleaning the dust out doesn’t improve the lens optically. However, with this lens, the elements have to be recentered after dusting the front. Our tech recentered it better than it came from the factory.

I’m going to show you a longer piece of the spreadsheet now, and let you scroll down it like I do when I review reports (except mine is over 1,000 rows long). Mostly this is to give you an illustration that a given copy doesn’t change very much over time. Granted this is only a few months, but each test is after the lens was shipped, used, and shipped back. You’ll have to take my word that if I showed you all the spreadsheets for hundreds of lenses over thousands of test runs, this is how they all look.

Lensrentals.com, 2018

You may notice one other ‘event’ in the ones above. The lens under our first example has a change, and there’s an “A” for ‘adjusted optically,’ after that test. The point isn’t that the adjustment made it better (it did), but rather the lens was tested four times and passed, then failed with about the same results. This is the ‘tightening of range’ that I mentioned earlier. Our program showed us we should hold this lens to higher standards than we were.

More Problems

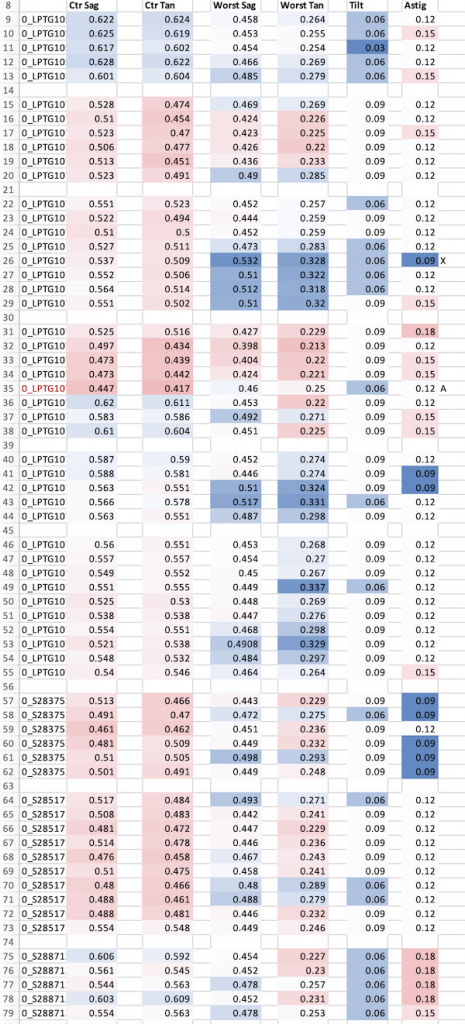

This next clip is from a different lens. This time I just pulled the problem lenses we found (well, and one problem we created).

Lensrentals.com, 2018

Too Good to Be True

We’ll start with the top because it’s one that made me go ‘DUH, we should have thought of that.’ That lens didn’t suddenly get better for one test run; the tech didn’t check to make sure the aperture was wide open before testing it. Once would have been funny, but it’s happened a dozen times (out of a few thousand test runs), so Markus is now adding a ‘too good to be true’ outcome to the software. We should have thought of this to start with. It’s important because testing a lens stopped down basically is not testing the lens. Some lenses that are awful wide open look fine stopped down a bit.

There was even more ‘we should have realized that’ usefulness in these ‘too good to be true’ test results. We found a high frequency of ‘stopped down’ results in one Cine lens. It turns out that the tech wasn’t at fault; the aperture wasn’t fully opening to maximum diameter when the aperture ring said it was. (It wasn’t a huge difference; for example, a T1.5 was probably set at T1.7 or T1.8 in reality.) It’s an easy adjustment to make, but we had been missing it before we started this kind of testing.
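A ‘too good to be true’ check could be as simple as the Python sketch below. It flags a reading that sits far above what that lens model normally does wide open. The three-standard-deviation cutoff and the name `too_good_to_be_true` are assumptions for illustration; this is not the code Markus is adding.

```python
from statistics import mean, stdev


def too_good_to_be_true(model_history, reading, k=3.0):
    """True if a reading is more than k standard deviations above the model's mean.

    A result this high suggests the lens was tested stopped down.
    """
    upper = mean(model_history) + k * stdev(model_history)
    return reading > upper


wide_open_history = [0.70, 0.72, 0.74, 0.76, 0.78]  # made-up wide-open readings

print(too_good_to_be_true(wide_open_history, 0.90))  # True
print(too_good_to_be_true(wide_open_history, 0.78))  # False
```

A flagged result would call for a retest with the aperture checked, since a stopped-down result says nothing about the lens wide open.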

The Drop Kick, the Duster, and

The second lens in the set above is really the perfect example of why testing is important. After the test, we emailed the renter and asked if the lens had been dropped. He told us it had but that it had worked fine afterward, so he hadn’t mentioned it. We found a bent focusing cam inside and had to send it off to the service center.

The next one still passes (although barely) but is definitely worse since it was dusted. We pulled it off the shelf to readjust it after we saw this. It still passes, so just testing on a pass-fail basis we didn’t notice it. But looking at serial tests, we know it can be made better than it is because it used to be better than it is.
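Comparing a lens to its own history, not just to the pass line, might look like the Python sketch below. It compares the latest reading with the median of that copy’s earlier tests. The 0.05 tolerance and the name `classify_change` are assumptions made for the example.

```python
from statistics import median


def classify_change(previous, latest, tolerance=0.05):
    """Compare the latest higher-is-better reading with this copy's own history."""
    if not previous:
        raise ValueError("need at least one earlier test")
    delta = latest - median(previous)
    if delta < -tolerance:
        return "worse"
    if delta > tolerance:
        return "better"
    return "stable"


earlier_tests = [0.76, 0.75, 0.77, 0.76]  # made-up readings for one copy

print(classify_change(earlier_tests, 0.64))  # worse
print(classify_change(earlier_tests, 0.77))  # stable
print(classify_change(earlier_tests, 0.83))  # better
```

A ‘worse’ result on a lens that still passes is exactly the kind of copy worth pulling off the shelf for readjustment.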

The last lens is another example of randomness: it didn’t fail its first optical test but was having AF problems, and one of our techs restacked the AF motor. It’s optically different after that; a bit sharper in the center but with more astigmatism at the edges. Again, it still passes, but disassembling and reassembling made it a bit different.

Another Look at Variation

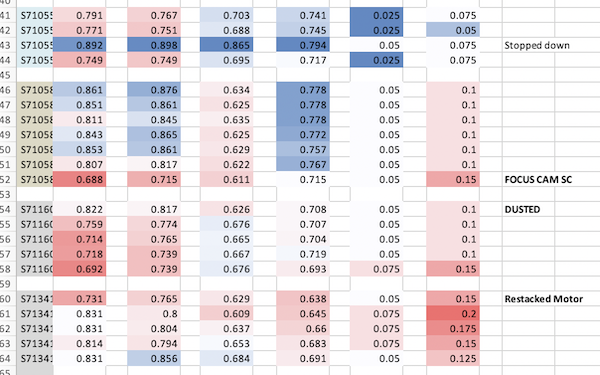

We also have another redundant check that is a benefit of being able to store data for multiple tests. The example below is from yet another lens. In this spreadsheet, we simply added the high-to-low range for each lens (green cells). Remember, the first two columns are center resolution, the next two are weakest-corner resolution, then come tilt and astigmatism. This lens doesn’t have much tilt or astigmatism, so we’ll ignore those.

Lensrentals.com, 2018

One lens, the second from the bottom, did have a failed result requiring optical adjustment. If you look at the ranges, you’ll see they’re very consistent among all the other lenses, basically less than 0.05 in the center and 0.07 in the worst corner. But the bad result gives a much higher range.

While that wasn’t necessary to identify the bad lens, it can help us identify bad test runs, copies that might have a little looseness inside and vary from test-to-test, or a type of lens that just has a lot more variation, which helps us set our standards (more on that in a bit).
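The high-to-low range check is easy to sketch in Python. The serial labels and readings below are invented, `ranges_by_copy` and `unusually_wide` are hypothetical names, and the 0.05 limit simply echoes the center range mentioned above for this one lens.

```python
def ranges_by_copy(tests):
    """High-to-low range of one measurement for each copy (keyed by serial)."""
    return {serial: max(values) - min(values) for serial, values in tests.items()}


def unusually_wide(ranges, limit):
    """Serials whose range exceeds the limit typical for this lens model."""
    return [serial for serial, spread in ranges.items() if spread > limit]


center_tests = {
    "copy A": [0.76, 0.74, 0.77],  # made-up center readings
    "copy B": [0.75, 0.60, 0.76],  # one bad run stretches the range
}

ranges = ranges_by_copy(center_tests)
print(unusually_wide(ranges, limit=0.05))  # ['copy B']
```

A wide range doesn’t say what went wrong; it could be a bad test run, a loose element, or real damage, so it is a reason to look rather than a verdict.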

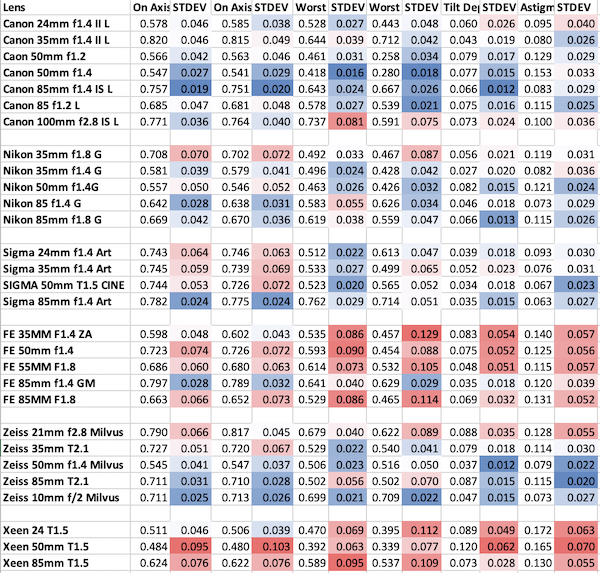

We can take this a little further in summary reports. For right now, I have to import these by hand, so I’m only showing a few examples. In this sheet, I’ve highlighted the standard deviations for each test on each type of lens, and again colored them by percentiles, with red having the highest variation and blue the lowest. So where you see red, the lens has a lot of copy-to-copy variation, where you see blue, it has little.
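For anyone who would rather script this than import it by hand, here is a rough Python sketch of the same summary: a standard deviation per lens type, then a percentile rank to drive the coloring. The lens names and readings are invented, and `variation_by_model` and `percentile_ranks` are hypothetical names.

```python
from statistics import stdev


def variation_by_model(readings):
    """Standard deviation of one measurement across all test runs of each lens model."""
    return {model: stdev(values) for model, values in readings.items()}


def percentile_ranks(variation):
    """Percentage of models whose variation is at or below each model's."""
    n = len(variation)
    return {
        model: 100.0 * sum(other <= sd for other in variation.values()) / n
        for model, sd in variation.items()
    }


readings = {
    "Lens A": [0.74, 0.75, 0.76, 0.75],  # made-up center readings
    "Lens B": [0.60, 0.75, 0.68, 0.80],
    "Lens C": [0.70, 0.74, 0.72, 0.76],
}

ranks = percentile_ranks(variation_by_model(readings))
for model, rank in sorted(ranks.items(), key=lambda item: item[1]):
    print(model, round(rank))
```

High ranks would be colored toward red (most variation) and low ranks toward blue (least).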

Please don’t read too much into this; it’s just an interesting demonstration. I’ll be getting into accurate detail in future posts; this is just a preliminary overview. Remember, I have way more data on Canon lenses (600 to 1,000 tests per lens) because we have way more of them. Most of the others have only 100-300 test runs, so their numbers may change a bit as more data comes in.

Lensrentals.com, 2018

The point I’m trying to make isn’t which brands and lenses are best and worst (I’ll get to that later). It’s simply that certain lenses, and to a lesser extent certain brands, have more variation than others. All of our tests simply make certain that the lens meets its own standards. If its variation is high out of the box, the variation is high.

As one example, I’ll show how this confirms something I’ve said for years. The Sony 35mm f/1.4 ZA lens is very consistent for center sharpness. If you get one, center sharpness will be good. The low variation our test shows in center sharpness confirms that. All of the 35mm f/1.4s seem to have a weak corner or side, though, and the red off-axis readings demonstrate that. If you get one, the corners are not likely to be equal. This is similar to what our variation graphs show when we do MTF testing, but it’s easier to compare a lot of lenses this way.

Summary

I guess my takeaway message here is several-fold. First, having historical data on a lens lets us do more than just ‘pass-fail’ testing. It can give feedback to techs about their repairs. We’re learning that with some lenses, at least, we can adjust optically to better than ‘good enough to pass.’ A year ago I was comfortable we had the best optical testing that anyone had. Now it’s better.

Probably the most pertinent point for you guys is that lenses don’t change over time very much unless something happens. They don’t change much with use, but they can change with abuse, even accidental abuse. If a lens gets dropped (even if it seems fine afterward) or repaired, it may well change optically.

Another point that I’ll expand on in a future post is for those who spend a lot of time and effort in ‘getting a great copy.’ Some lenses have such variation that you probably will have to go through at least dozens of copies if your definition of ‘great copy’ is ‘near perfect copy.’ If you limit your definition to something like ‘good in the center, I can live with bad corners’ or ‘I don’t mind some tilt’ then you really can find some great bargains.

Roger Cicala, Aaron Closz, and Markus Rothacker

Lensrentals.com

November, 2018

Author: Roger Cicala

I’m Roger and I am the founder of Lensrentals.com. Hailed as one of the optic nerds here, I enjoy shooting collimated light through 30X microscope objectives in my spare time. When I do take real pictures I like using something different: a Medium format, or Pentax K1, or a Sony RX1R.
