Sigma 24-70mm f/2.8 DG OS HSM Art Sharpness Tests

Sigma has been on an incredible run these last 5 years, releasing one amazingly sharp lens after another. They’ve made lenses no one has ever tried before and not only succeeded, they made them amazingly good on the first try. Their quality control has become as good as anyone’s, better than most. And their repair service has become one of the best out there.

Like many of you, we’ve waited for the Sigma 24-70 f/2.8 Art lens for quite a while. It would have image stabilization, it would be less expensive than the brand-name alternatives, and it would be sharp as heck, because it was a Sigma Art.

I’ll save those of you who hate to read the trouble of reading. Even Babe Ruth hit singles sometimes. It had to happen. Sigma has made lens after lens that exceeded everyone’s wildest expectations. Sooner or later they were going to make one that didn’t. This isn’t a bad lens, but we’ve come to expect amazing things from Sigma Art lenses and this lens is not amazing.

As always, these are the results of 10 tested copies, each tested at four rotations. For those who don’t speak MTF, the easy version is that higher is better, and dotted and solid lines of the same color sitting close together are better. And as always, this is an MTF test, not a review. I’m still not sure I can pronounce bokeh, much less describe it to you.
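For the curious, here is a minimal sketch of how readings from several copies at several rotations could be boiled down to one number per point on a chart. The function name, the nested-list layout, and the plain averaging are all assumptions made for illustration; this is not a description of the actual bench software.

```python
from statistics import mean

def average_mtf(readings):
    """Average MTF readings taken at one image height and one frequency.

    readings[copy][rotation] is a single MTF value between 0 and 1.
    The layout and the simple mean are illustrative assumptions.
    """
    # First average the rotations for each copy, then average the copies.
    per_copy = [mean(rotations) for rotations in readings]
    return mean(per_copy)

# Two hypothetical copies, four rotations each (made-up numbers).
example = [
    [0.5, 0.5, 0.5, 0.5],
    [0.75, 0.75, 0.75, 0.75],
]
result = average_mtf(example)
```

With 10 copies the outer list would simply have 10 entries of four readings each.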

MTF Results

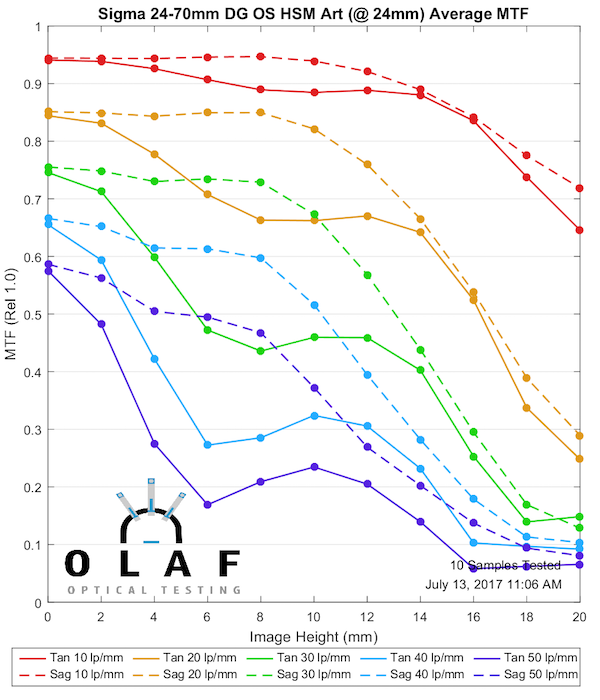

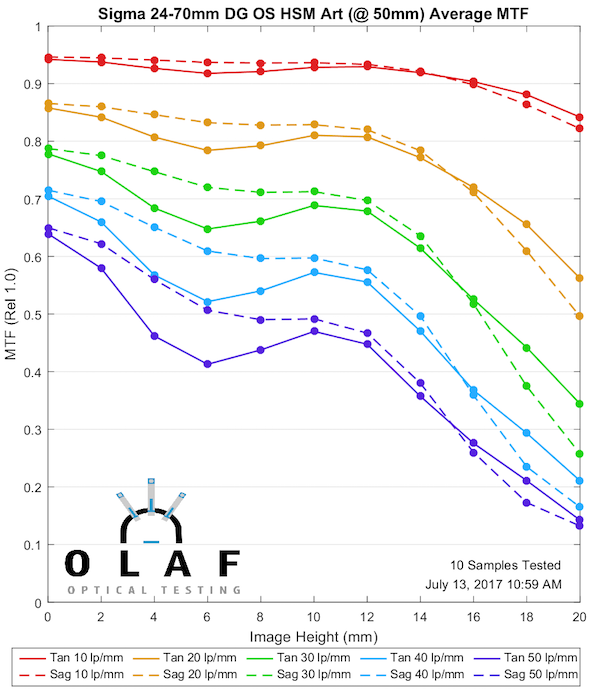

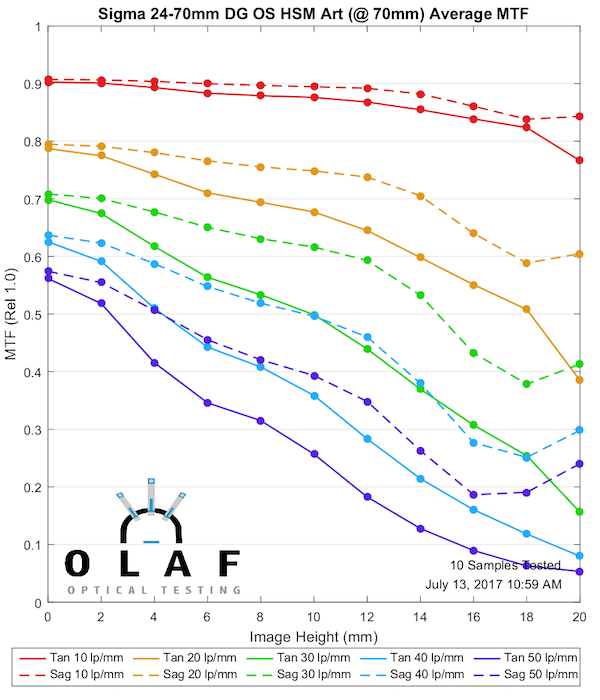

We’ll look at the results at 3 focal lengths: 24mm, 50mm, and 70mm. We expect most 24-70mm zooms to perform best at 24mm and be weakest at 70mm. The Sigma is actually a bit different, having its best performance at 50mm.

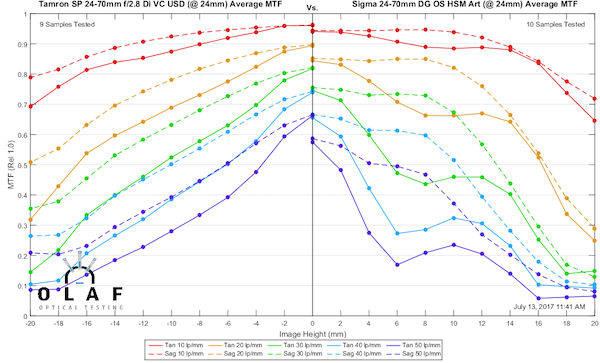

24mm

One thing to note at 24mm is the bulge of astigmatism-like separation in the middle of the field, from 4mm to 12mm or so off-axis. I’m not sure what this will look like in photographs, but it might be, well, different. Or maybe not noticeable. I’ll be interested to see.
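If you wanted to put a rough boundary on a bulge like that, one way is to look at the gap between the sagittal and tangential curves at each image height and flag where it gets large. The sketch below assumes you have both curves sampled at the same heights; the function name, the 0.1 threshold, and the sample numbers are invented for illustration and are not measurements from this lens.

```python
def separation_zone(heights_mm, sagittal, tangential, threshold=0.1):
    """Return the image heights where the two curves are far apart.

    'Far apart' means |sagittal - tangential| exceeds the threshold.
    The threshold value is an arbitrary assumption.
    """
    return [
        h
        for h, s, t in zip(heights_mm, sagittal, tangential)
        if abs(s - t) > threshold
    ]

# Made-up curves sampled every 4mm from the center to 20mm off-axis.
heights = [0, 4, 8, 12, 16, 20]
sag = [0.80, 0.78, 0.75, 0.72, 0.60, 0.50]
tan = [0.80, 0.60, 0.55, 0.58, 0.55, 0.45]
zone = separation_zone(heights, sag, tan)
```

For these invented numbers the flagged heights are 4mm, 8mm, and 12mm, which is the kind of mid-field zone described above.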

Olaf Optical Testing, 2017

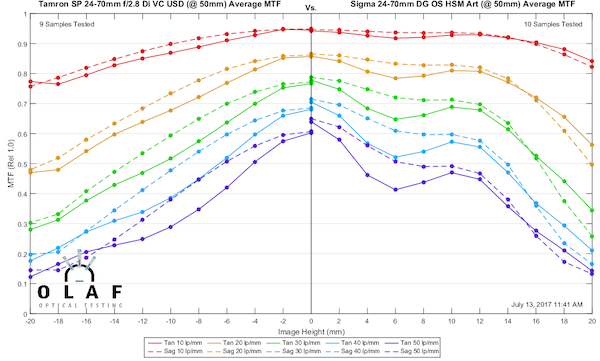

50mm

Things sharpen up nicely and the curves become much smoother and more regular. I expect 50mm is not only the sharpest part of the zoom range, but probably has the best out-of-focus appearance, too.

Olaf Optical Testing, 2017

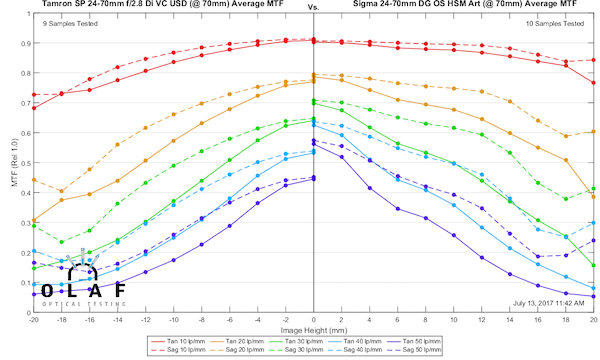

70mm

Resolution drops off at 70mm, but the curves stay smooth and good away from center.

Olaf Optical Testing, 2017

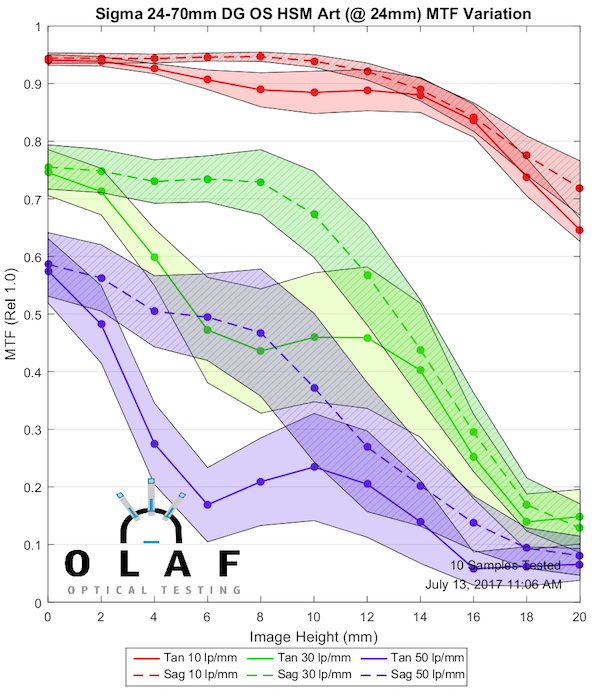

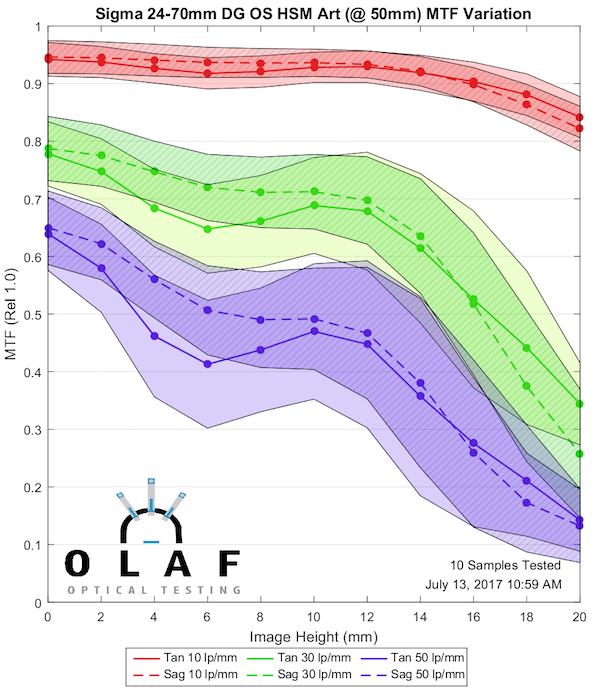

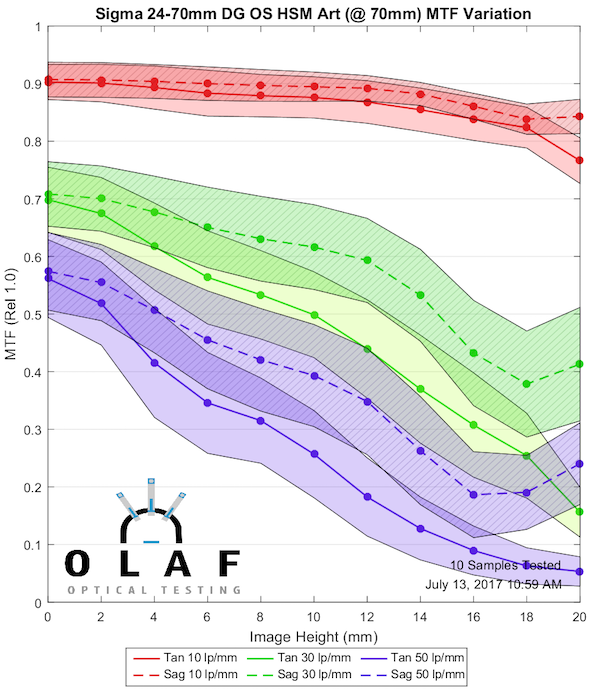

Copy-to-Copy Variation

I can’t say this was great, honestly. At 24mm we have a nice, tight range but things get a bit random at both 50mm and 70mm. Overall I’d call this better than average at 24mm and a little below average at 50mm and 70mm.
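To make "tight" versus "a bit random" concrete, here is a small sketch that compares the spread of per-copy results at two focal lengths using a sample standard deviation. It is only an illustration: the function name and the numbers are made up, and this is not how the variation graphs in this post are actually computed.

```python
from statistics import stdev

def copy_spread(per_copy_mtf):
    """Sample standard deviation of one MTF value across copies.

    A smaller number means the copies behave more alike.
    """
    return stdev(per_copy_mtf)

# Hypothetical per-copy values at one frequency (invented numbers).
tight = [0.70, 0.71, 0.69, 0.70, 0.72]      # copies cluster together
scattered = [0.70, 0.60, 0.78, 0.66, 0.74]  # copies wander around

tight_spread = copy_spread(tight)
scattered_spread = copy_spread(scattered)
```

The absolute values mean little on their own; the comparison between focal lengths is the useful part.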

24mm

Olaf Optical Testing, 2017

50mm

Olaf Optical Testing, 2017

70mm

Olaf Optical Testing, 2017

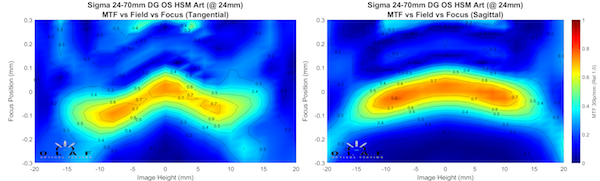

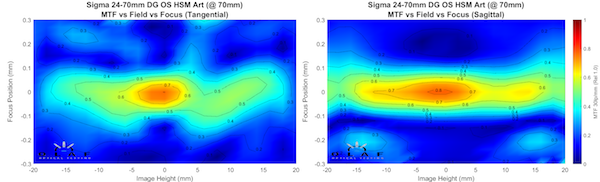

Field of Focus Curvature

Please don’t mistake this for distortion measurements, as someone did a couple of weeks ago.

24mm

There’s a gentle curve at 24mm in the sagittal field, with the tangential field curving more severely.

Olaf Optical Testing, 2017

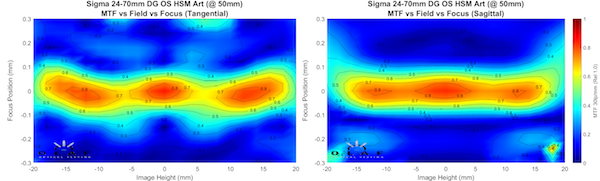

50mm

At 50mm the sagittal field is perfectly flat with the tangential field reversing curvature into a mild mustache pattern.

Olaf Optical Testing, 2017

70mm

At 70mm the sagittal field remains flat. The tangential field, well, we had the expectations setting a little high on our bench and the curve really didn’t resolve well enough for us to say anything clear about it. Maybe a mustache. Maybe who cares.

Olaf Optical Testing, 2017

Comparisons

Well, the charts are nice and all, but it’s always good to have comparisons. I’ve carefully selected the ones I think are appropriate and avoided the ones you wanted to see. It’s not that I’m purposely cruel, wait, yes it is.

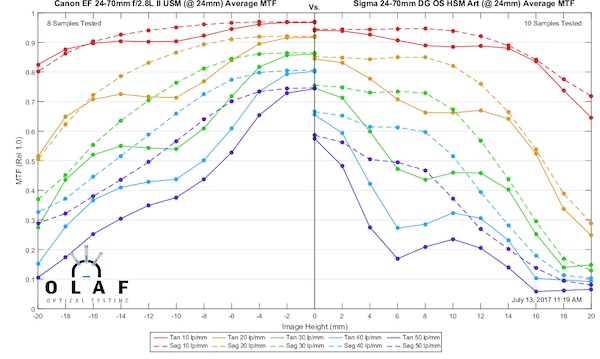

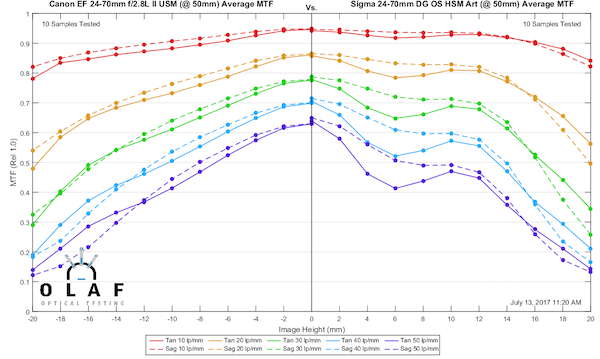

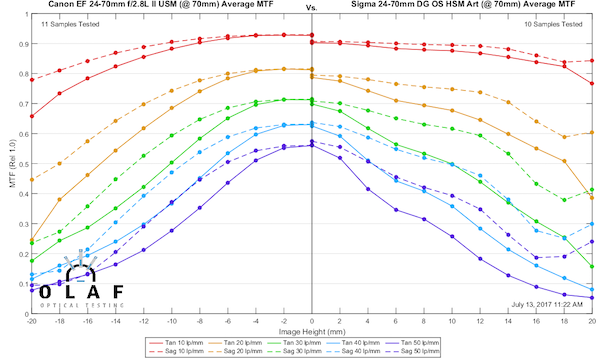

Sigma 24-70mm f/2.8 Art vs Canon 24-70mm f/2.8 Mk II

The Canon 24-70 f/2.8 L Mk II is about as good as it gets for zooms in this range.

24mm

The Canon is at its best at 24mm and the Sigma gets pretty beaten up here.

Olaf Optical Testing, 2017

50mm

At 50mm the story is a little different. The Sigma is at its best at 50mm and the Canon has dropped off a bit. In the center things are completely even. The Canon is just a little bit better in the middle of the field. So if you want to compare your new Sigma Art to your buddy’s Canon, try to do it at 50mm.

Olaf Optical Testing, 2017

70mm

Both lenses have fallen off a bit at 70mm. The Canon is a little better here but not as dramatically better as it was at 24mm.

Olaf Optical Testing, 2017

Sigma 24-70mm f/2.8 Art vs Tamron 24-70mm Di VC

This is probably a more reasonable comparison: the two image-stabilized third-party zooms. The Tamron G2 version will be out soon and is expected to be better, but we don’t have MTF tests on it. Because soon is not the same as now.

24mm

At 24mm, the Tamron is clearly a bit better.

Olaf Optical Testing, 2017

50mm

The Sigma again shows it is at its best at 50mm, and particularly away from center, it is a little better than the Tamron.

Olaf Optical Testing, 2017

70mm

At 70mm the Sigma is better than the Tamron, which is clearly weakest at 70mm.

Olaf Optical Testing, 2017

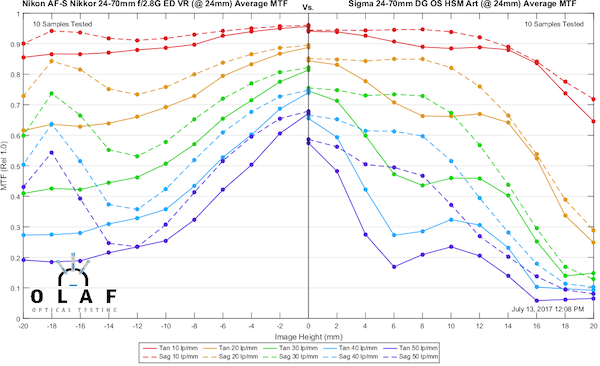

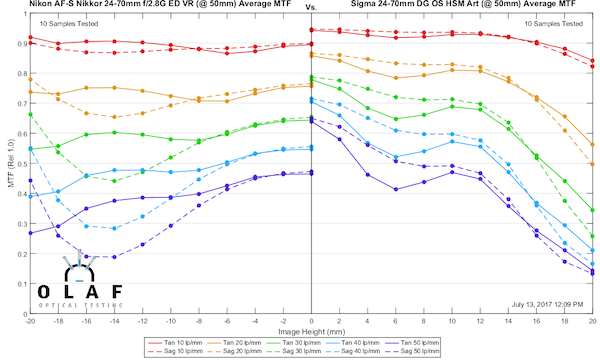

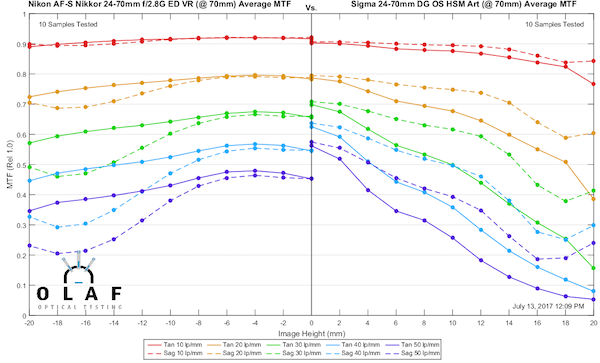

Sigma 24-70mm f/2.8 Art vs Nikon AF-S 24-70mm f/2.8 ED VR

Nikon has a different emphasis in their 24-70, giving up some center sharpness in exchange for good sharpness across the entire field.

24mm

The pattern is familiar: at 24mm the Sigma just isn’t as good.

Olaf Optical Testing, 2017

50mm

At 50mm, though, the Sigma is clearly sharper in most of the frame. This is the weak focal length for the Nikon and the strongest range for the Sigma. In the outer 1/3, though, the Nikon is a little sharper.

Olaf Optical Testing, 2017

70mm

At 70mm the Sigma has better sharpness at the higher frequencies, while the Nikon is smoother away from center.

Olaf Optical Testing, 2017

Conclusion

I’ll admit I’ve been a bit of a Sigma Fanboy lately. The only thing better than aggressively trying new things is aggressively trying new things and making them awesome and that’s what Sigma has been doing. But I’m not a big fan of this lens. This is an adequate lens, but nothing more than that.

I’d probably feel better about it if it didn’t have ‘Art’ on the label. I’ve come to recognize Sigma Art to mean ‘as good or better than any other lens in that focal length, even when the others cost way more.’ This lens I would describe as adequate overall. It’s weak at 24mm and good (but not awesome) at the longer parts of the zoom range.

If it didn’t say Art on the side and cost a few hundred dollars less, I’d probably be less disappointed. If I was being snarky, I’d say they left the “F” off of Art on this one. But I’m trying to be less snarky these days so I won’t say that. Or at least won’t say it again.

The Sigma 24-70mm f/2.8 Art Series’ better performance at the long end may appeal to people who already have 24mm covered with a good wide-angle lens. If you use your 24-70 f/2.8 mostly at 50 and 70mm then the weakness at 24mm may not bother you much.

I think most people considering this lens are going to wait to evaluate the Tamron G2. If the Sigma price falls significantly it may be a more attractive option, but right now I can’t see a strong reason to make it your 24-70 choice. It’s not a bad lens, just not an Art lens, really.

Roger Cicala and Aaron Closz, with the invaluable assistance of hard-working intern Anthony Young

Lensrentals.com

July, 2017

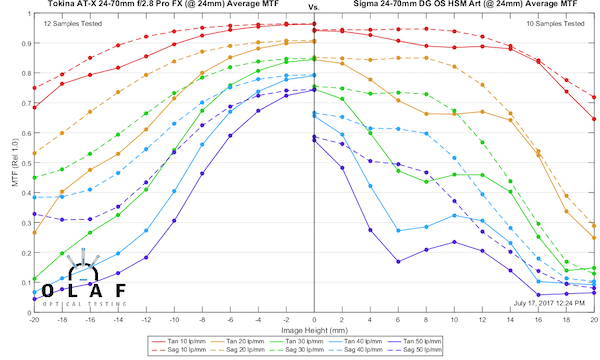

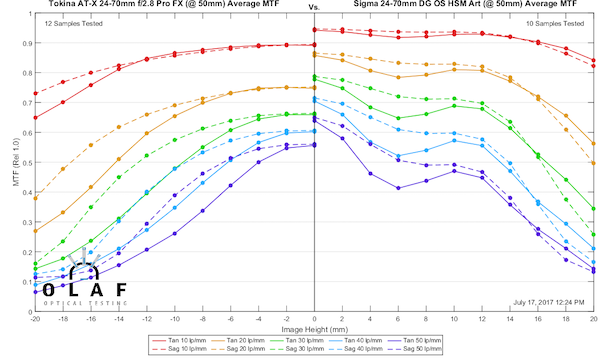

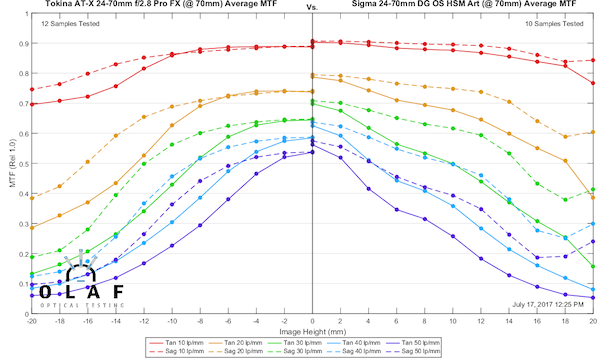

Addendum: As requested, comparison to the Tokina 24-70 f/2.8

24mm

Olaf Optical Testing, 2017

50mm

Olaf Optical Testing, 2017

70mm

Olaf Optical Testing, 2017

Author: Roger Cicala

I’m Roger and I am the founder of Lensrentals.com. Hailed as one of the optic nerds here, I enjoy shooting collimated light through 30X microscope objectives in my spare time. When I do take real pictures I like using something different: a medium format camera, a Pentax K1, or a Sony RX1R.