Notes on Lens and Camera Variation

A funny thing happened when I opened Lensrentals and started getting 6 or 10 copies of each lens: I found out they weren’t all the same. Not quite. And each of those copies behaved a bit differently on different cameras. I wrote a couple of articles about this: “This Lens is Soft and Other Myths” talked about the fact that autofocus microadjustment would eliminate a lot, but not all, of the camera-to-camera variation for a given lens. “This Lens is Soft and Other Facts” talked about the inevitable variation in mass producing any product, including cameras and lenses: that there must be some real difference between any two copies of the same lens or camera.

A lot of experienced photographers and reviewers noted the same things and while we all talked about it, it was difficult to use words and descriptions to demonstrate the issue.

And Then Came Imatest

We’ve always had a staff of excellent technicians that optically test every camera and lens between every rental. But optical testing has limitations: it’s done by humans and involves judgement calls. So after we moved and had sufficient room, I spent a couple of months investigating, buying, and setting up a computerized system to allow us to test more accurately. We decided the Imatest package best met our needs and I’ve spent most of the last two months setting up and calibrating our system (Thank you to the folks at Imatest and SLRGear.com for their invaluable help).

It has already proven successful for us, as it is more sensitive and reproducible than human inspection. We now find some lenses that aren’t quite right, but that were perhaps close enough to slip past optical inspection. Plus the computer doesn’t get headaches and eyestrain from looking at images for 8 to 10 hours a day.

Computerized testing has also given me an opportunity to demonstrate the amount of variation between different copies of lenses and cameras. We have dozens (in some cases dozens of dozens) of copies of each lens and camera. While we don’t perform the multiple, critically exact measurements that a lens reviewer does on a single copy, performing the basic tests we do on multiple copies demonstrates variation pretty well.

Lens-to-Lens Variation

We know from experience that if we mount multiple copies of a given lens on one camera, each one is a bit different. One lens may front focus a bit, another back focus. One may seem a bit sharper close up, another is a bit sharper at infinity. But most are perfectly acceptable (meaning the variation between different copies is a lot smaller than the variation you’re likely to detect in a print). I can tell you that, but showing you is more effective.

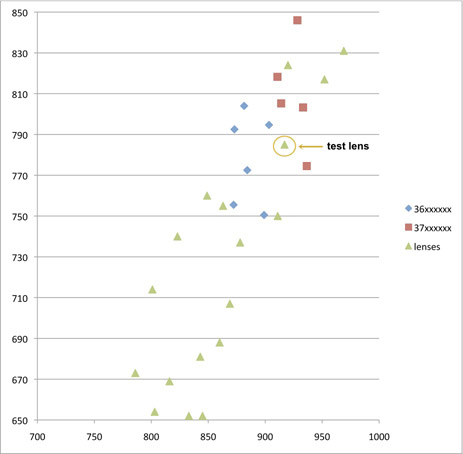

Here’s a good illustration, a run of 3 different 100mm lenses, all of which are known to be quite sharp: the original Canon 100mm f/2.8 Macro, the newer Canon 100mm f/2.8 IS L Macro, and the Zeiss ZE 100mm Makro. The chart shows the highest resolution (at the center of the lens) across the horizontal axis, and the weighted average resolution of the entire lens on the vertical axis, both measured in line pairs / image height. All were taken on the same camera body, and the best of several measurements for each lens copy is the one graphed.

It’s pretty obvious from the image there is variation among the different copies of each lens type. I chose this focal length because there was a bad lens in this group, so you can see how different a bad lens looks compared to the normal variation of good lenses. As an aside, the bad lens didn’t look nearly as bad as you would think: if I posted a small JPG taken with it, you couldn’t tell the difference between it and the others. Blown up to 50% in Photoshop, though, the difference was readily apparent.
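For anyone who wants to put a number on "bad copy versus normal variation" with their own measurements, here is a minimal sketch. It is not the procedure used for these charts: the function name `flag_outliers`, the median/MAD rule, the 3.5 cutoff, and the sample readings are all assumptions made up for illustration.

```python
from statistics import median

def flag_outliers(scores, threshold=3.5):
    """Return the indices of copies that score far below the rest of the group.

    Uses a modified z-score built on the median absolute deviation (MAD),
    so one bad copy does not distort the yardstick it is measured against.
    The 3.5 threshold is a common convention, not a value from the article.
    """
    med = median(scores)
    mad = median([abs(s - med) for s in scores])
    if mad == 0:
        return []  # every copy is (nearly) identical; nothing to flag
    flagged = []
    for i, score in enumerate(scores):
        z = 0.6745 * (score - med) / mad
        if z < -threshold:  # only unusually LOW resolution counts as "bad"
            flagged.append(i)
    return flagged

# Invented center-resolution readings (line pairs / image height), one per copy.
center_resolution = [905, 880, 915, 890, 925, 870, 900, 610]
print(flag_outliers(center_resolution))  # -> [7]
```

The good copies differ from each other by a few percent, while the flagged copy sits far outside that cluster, which is the same pattern the chart shows visually.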

My point, though, is while the Canon 100mm f/2.8 IS L lens is a bit sharper than the other two on average, not every copy is. If someone was doing a careful comparative review there’s a fair chance they could get a copy that wasn’t any sharper than the other two lenses. I think this explains why two careful reviewers may have slightly different opinions on a given lens. (Not, as I see all too often claimed on various forums, because one of them is being paid by one company or another. Every reviewer I know is meticulously honest.)

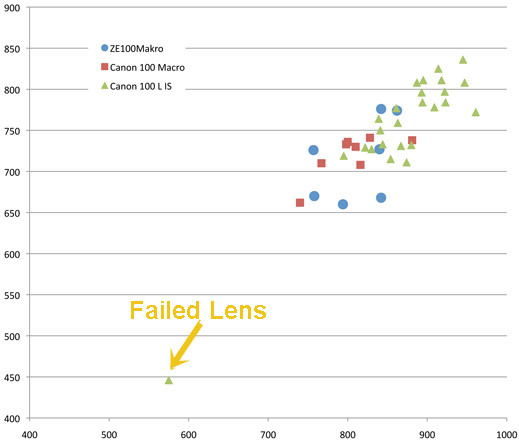

Autofocus Variation

We all know camera autofocus isn’t quite as exact as we wish. (Personally, after investigating how autofocus works for this article, I’m amazed that it’s as good as it is, but I still complain about it as much as you do.) But when I started setting up our testing, I was hoping we could use autofocus to at least screen lenses initially. The results were rather interesting. Below is the same type of graph for a set of Canon 85mm f/1.8 lenses I tested using autofocus. Notice I again included a bad copy as a control.

(For those of you who are out there thinking “I want one of those top 3 copies, not one of the other ones”, and I know some of you are, keep reading.)

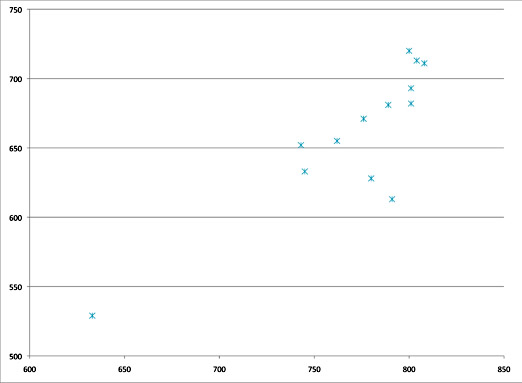

Then I selected one copy that had average results (Copy 7), mounted it to the test camera, and took 12 consecutive autofocus shots with it. Between each shot I’d either manually turn the focus ring to one extreme or the other, or turn the camera off and on, but nothing else was moved. (By the way, for testing the camera is rigidly mounted to a tripod head, mirror lock up used, etc.)

In the graph below, overlaid on the original graph, the dark blue diamond shapes are the 12 autofocus results from one lens on one camera. Then I took 6 more shots, using live view 10x manual focus instead of autofocus, again spinning the focus ring between each shot. The MF shots are the green diamonds. I should also mention that when I take multiple shots without refocusing the results are nearly identical – that would be a dozen blue diamonds all touching each other. What you’re seeing is not a variation in the testing setup, it’s variation in the focus.

It’s pretty obvious that the spread of sharpness of one lens focused many times is pretty similar to the spread of sharpness of all the different copies tested once each. It’s also obvious that live view manual focus was more accurate and reproducible than autofocus. Of course, that’s with 10X live view, a still target, and a nice star chart to focus on and all the time in the world to focus correctly. No surprise there, we’ve always known live view focusing was more accurate than autofocus.
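If you'd rather compare those spreads as numbers than eyeball them, a rough sketch follows. The helper `spread` and all three lists of readings are placeholders invented for illustration; they are not the measurements behind the graph.

```python
from statistics import mean, stdev

def spread(values):
    """Summarize a set of resolution readings (e.g. line pairs / image height)."""
    return {
        "mean": mean(values),
        "stdev": stdev(values),              # sample standard deviation
        "range": max(values) - min(values),  # best reading minus worst reading
    }

# Placeholder readings, invented for illustration only.
copies_once = [820, 845, 860, 835, 870, 810, 850]  # several copies, one AF shot each
one_copy_af = [815, 850, 865, 830, 840, 855, 825]  # one copy, refocused with AF each time
one_copy_mf = [858, 862, 865, 860, 866, 861]       # same copy, live view manual focus

for label, data in [("copies", copies_once),
                    ("AF repeats", one_copy_af),
                    ("MF repeats", one_copy_mf)]:
    s = spread(data)
    print(f"{label:>10}: mean={s['mean']:.0f} stdev={s['stdev']:.1f} range={s['range']}")
```

If the "AF repeats" spread comes out about as large as the "copies" spread, a single autofocus shot per lens can't separate a slightly better copy from a lucky focus, which is the point of the paragraph above.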

One aside on the autofocus topic: because it would be much quicker for testing, I tried the manual versus autofocus comparison on a number of lenses. I won’t bore you with 10 more charts, but what I found was that older lens designs (like the 85 f/1.8 above) and third-party lenses had more autofocus variation. Newer lens designs, like the 100mm IS L, had less autofocus variation (on 5DII bodies, at least – this might not apply to other bodies).

Oh, and back to the people who wanted one of the top 3 copies: when I tested two of those repeatedly, I never again got numbers quite as good as those first numbers shown on the graph. The repeated images (including manual focus) were more towards the center of the range, although they did stay in the top half of the range, at least on this camera, which provides me an exceptionally skillful segue into the next section. (My old English professor would be proud. Not of my writing skills, but simply that I used segue in a sentence.)

Camera to Camera Variation

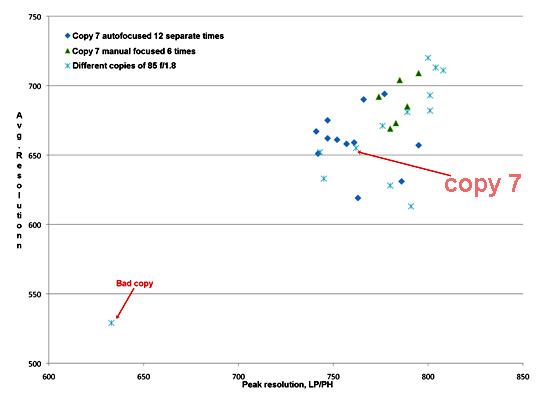

Well, we’ve looked at different lenses on one camera body, but what happens if we use one lens and change camera bodies? I had a great chance to test that when we got a shipment of a dozen new Canon 5D Mark II cameras in. First, I tested a batch of Canon 70-200 f2.8 IS II lenses on one camera, using 3 trials of live view focusing on each. The best results for each lens are shown as green triangles.

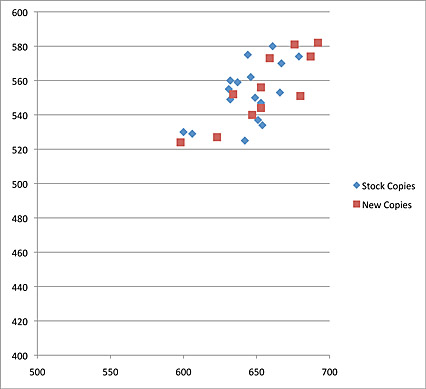

Then I took one of those lenses (mounted to the testing bench by its tripod ring) and repeated the series on 11 of the new camera bodies. The blue diamonds and red boxes this time each represent a different camera on the same lens. (4 test shots were taken with each camera, and while the best is used, each camera’s four shots were almost identical.) Obviously the same lens on a different body behaves a little differently.

A group of Canon 70-200 f2.8 IS II lenses tested on one body (green triangles) and one of those lenses tested on 11 brand new Canon 5DII bodies (red squares and blue diamonds).

I separated the cameras into two sets because we received cameras from two different serial number series on this day. I don’t know that conclusions are warranted from this small number, but I found the difference intriguing. And maybe worth some further investigation.
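One way to check whether a difference between two small groups is more than chance is an exact permutation test. The sketch below is only an illustration of that idea under stated assumptions: the function name `permutation_p_value`, the 5/6 split, and the readings are hypothetical, not the results from these 11 cameras.

```python
from itertools import combinations
from statistics import mean

def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference in group means.

    Tries every way of splitting the pooled readings into groups of the
    original sizes and reports the fraction of splits whose mean difference
    is at least as large as the one actually observed.
    """
    pooled = list(group_a) + list(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    n_a = len(group_a)
    at_least_as_extreme = 0
    total = 0
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        # Small tolerance so floating-point rounding does not hide exact ties.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            at_least_as_extreme += 1
        total += 1
    return at_least_as_extreme / total

# Hypothetical readings for two serial number series (invented values).
series_1 = [842, 850, 838, 847, 853]
series_2 = [861, 858, 870, 855, 866, 863]
print(permutation_p_value(series_1, series_2))
```

With only 11 bodies there are just 462 possible splits, so the test runs instantly. Even a small p-value would only say the two groups differ on this one lens on this one day, which is why further investigation would still be needed.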

Summary

Notice I don’t say conclusion, because this little post isn’t intended to conclude anything. It simply serves as an illustration showing visually what we all (or at least most of us) already know:

- Put different copies of the same lens on a single camera and each will vary a bit in resolution.

- Put different copies of the same camera on a single lens and each will vary a bit in resolution.

- Truly bad lenses aren’t a little softer, they are way softer.

- Autofocus isn’t as accurate as live view focus, at least when the camera has not been autofocus microadjusted to the lens.

All of this needs to be put in perspective, however. If you go back to the first two charts, you’ll notice the bad copies are far different than the shotgun pattern shown by all the good copies. And when we looked at those two bad copies, we had to look fairly carefully (looking at 50% jpgs on the monitor) to see they were bad.

The variation among “good copies” could probably be detected by some pixel peeping. For example, if you examined the images I took on my test camera with the best and worst Canon 100 f2.8 IS L lenses, you could probably see a bit of difference when looking at them side-by-side. But if I handed you the two lenses and you put them on your camera, they’d behave slightly differently and the results would be different.

So for those of you who spend your time worried about getting “the sharpest possible lens”, unfortunately sharpness is rather a fuzzy concept.

Roger Cicala

Lensrentals.com

October, 2011

Addendum:

Matt’s comment made me realize I hadn’t talked about one obvious variable in this little post: how much of the variation is caused by the fact that these are rental lenses that have been used? The answer (at least for Canon prime lenses) is not much, if at all. For example the graph below compares a set of brand new Canon 35mm f/1.4 lenses tested the day we received them (red boxes) to a set taken off of the rental shelves (blue diamonds).

Please note I make this statement only for Canon prime lenses. Zooms are more complex and I see at least one zoom lens that doesn’t seem to be aging well, but until I get more complete numbers to confirm what I think I’m seeing I won’t say more. I see no reason to expect other brands to be different, but at this point we’ve only been able to test Canon lenses (these tests are pretty time consuming and we have a lot of lenses).
