Sigma 135mm f/1.8 Art MTF Charts (and a Look Behind the Curtain)

I know there are a lot of people who want to see what the MTF charts on the new Sigma 135mm DG HSM f/1.8 Art lens look like. We just did our initial screening test on ten copies, so I’ll show you what we found, and give you some comparisons to some other 135mm lenses. This also presented a good chance to show you something I’ve talked about but haven’t really shown you: the process we use in-house to set standards for new lenses.

Now, as always, when I show you some stuff from behind the curtain, you have to swear the Solemn Lensrentals Anti-Fanboy Oath, because with great power comes great responsibility. So you agree you won’t make stupid claims that you’re worried about copy-to-copy variation of this particular lens because these results are GOOD. If I showed you what we go through with bad ones you’d lose your mind; you aren’t ready for bad yet. So everyone say thank you to Sigma for making a lens with good enough quality control that I’m willing to show you the process. In this case we ended up testing 11 lenses to use 10 for our database. This isn’t unusual. There are many brand-name lenses where we test 16 or 17 copies before we’re comfortable and a couple of cases where I didn’t accept standards until we’d tested 35 or 40 copies. We hate those lenses very, very much.

So if you’re interested in the process we go through, I’ll use this post to demonstrate it a bit. If you’re not, you can skip the ‘how and why I do this’ section.

Let’s Start with How and Why I Do These Tests

We start standards testing by getting ten brand new copies of the lens. Then I set up the optical bench for the lens and on each copy run a distortion test and run the MTF at four different rotations, so we get a side-to-side, top-to-bottom, and two corner-to-corner tests for each lens.

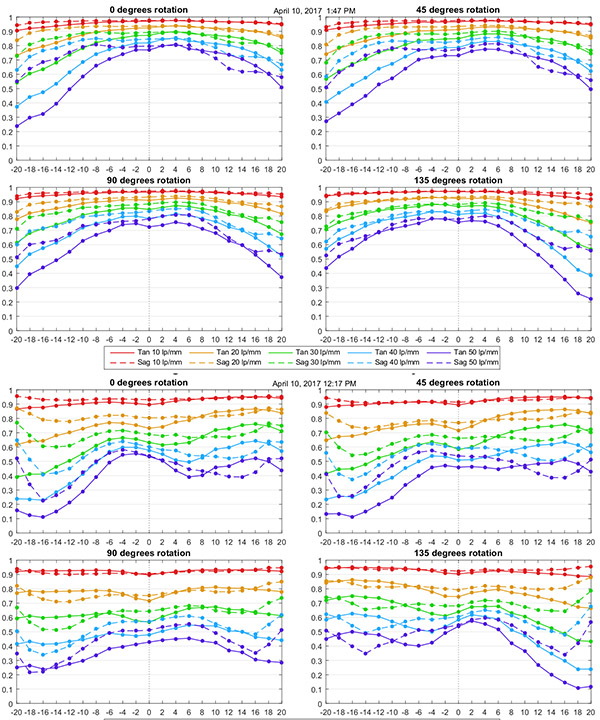

Here are the results of the first two lenses. You don’t have to be an MTF wizard to figure out the four graphs on top, from the first lens, are different than the four on the bottom, from the second.

Olaf Optical Testing, 2017

Most of you probably think, the second one is obviously a bad copy, because jumping to conclusions on inadequate data is fun and easy. But in the big picture of all lenses, the second one isn’t obviously horrid even though it is obviously not as sharp. It’s fairly even with a weak corner, but the weakness only shows at higher frequencies, which means it’s going to be subtle. The red (10 line pairs) lines aren’t dramatically worse even in the bad corner. There’s no apparent decentering. If you bought this lens and tested it you might, or might not, think it wasn’t as sharp as it should be and if you were really, really careful in your testing might detect that bad corner. If you had both lenses to test side-by-side you’d prefer the first one for certain.
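To make the "weak corner, no apparent decentering" reading a little more concrete, here is a minimal sketch of how the four rotation scans for one copy could be compared. It is not the bench software. The angles, the function names, and every number are made up for illustration; each scan is assumed to be a list of MTF values at one frequency, read from one edge of the image, through the center, to the opposite edge.

```python
# Assumed bench angles (degrees) for the four rotations. The labels follow
# the post; the specific angles are illustrative only.
ROTATIONS = {
    0: "side-to-side",
    45: "corner-to-corner",
    90: "top-to-bottom",
    135: "corner-to-corner",
}


def scan_asymmetry(scan):
    """Difference between the two ends of one scan (last reading minus first)."""
    return scan[-1] - scan[0]


def weakest_end(scans):
    """Return (angle, end, mtf) for the lowest edge reading across all scans."""
    readings = []
    for angle, scan in scans.items():
        readings.append((scan[0], angle, "start"))
        readings.append((scan[-1], angle, "end"))
    mtf, angle, end = min(readings)  # tuples sort by MTF value first
    return angle, end, mtf


# Hypothetical MTF values for a single copy: edge, mid-field, center,
# mid-field, opposite edge.
scans = {
    0: [0.62, 0.74, 0.80, 0.75, 0.63],
    45: [0.58, 0.73, 0.80, 0.74, 0.59],
    90: [0.63, 0.75, 0.80, 0.74, 0.62],
    135: [0.57, 0.72, 0.80, 0.70, 0.45],
}

for angle, scan in scans.items():
    label = ROTATIONS[angle]
    print(f"{angle:>3} deg ({label}): asymmetry {scan_asymmetry(scan):+.2f}")
print("weakest end:", weakest_end(scans))
```

In this invented data, three of the four scans are nearly symmetric and only one end of one corner-to-corner scan sags, which is the kind of pattern described above as a single weak corner. Large asymmetry on the side-to-side scan would point toward the side-to-side variation more typical of a truly bad copy.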

But at this point, I don’t know if this lens just varies a bit, and we’re seeing the range of variation here. Or is the second one an outlier? That’s why we test multiple copies and that’s also why we don’t jump to conclusions; much. So I proceeded to test the next 8 copies and other than glancing at the MTF charts to make sure they were valid, I tried not to pay much attention to the data at this point. Once they were done, I ran our 30 lp/mm full frame displays for all 10 lenses and compared them visually.

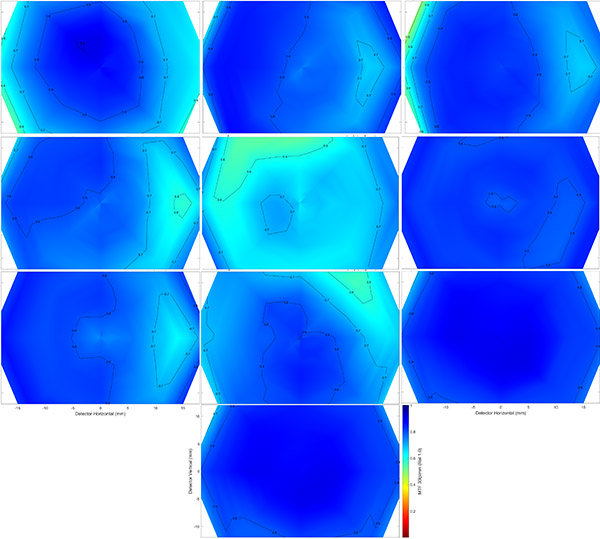

Olaf Optical Testing, 2017

If you haven’t read our blog lately, there are in-depth discussions of what these graphs show in several recent posts, but just consider it a thumbnail of overall sharpness across the lens field with dark blue being the sharpest. That second lens I tested is the one in the center and clearly not as good as the others, at least by this visual inspection. At this point, I begin to think it’s falling into the ‘bad copy’ range, rather than an acceptable variation of the lens range.

But to be more certain, we run each of the lenses compared to the variation of all the other lenses. When I compare this questionable lens (the lines in the graph below) to the range of the other lenses, it becomes clear it falls outside the range. So it was eliminated from the testing group and replaced with another lens, and the results of that group are what I will publish and what becomes our in-house standard for this lens.

You may be interested to know that I gave all 10 of these lenses to one of our more experienced techs and asked him to test them on a high resolution test chart. He said they all passed. When I told him one was bad and to check again, he did identify this one, but described it as ‘just a bit softer, but still fine’. I mention this because part of what you’re seeing here is that this lens is really, really good. Even the worst copy looked OK optically to a very experienced tech. It’s just not quite as good as the others. This is a bit unusual, since a true bad copy usually has some signs of side-to-side variation or decentering, and this lens really didn’t. You could make an argument that this lens is actually OK and I’m just overly picky, and you might be right.

Olaf Optical Testing, 2017

OK, this concludes the ‘why and how we do this’ portion of today’s program. Now let’s look at what I found for our set of 10 copies of the Sigma 135mm f/1.8 Art.

Sigma 135mm f/1.8 Art MTF Results

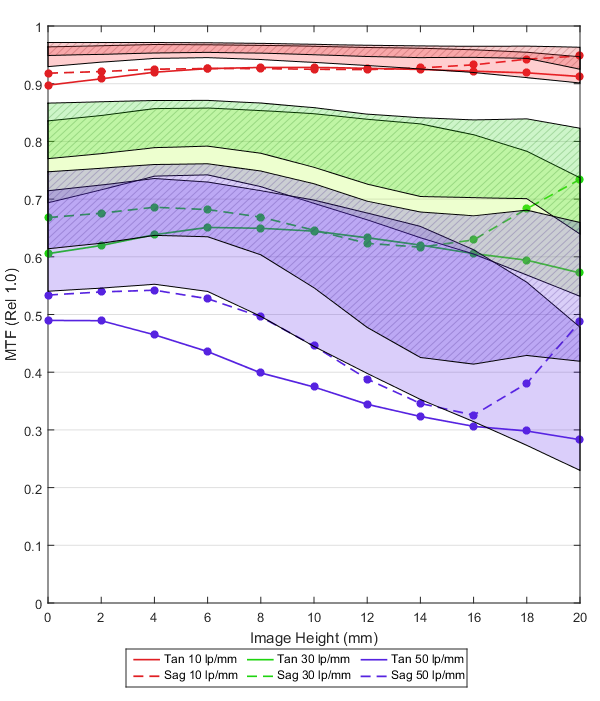

Olaf Optical Testing, 2017

The more MTF savvy among you will notice the center astigmatism that I discussed in our previous blog post on the Tamron 70-200mm zoom. This is partly because with longer lenses the optical center may be a bit off axis from the geometrical center and rotation causes this effect. That is not a problem with the lenses, more of a testing artifact. There also were a couple of copies where there was a bit of central astigmatism that resolved off axis.

The MTF results are excellent. There is superb resolution both at lower frequencies (contrast) and higher frequencies (fine detail). There is a small astigmatism-like separation between sagittal and tangential lines which may be true astigmatism or lateral color, but it’s minor. The MTF is maintained impressively well all the way to the corners.
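For anyone who wants to put a number on that sagittal/tangential separation, a minimal sketch follows. The function name and the sample values are invented for illustration. Note that a gap measured this way cannot tell true astigmatism from lateral color; it only measures how far apart the two lines are.

```python
def st_separation(sagittal, tangential):
    """Absolute sagittal/tangential MTF gap at each field position."""
    if len(sagittal) != len(tangential):
        raise ValueError("curves must cover the same field positions")
    return [abs(s - t) for s, t in zip(sagittal, tangential)]


# Hypothetical MTF values at one frequency, center to edge.
sagittal = [0.80, 0.79, 0.77, 0.74, 0.70]
tangential = [0.80, 0.77, 0.74, 0.71, 0.68]

gaps = st_separation(sagittal, tangential)
print(f"largest separation: {max(gaps):.2f}")
```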

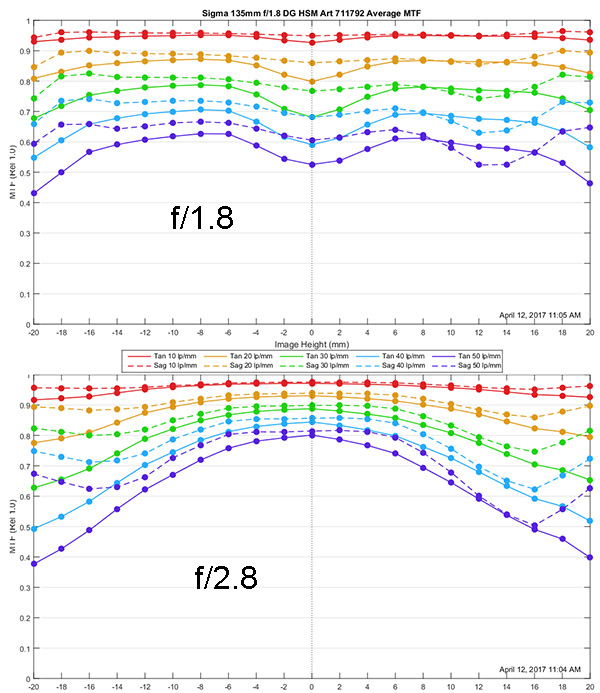

Everyone always wants to know about stop-down performance and I always explain I don’t have time to redo the test at every aperture, but I’ll try a bit of a compromise here. I’ve taken one of the ‘about average’ copies and run it at f/2.8. Here you can see that copy’s MTF both wide open and stopped down to f/2.8. It’s really a lot sharper at f/2.8. Who would have thought.

Olaf Optical Testing, 2017

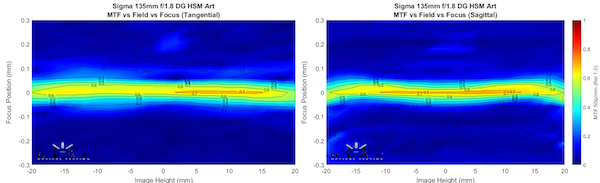

Finally, here are the field of focus plots for both sagittal and tangential MTFs. Like a 135mm lens should be, these are nearly perfectly flat.

Olaf Optical Testing, 2017

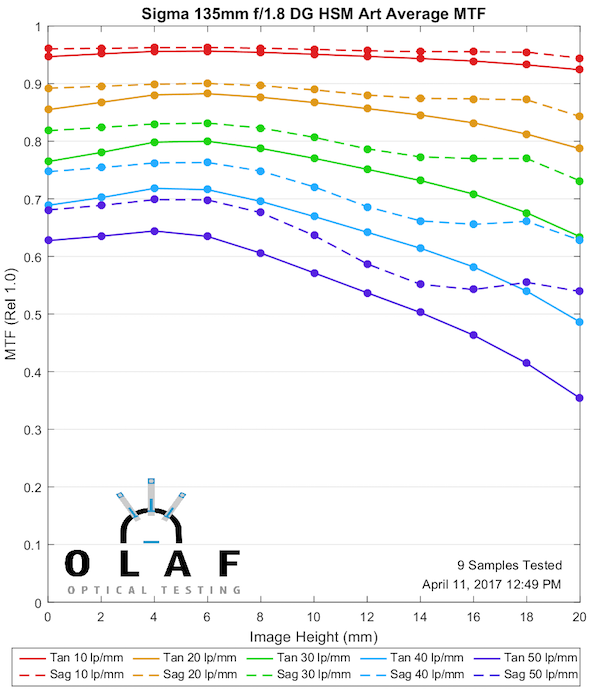

And Finally Some Comparison Plots

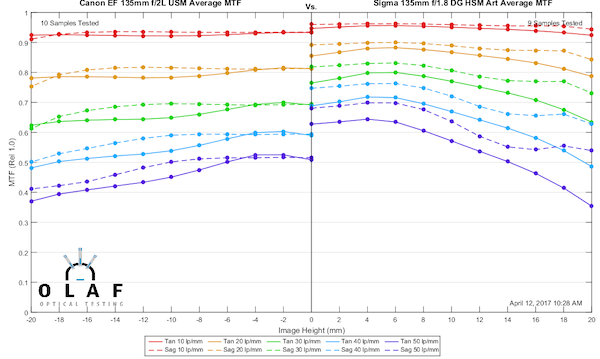

First we’ll compare the Sigma 135mm f/1.8 Art to the venerable Canon 135mm f/2 L, a lens that I unabashedly have claimed as my favorite lens for over a decade. The Sigma’s MTF is clearly better, even though it’s at a slightly wider aperture. Does that mean I’m giving up my tried and true 135mm? Well, I don’t know, but it means I’m at least taking the Sigma for a tryout. That’s really a big difference. But I do love my Canon and there’s more to images than MTF, and the Sigma is kind of chunky and actually a bit more expensive and . . . . damn, that Sigma MTF looks good.

Olaf Optical Testing, 2017

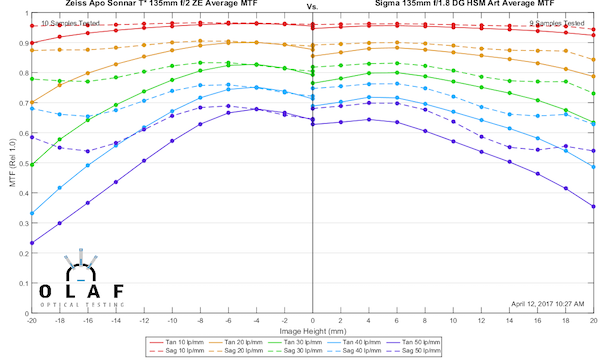

The next obvious comparison is the Sigma 135mm f/1.8 Art with the Zeiss 135mm f/2 Apo Sonnar. Things are a bit more even here, although again keep in mind the Sigma is being tested at a slightly wider aperture. The difference between the two is pretty minor, although the Sigma may be a little better at the edges. Price is about the same here, so the difference is going to be more about how they render, and if you want autofocus with your 135mm.

Olaf Optical Testing, 2017

One last reasonable comparison is to the Rokinon 135mm f/2 — actually, in this case, the Rokinon 135mm T2.2 Cine DS Lens because that’s what I have data on, but it’s the same optics. This one is a close call. Of course, the Rokinon is a lot cheaper. On the other hand, the Sigma autofocuses much better and has better build quality. Still, I want to give Rokinon proper praise for making a superb lens at a superb price.

Olaf Optical Testing, 2017

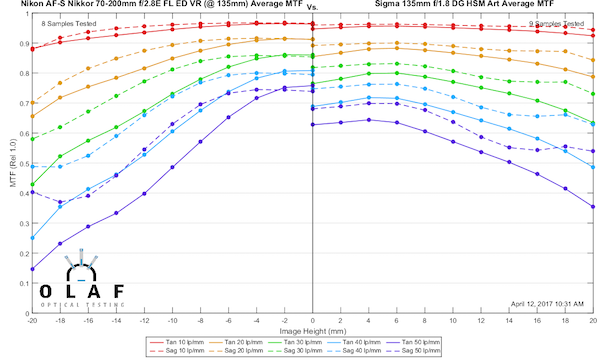

I’ll give you one more direct comparison; one that is meaningless except that I have been harping on ‘zooms aren’t primes’ lately. This is the Sigma 135mm Art at f/1.8 against the best zoom that exists at 135mm, the Nikon 70-200 f/2.8E FL ED. Even at a dramatically wider aperture, the Sigma is better away from center. If you scroll back up to the f/2.8 MTF chart I posted above, at f/2.8 the Sigma is just completely better. Zooms are convenient, very good, and very useful lenses. But they aren’t primes, and they never will be.

Olaf Optical Testing, 2017

So What Did We Learn Today?

Well, testing for sample variation has a bit of judgment and a lot of math in it, and there’s a reason why I (with few exceptions) insist on testing ten copies if I’m going to test.

We learned the Sigma 135mm f/1.8 has a really nice MTF curve, better than the Canon 135mm f/2 L. It’s as good at f/1.8 as the Zeiss or Rokinon are at f/2, and it autofocuses, which they don’t. Whether you want it or not is going to depend on a lot of other factors, but the MTF curves are promising. And we learned, to my sadness, that Father Time catches up with everything, including my favorite Canon 135mm f/2.0, which is showing its age a bit when compared to these newcomers.

And we learned that Roger is never going to shut up about ‘zooms aren’t primes’.

Roger Cicala and Aaron Closz

Lensrentals.com

April, 2017

Author: Roger Cicala

I’m Roger and I am the founder of Lensrentals.com. Hailed as one of the optic nerds here, I enjoy shooting collimated light through 30X microscope objectives in my spare time. When I do take real pictures I like using something different: a Medium format, or Pentax K1, or a Sony RX1R.
