
Do Recent Advancements in Upscaling Make the Megapixel Race Obsolete?

If you’ve been in the photography world for any amount of time over the last 15 years, you’ve probably heard the term ‘megapixel race’. Beginning in the early 2000s with the dawn of digital photography, the megapixel race was the competition among camera manufacturers, whether they made point-and-shoots, DSLRs, or smartphones, to show off how many megapixels their cameras offered.

In the early days of that race, megapixel count was considered the most important metric of a camera. With each marginal increase in resolution from one generation to the next, the ‘Nikon vs. Canon’ debates would reignite, and Camera A could be declared the better camera based solely on the resolution it could shoot. While I was once a soldier in this fight, I’ve long since lost the will to keep fighting, so for all I know, these silly internet debates are still going on. But let’s take this opportunity to ask the question: with AI and advancements in upscaling technology, does resolution matter anymore?

What Do Megapixels Mean Though?

Megapixels are a measure of a digital camera’s overall resolution. One megapixel literally means ‘one million pixels’, and together with a print resolution in dots per inch (DPI), the megapixel count determines how large the images that come from the camera can be reproduced. An individual pixel is a single square of color information, but when these pixels are arranged in a large grid, they form a mosaic, which then makes your image.
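To make that relationship concrete, here is a small Python sketch that converts a megapixel count into approximate pixel dimensions and the largest print those pixels cover at a given DPI. It assumes a 3:2 aspect ratio and a 300 DPI print target, both common choices but assumptions for illustration; real sensors vary (the GFX 100S, for example, is 4:3). The function names are illustrative only.

```python
import math


def pixel_dimensions(megapixels: float, aspect_w: int = 3, aspect_h: int = 2) -> tuple[int, int]:
    """Approximate width x height in pixels for a given megapixel count.

    Assumes a fixed aspect ratio (3:2 by default)."""
    total = megapixels * 1_000_000
    # Solve width * height = total with width / height = aspect_w / aspect_h.
    height = math.sqrt(total * aspect_h / aspect_w)
    width = height * aspect_w / aspect_h
    return round(width), round(height)


def print_size_inches(width_px: int, height_px: int, dpi: int = 300) -> tuple[float, float]:
    """Largest print, in inches, at the given DPI without resampling."""
    return width_px / dpi, height_px / dpi


for mp in (3, 24, 30):
    w, h = pixel_dimensions(mp)
    pw, ph = print_size_inches(w, h)
    print(f"{mp} MP -> {w} x {h} px -> {pw:.1f} x {ph:.1f} in at 300 DPI")
```

Under these assumptions, a 24 MP file works out to 6000 x 4000 pixels, or a 20 x 13.3 inch print at 300 DPI.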

Because of the law of diminishing returns, megapixels used to matter much more for image quality than they do now. The jump from 3 megapixels to 5 megapixels is a roughly 67% increase in pixel count, so image quality improved noticeably simply from the added detail captured. The jump from 24 megapixels to 26 megapixels, by comparison, is only about an 8% increase and far less significant.
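The diminishing returns are even steeper than the pixel-count percentages suggest, because megapixels measure area: the number of pixels along each side, which is what determines how much finer detail you can resolve, grows only with the square root of the pixel count. A short Python sketch of both numbers (function names are illustrative):

```python
import math


def pixel_count_gain(old_mp: float, new_mp: float) -> float:
    """Percentage increase in total pixel count."""
    return (new_mp / old_mp - 1) * 100


def linear_gain(old_mp: float, new_mp: float) -> float:
    """Percentage increase in pixels along each side of the image.

    Pixel count is an area, so linear resolution scales with its square root."""
    return (math.sqrt(new_mp / old_mp) - 1) * 100


for old, new in ((3, 5), (24, 26)):
    print(f"{old}->{new} MP: {pixel_count_gain(old, new):.1f}% more pixels, "
          f"{linear_gain(old, new):.1f}% more per side")
# 3->5 MP: 66.7% more pixels, 29.1% more per side
# 24->26 MP: 8.3% more pixels, 4.1% more per side
```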

AI And Upscaling Advancements

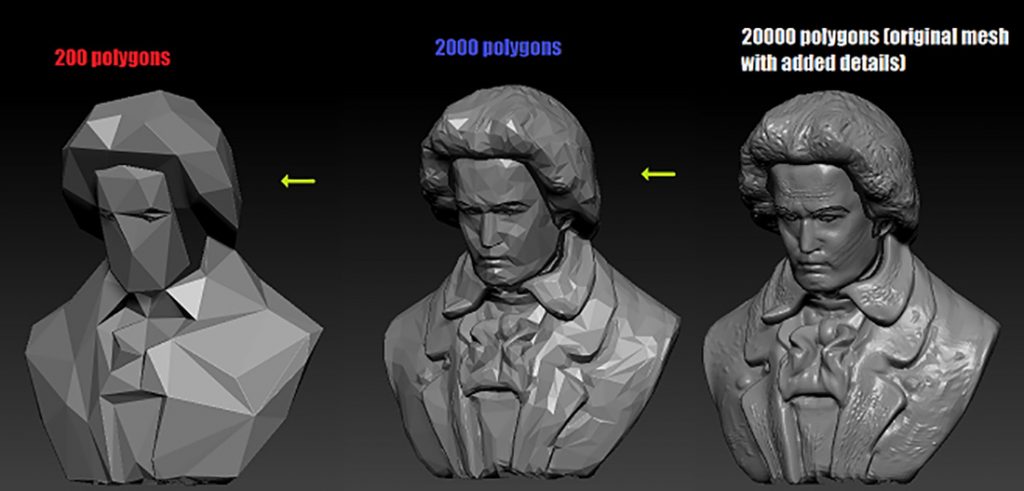

In recent years, upscaling has gotten significantly better, thanks to several advancements, from machine learning to the high level of detail that modern lenses can capture. Upscaling is the process of increasing the pixel dimensions of an image, which traditionally means stretching the existing pixels to fill a larger space than they were originally intended for. In the past, if you upscaled an image to, for example, double its resolution, the result would often look soft. Because you’re only stretching existing pixels over a larger area, any part of the image that lacks sharpness has that softness compounded, giving you an even greater loss of detail.

In the last few years, however, machine learning and other tools have made upscaling far more successful: these pieces of software analyze the image’s color data and rebuild the enlarged image in new ways that retain more sharpness. They don’t promise that you can take a 3-megapixel image from an archive drive and turn it into a 24-megapixel image that meets modern standards, but they do promise to help you rescue images that might otherwise be unusable because of their low resolution.
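The softness of traditional upscaling is easy to see in a minimal Python sketch of bilinear interpolation, one classic non-machine-learning method (not necessarily the exact one any particular program uses). Each new pixel is a weighted average of its four nearest neighbors in the original, so a crisp edge becomes a gradient instead of gaining detail. The image here is a hypothetical grayscale grid of 0 to 255 values.

```python
def bilinear_upscale(img: list[list[float]], factor: int) -> list[list[float]]:
    """Upscale a grayscale image (a list of rows) by an integer factor.

    Every output pixel is a weighted average of the four nearest source
    pixels, so no detail is added that wasn't already there."""
    src_h, src_w = len(img), len(img[0])
    out = []
    for y in range(src_h * factor):
        # Map the output pixel's center back into source coordinates, clamped to the image.
        sy = min(max((y + 0.5) / factor - 0.5, 0), src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0
        row = []
        for x in range(src_w * factor):
            sx = min(max((x + 0.5) / factor - 0.5, 0), src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0
            # Blend horizontally on the two nearest rows, then blend those vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A hard black-to-white edge, two pixels wide.
edge = [[0, 255], [0, 255]]
for row in bilinear_upscale(edge, 4):
    print([round(v) for v in row])
# Each row prints [0, 0, 32, 96, 159, 223, 255, 255]
```

The single sharp transition is now spread across four intermediate grays, which is exactly the softness described above.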

Testing Upscaling

So I decided to test these upscalers to see how good they’ve become. Those who follow my work know that I shoot predominantly in the studio, using the Fuji GFX 100S, a 102-megapixel medium format system that could be regarded as the current winner of the megapixel race. To test these upscalers, I’m going to take some of my full-resolution images, downscale them to roughly 30 megapixels, and then use each upscaler to bring them back up to 100 megapixels to see which one does the best job.
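Because megapixels measure area, downscaling to a target megapixel count means scaling each side by the square root of the pixel-count ratio. Here is a Python sketch of that step, assuming the GFX 100S’s native output of 11648 x 8736 pixels; the exact downscaled dimensions used in this test aren’t listed, so the numbers are illustrative, and the function name is hypothetical.

```python
import math


def resize_to_megapixels(width: int, height: int, target_mp: float) -> tuple[int, int]:
    """Dimensions that hit roughly target_mp megapixels while keeping the aspect ratio."""
    # Scale each side by the square root of the ratio between target and current area.
    scale = math.sqrt(target_mp * 1_000_000 / (width * height))
    return round(width * scale), round(height * scale)


full_w, full_h = 11648, 8736  # assumed GFX 100S full-resolution output
small_w, small_h = resize_to_megapixels(full_w, full_h, 30)
print(f"{small_w} x {small_h} px = {small_w * small_h / 1e6:.1f} MP")
print(f"upscale needed per side: {full_w / small_w:.2f}x")
```

That works out to about 6325 x 4743 pixels, so each upscaler has to enlarge each side by roughly 1.84x to get back to full size.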

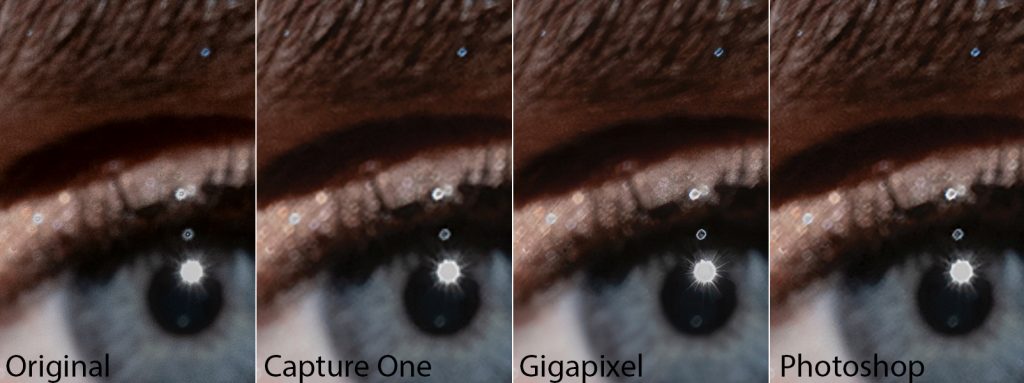

For this test, I’ll be using three pieces of software and comparing their results to the original image: Capture One’s basic upscaling, Adobe Photoshop 2023, and Topaz Labs Gigapixel AI. Because I can’t show 100-megapixel images on this blog (for loading reasons), I’ll instead use crops of each image to give the best representation of each piece of software. But first, let’s look at the tools and techniques I’ll be using for each upscale.

Capture One Pro

Unlike the other two, which use machine learning to handle upscaling, Capture One Pro appears to take the old-fashioned approach of stretching the pixels. For that reason, Capture One Pro will largely serve as the control for this test. I’ll simply import the downscaled image into Capture One and then export it with the output resolution increased.

Adobe Photoshop 2023

Adobe has shown off some incredible AI tools in its latest release of Photoshop, and one of them is the “Super Resolution” feature, which promises a 4x increase in resolution: it doubles both the width and the height of a photo, quadrupling the pixel count. I’ll be using that tool, which is found in the ‘Enhance’ dialog of Adobe Camera Raw, Photoshop’s RAW image importer.
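The ‘4x’ refers to pixel count, not width: doubling each side quadruples the area. A quick Python check of the arithmetic, using hypothetical input dimensions for a roughly 30 MP, 4:3 file, shows that a single 2x-per-side pass lands around 120 MP, above the 100 MP target used in this test.

```python
def enlarge(width: int, height: int, per_side: int = 2) -> tuple[int, int]:
    """Pixel dimensions after scaling both sides by the same factor."""
    return width * per_side, height * per_side


def megapixels(width: int, height: int) -> float:
    """Total pixel count in millions."""
    return width * height / 1_000_000


# Hypothetical ~30 MP, 4:3 input file.
w, h = 6325, 4743
big_w, big_h = enlarge(w, h)
print(f"{megapixels(w, h):.1f} MP -> {megapixels(big_w, big_h):.1f} MP")
# 30.0 MP -> 120.0 MP
```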

Topaz Labs Gigapixel AI

Finally, I’ll be testing Gigapixel AI from Topaz Labs, which seems to be the favorite in forum and Reddit posts. Gigapixel uses AI to increase the resolution of images and offers a few different methods depending on what you’re looking to have done. I’ll be using the method best suited to the images tested, which is, fittingly, called High Res.

The Tests

I took four images from my portfolio, downscaled each to roughly 30 megapixels, and then upscaled them back to their original 100-megapixel size. The results are below; clicking on a comparison image should open it at full resolution.
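The comparisons here are judged visually. If you want to attach a number to your own crops, peak signal-to-noise ratio (PSNR) against the original is one common, if imperfect, measure. The Python sketch below isn’t how the crops that follow were evaluated; it assumes two same-sized grayscale crops stored as rows of 0 to 255 values, and the function name is illustrative.

```python
import math


def psnr(original: list[list[float]], upscaled: list[list[float]], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio, in dB, between two same-sized grayscale crops.

    Higher means closer to the original; identical crops return infinity."""
    if len(original) != len(upscaled) or any(len(a) != len(b) for a, b in zip(original, upscaled)):
        raise ValueError("crops must have the same dimensions")
    squared_error = 0.0
    count = 0
    for row_a, row_b in zip(original, upscaled):
        for a, b in zip(row_a, row_b):
            squared_error += (a - b) ** 2
            count += 1
    mse = squared_error / count  # mean squared error per pixel
    if mse == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / mse)


print(f"{psnr([[0, 255]], [[0, 250]]):.2f} dB")
```

Keep in mind that PSNR rewards pixel-level agreement, so an AI upscaler that invents convincing but slightly different texture can score lower than a softer result that looks worse to the eye.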

Image 1

Image 2

Image 3

Image 4

Results

Given the massive resolution of the images, I had to take tight crops of each photo to make the comparison. I looked for areas containing the smallest details, since that’s usually where upscaling struggles the most. Overall, I was pretty impressed with all of the upscaling options available, but Gigapixel was by far the best overall. In particular, Gigapixel did the best job with micro-contrast in the images, though it admittedly struggles a little with color (the lashes in Image 2 are a good example).

But to go back to the question in the title of the article: is the megapixel race finally over? Yes, I think so. As long as you have a camera that can shoot 25 to 30 MP, you should easily be able to upscale the images to larger formats without anyone noticing. The introduction of machine learning over the last few years has made upscaling better than ever before, and as a result, your megapixel count is less important than ever.

But which upscaling option was your favorite? Feel free to chime in in the comments below.


Author: Zach Sutton

I’m Zach and I’m the editor and a frequent writer here at Lensrentals.com. I’m also a commercial beauty photographer in Los Angeles, CA, and offer educational workshops on photography and lighting all over North America.
